Quick guide to optimizing your game for TitaniumGL.

How and why should a developer optimize a game for TitaniumGL?


People who use TitaniumGL usually have a configuration with broken or missing OpenGL support. If you are a game developer, it may be a good idea to make sure your product works with TitaniumGL. Optimizing your game for TitaniumGL is very easy: you will face the same kind of issues as when optimizing for ATi or nVidia drivers. Don't forget: most TitaniumGL users have old graphics cards. These cards are very slow, so if you want to create a TitaniumGL optimisation profile, you will probably have to use the lowest settings.

Geometry

-TitaniumGL is triangle based. This means that plain triangle input will be the fastest in EVERY case. Triangle strips, quads, quad strips and triangle fans will be slower than triangle-based geometry. Quad strips and triangle fans are the slowest.
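Since plain triangles are the fast path, geometry stored as quads, strips or fans can be expanded into triangle lists once at load time. The following is only a minimal sketch in C and is not part of TitaniumGL: the helper names `quads_to_triangles`, `strip_to_triangles` and `fan_to_triangles` are hypothetical, and the functions only rewrite index lists, on the assumption that the result is then submitted as GL_TRIANGLES.

```c
#include <stddef.h>

/* Hypothetical helper: expand GL_QUADS-style indices (4 per quad) into
   triangle indices (6 per quad). Returns the number of indices written.
   out must have room for (count / 4) * 6 entries. */
size_t quads_to_triangles(const unsigned *in, size_t count, unsigned *out)
{
    size_t n = 0;
    for (size_t i = 0; i + 3 < count; i += 4) {
        out[n++] = in[i]; out[n++] = in[i + 1]; out[n++] = in[i + 2];
        out[n++] = in[i]; out[n++] = in[i + 2]; out[n++] = in[i + 3];
    }
    return n;
}

/* Hypothetical helper: expand a GL_TRIANGLE_STRIP-style index run into
   separate triangles. Every second triangle swaps its first two indices
   so that the winding order stays consistent.
   out must have room for (count - 2) * 3 entries. */
size_t strip_to_triangles(const unsigned *in, size_t count, unsigned *out)
{
    size_t n = 0;
    for (size_t i = 0; i + 2 < count; i++) {
        if (i % 2 == 0) {
            out[n++] = in[i];     out[n++] = in[i + 1];
        } else {
            out[n++] = in[i + 1]; out[n++] = in[i];
        }
        out[n++] = in[i + 2];
    }
    return n;
}

/* Hypothetical helper: expand a GL_TRIANGLE_FAN-style index run into
   separate triangles that all share the first index.
   out must have room for (count - 2) * 3 entries. */
size_t fan_to_triangles(const unsigned *in, size_t count, unsigned *out)
{
    size_t n = 0;
    for (size_t i = 1; i + 1 < count; i++) {
        out[n++] = in[0]; out[n++] = in[i]; out[n++] = in[i + 1];
    }
    return n;
}
```

For example, the quad with indices 0, 1, 2, 3 becomes the two triangles 0, 1, 2 and 0, 2, 3. Doing the conversion once while loading keeps the per-frame work to plain triangle submission.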

-If you can, avoid using drawarrays. It may be slow.

Texture handling

-Rendering multitextured geometry is faster than rendering the geometry twice.

Pipeline implementation

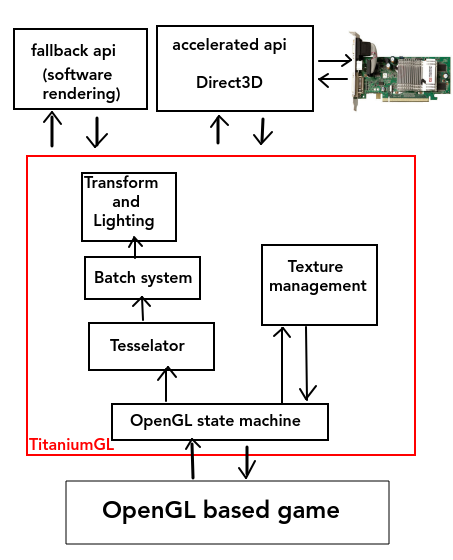

-Do not use stencil/alpha/lookop buffers. They will not work.

-Old cards do not implement some blending modes; additive blending and alpha blending are the exceptions, so it is best to use only these two kinds of blending. Some blending modes are unimplemented even in TitaniumGL itself.

-Material handling is not yet correctly implemented, and light management is buggy. Do not expect good shading at all.

-TitaniumGL (like some other vendors) will NOT lose your device, even if you try to force it. Destroying and recreating a context is a very bad way to delete your textures, because TitaniumGL will simply not do it. Doing it 10 times to be sure is a very, very bad idea.

-You cannot access TitaniumGL from multiple threads. It will crash. Don't even attempt it.

-Hammering thousands of OpenGL initialization functions in search of magical bits here and there is a super bad idea.

-TitaniumGL does not rely much on the DirectX stack and minimizes its interaction with Direct3D. Unlike other OpenGL-to-Direct3D wrappers, it uses DirectX only to render the geometry with hardware acceleration, not to implement the whole OpenGL API. Because of this, TitaniumGL avoids the hardware design flaws and driver problems of graphics cards, but stays fast because the 3D rendering is accelerated. This is why the same features are available on all graphics cards with TitaniumGL.

Bug reports

Every TitaniumGL version is tested with 30-40 games, 10-20 feature-test mini-apps, and some stress-test applications. TitaniumGL has a pointer-corruption detector, internal memory protection, pointer overrun protection, and several other security locks. TitaniumGL has some debugging features too, but these require a specially compiled version. If you send me a bug report, it is added to my bug list and I will check it. This does not mean that it will be fixed in the upcoming versions, but I will try to fix it.
If you have donated, your bug report will be investigated with higher priority, but this still does not mean that I can fix your issue, because a driver is always very complicated. Always use the newest version!

-If you are a gamer and you have found a bug, you can send a bug report to my email address. The bug report must contain the name of the buggy game/software, the name of your graphics card, your CPU, the size of your RAM, and of course the bug itself. No other information about your computer is needed.

-If you are a developer and you have found a bug, you can send a bug report to my email address. Mostly it is much simpler to fix the problem in your application by replacing the problematic parts. Your bug report must contain the name of your software, the name of your graphics card, and of course the bug itself. No other information about your computer is needed. Please describe which function caused the bug. If it crashes, please remove the other code and test the crashing code alone. Does it still crash? Do you use multithreading? Or is the graphics corrupt? How do you render the corrupt parts? Is it still corrupt if you render them a different way? It helps to send me screenshots of the bug and of the correct image. You can also send me source code; in this case please tell me the relevant file and line in your source.