Communication Networks/Print version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Communication_Networks

Introduction

What is this book about?

This book is about electrical communication networks, including analog, digital, and hybrid networks. We will look at both broadcast and bi-directional data networks. This book focuses on existing technology and does not dwell on mathematical theory.

What will this book cover?

This is an example-driven book. We will use examples of real-world communication technologies and communication networks to teach and demonstrate some of the principles behind communication theory. We will discuss examples of communication networks and introduce the mathematical principles that those networks rely on.

Who is this book for?

This book is intended for advanced undergraduates in electrical engineering or a related field.

What are the prerequisites?

The reader of this book should have a solid background in the subjects discussed in Signals and Systems and Communication Systems. Familiarity with algebra and calculus is also helpful, although not strictly required.

What are networks?

The idea of networking is an old one. A network can be defined as "A collection of two or more devices which are interconnected using common protocols to exchange data."

"A collection of two or more devices.."

A network can be of practically any size. The only practical limitations are those imposed by the protocols it implements. A small home network may consist of just 2 computers that share a common connection to the Internet. A larger example is a corporate network, where every employee in each department has a workstation that they use to access not only the Internet but also the servers deployed throughout the network and the workstations of other employees. Furthermore, a network device is not limited to a PC workstation. Many other devices can be connected to a network, including routers, switches, bridges, access points, and firewalls.

"..which are interconnected using common protocols.."

The fundamental requirement for two devices to communicate is that they use the same protocol for exchanging information. This is no different from human communication. In this analogy, the language used can be considered the protocol: if I want to communicate with someone else, we must both speak the same language, regardless of what that language is. In the same way, for two devices to exchange information, they must share a common set of rules or specifications for communicating with each other.

The primary purpose of a network is to exchange data. The devices connected to a network may play one (or more) of several roles in accomplishing that purpose. The most common are the PC workstation and the server, where data is originated and stored. Other devices, such as routers, help get that data from one point on a network to another. To satisfy the requirements of a network, the original developers recognized the need for open standards, so that anyone can contribute to and use network technology. The International Organization for Standardization (ISO) developed the seven-layer Open Systems Interconnection (OSI) reference model, which defines standards for how networks operate.

Authors

Authors of this book

- Anish Arkatkar : Started this book

- Kamlesh Kudchadkar : Introducing Wireless (802.11) Security for this course.

- Hardik Vaghela : Section on Data Link Layer (Error Control).

- Ketan Nawal : Section on Data Link Layer (Flow Control).

- Priyank Gandhi : Section on Data Link Layer (MAC).

- Satish Gavali : Section on HyperText Transfer Protocol (HTTP).

- Richard Bhuleskar : Section on Domain Name System (DNS).

- Gopal Paliwal : Section on Transmission Control Protocol (TCP).

- Devarshi Vyas : Section on User Datagram Protocol (UDP).

- Yan Chen : Contributed content and reformatted the book into individual pages.

Acknowledgments

This book was created from contributions by Computer Engineering students of San Jose State University, CMPE206 (Fall 2006).

Licensing


The text of this book is released under the following license:

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License."

History of Networking

Timeline

The cellular concept of space-divided networks was first developed at AT&T in the 1940s and 1950s. AMPS, an analog frequency-division multiplexing network, was first implemented in Chicago in 1983 and was completely saturated with users by the next year. In response to overwhelming user demand, the FCC increased the available cellular bandwidth from 40 MHz to 50 MHz.

The second generation (see below) started in the early 1990s with the advent of the Digital European Cordless Telephone (DECT) system and the Global System for Mobile Communications (GSM). Wireless data networks such as HiperLAN were also developed, and the IEEE 802.11 working group produced the legacy 802.11 standard in 1997. The 802.11a and 802.11b revisions were standardized in October 1999.

The third generation started with the CDMA2000 standard in Korea, UMTS in Europe, and FOMA in Japan. The IEEE 802.16 (WiMAX) specification was approved in December 2001.

Wireless Generations

It is often instructive to break the history of wireless networking up into several specific generations.

First Generation (1G)

The 1G wireless generation consisted mainly of analog signals carrying voice and music. These were one-directional broadcast systems such as television broadcasts, AM/FM radio, and similar communications.

Second Generation (2G)

2G introduced concepts such as TDMA and CDMA to allow bi-directional communication among nodes in large networks. 2G brought the first digital cellular phones, although communications were restricted to very low bit rates.

The second generation is frequently divided into subsets as well. "2.5G" (for example, GPRS) represented a significant increase in throughput as digital communication techniques became more refined. "2.75G" (for example, EDGE) is another common pseudo-generation that brought a further increase in speed and capacity to digital wireless networks.

Third Generation (3G)

3G represents the combination of voice traffic with data traffic and the advent of high-bandwidth mobile devices such as PDAs and smartphones. The frequency band used varies with the mobile technology standard adopted in the system. HSDPA deployments support downlink speeds of 1.8, 3.6, 7.2, and 14.0 Mbit/s, and HSUPA, part of the HSPA family, supports uplink speeds of up to 5.76 Mbit/s.

Fourth Generation (4G)

4G is the current generation of wireless networks and is characterized by ubiquitous broadband data connections and universal Internet access. Many of these networks are being designed around the WiMAX (IEEE 802.16) specification. 4G has created a paradigm shift toward treating voice as data, with technologies like Voice over LTE (VoLTE) aiming to replace traditional circuit-switched voice networks. 4G LTE can reach speeds of up to 20 Mbit/s, which can exceed the bandwidth of some home Wi-Fi connections. LTE Advanced (standardized by 3GPP in Release 10) targets peak rates of 1000 Mbit/s downlink and 500 Mbit/s uplink.

Fifth Generation (5G)

The next generation of wireless networks, currently under development.

Network Basics

What are networks?

Networks are large distributed systems designed to send information from one location to another. An end point is a place in a network where data transmission either originates or terminates. A node is a point in the network that data passes through without stopping. Nodes are connected by channels, the paths along which data flows. Channels can be physical objects such as a wire or a fiber-optic cable, or they can be less tangible, like a wireless connection at a particular frequency.

Providers and consumers

An end point that produces information is known as a producer or a server. An endpoint that receives information is known as a consumer or a client. In many networks, such as bi-directional networks, an endpoint can be both a client and a server.

Bi-directional communications

Bi-directional communication means that data flows both to and from an end point. An end point can be both a client and a server.

Point-to-point communication

Some channels are point-to-point: they have only a single producer (at one end), and a single consumer (at the far end).

Many networks have "full duplex" communication between nodes, meaning they have 2 separate point-to-point channels (one in each direction) between the nodes (on separate wires or allocated to separate frequencies).

Some "mesh" networks are built from point-to-point channels. Since wiring every node to every other node is prohibitively expensive, when one node needs to communicate with a distant node, the "intermediate" nodes must pass the information along.

Multiple access

Multiple access networks are networks where multiple clients, multiple servers, or both are attempting to access the network simultaneously. Networks with one server and multiple clients are called "broadcast networks", "multicast networks", or "SIMO networks". "SIMO" stands for "Single Input Multiple Output". Networks with multiple clients and servers are known as "MIMO" or "Multiple Input Multiple Output" networks.

Data collisions

In a MIMO network, when multiple servers attempt to send data on a single channel at the same time, a data collision occurs. Because data is typically carried as electrical signals or electromagnetic radiation, the overlapping signals interfere and both pieces of information become unreadable. Clients on the network will either read meaningless data (garbage data) or read no data at all. MIMO networks therefore use some form of collision avoidance or collision detection to keep data collisions from affecting the network.

Networks with only one fixed sender per channel (point-to-point channels and SIMO channels) never have data collisions on the channel.
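A toy model can make the collision rule concrete. The sketch below assumes a shared channel divided into discrete time slots; the slotted model and the input format are assumptions of this example, not details from the text. Each slot is labeled idle, successful, or collided depending on how many senders transmit in it.

```python
def classify_slots(transmissions):
    """Classify each time slot of a shared channel.

    transmissions: hypothetical input format; transmissions[t] is the
    list of senders that transmit during slot t.
    """
    results = []
    for senders in transmissions:
        if not senders:
            results.append("idle")
        elif len(senders) == 1:
            # Exactly one sender: clients can read the data.
            results.append("success:" + senders[0])
        else:
            # Two or more overlapping signals: the data is unreadable.
            results.append("collision")
    return results


# MIMO channel: servers A and B share one channel.
print(classify_slots([["A"], ["A", "B"], [], ["B"]]))
# SIMO channel: only server A ever transmits, so no slot can collide.
print(classify_slots([["A"], [], ["A"], ["A"]]))
```

The second call shows why a channel with one fixed sender never collides. At most one name can ever appear in a slot.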

Network Topologies

Topologies

The shape of a network, and the relationship between the nodes in that network is known as the network topology. The network topology determines, in large part, what kinds of functions the network can perform, and what the quality of the communication will be between nodes.

Common Network Topologies


Star Topology

A star topology creates a network by arranging 2 or more host machines around a central hub. A variation of this topology, the star ring topology, is in common use today. The star topology is still regarded as one of the major network topologies. A star topology is typically used in a broadcast or SIMO network, where a single information source communicates directly with multiple clients. An example is a radio station, where a single antenna transmits data directly to many radios. If a star network has n nodes (counting the hub), it needs n − 1 links between them.
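The n − 1 rule is easier to appreciate beside the cost of wiring every node directly to every other node, which the text elsewhere calls prohibitively expensive. Here is a minimal sketch. It assumes that n counts the hub and that a full mesh uses one dedicated link per pair of nodes.

```python
def star_links(n):
    """Links in a star of n nodes (hub included): one per non-hub node."""
    if n < 2:
        raise ValueError("a star needs at least 2 nodes")
    return n - 1


def full_mesh_links(n):
    """Links needed to wire every node directly to every other node."""
    return n * (n - 1) // 2


for n in (2, 5, 10, 50):
    print(n, star_links(n), full_mesh_links(n))
```

For 50 nodes, a star needs 49 links while a full mesh needs 1,225. The mesh cost grows with the square of the node count.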

Tree Topology

A tree topology is so named because it resembles the tree data structure from computer science. The tree has a root node, which forms the base of the network. The root node communicates with a number of smaller nodes, and those in turn communicate with an even greater number of smaller nodes. A host that branches off from the main tree is called a leaf. If a leaf fails, its connection is isolated and the rest of the LAN can continue to operate.

An example of a tree topology network is the DNS system. DNS root servers connect to DNS regional servers, which connect to local DNS servers, which then connect with individual networks and computers. For your personal computer to talk to the root DNS server, it needs to send a request through the local DNS server, through the regional DNS server, and then to the root server. This is a good example of a tree topology.
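The request path in the DNS example is simply a walk from a leaf up to the root. The sketch below models the tree as a parent table that follows the text's root, regional, and local layers. Every server and host name in it is hypothetical.

```python
# Hypothetical tree following the text's model: root -> regional -> local -> host.
PARENT = {
    "regional-west": "root",
    "regional-east": "root",
    "local-sj": "regional-west",
    "local-ny": "regional-east",
    "my-pc": "local-sj",
}


def path_to_root(node, parent=PARENT):
    """Return the chain of nodes a request passes through to reach the root."""
    path = [node]
    while node in parent:          # the root has no parent entry
        node = parent[node]
        path.append(node)
    return path


print(" -> ".join(path_to_root("my-pc")))
```

This prints `my-pc -> local-sj -> regional-west -> root`. Every request from the leaf climbs through each intermediate layer in turn.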

Ring Topology

A ring topology (commonly implemented as a token ring) creates a network by arranging 2 or more hosts in a circle. Data is passed between hosts by means of a token, which circulates rapidly around the ring in one direction at all times. If a host wants to send data to another host, it attaches that data, together with the address of the intended recipient, to the token as it passes by. Each host checks whether the destination MAC address attached to the token matches its own. If it does, the host takes the data and the message is delivered. A variation of this topology, the star ring topology, is in common use today.
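The token-passing procedure described above can be sketched as a short simulation. It is a simplification that assumes the token stops being carried once the destination takes the data, and the MAC addresses are made-up placeholders. It is not a model of any particular token ring standard.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Token:
    dest: Optional[str] = None     # destination MAC; None means the token is free
    payload: Optional[str] = None


def send_on_ring(ring: List[str], sender: str, dest: str, payload: str) -> List[str]:
    """Simulate one delivery; return the hosts the token passed after the sender."""
    token = Token()
    # As the free token passes the sender, it attaches data and destination.
    token.dest, token.payload = dest, payload
    start = ring.index(sender)
    visited = []
    for step in range(1, len(ring)):
        host = ring[(start + step) % len(ring)]   # token moves in one direction
        visited.append(host)
        if host == token.dest:                    # MAC address matches
            print(f"{host} took {token.payload!r} from the token")
            token.dest = token.payload = None     # token is free again
            break
    return visited


ring = ["00:aa", "00:bb", "00:cc", "00:dd"]
print(send_on_ring(ring, "00:cc", "00:aa", "hello"))
```

Because the token only travels one way, the message from `00:cc` reaches `00:aa` by passing `00:dd` first, and it never passes `00:bb`.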

Mesh Topology

A mesh topology creates a network by ensuring that every host machine is connected to more than one other host machine on the local area network. The main purpose of this topology is fault tolerance, as opposed to a bus topology, where the entire LAN will go down if one host fails. In a mesh topology, as long as 2 machines with a working connection are still functioning, a LAN will still exist.

The mesh topology is still regarded as one of the major network topologies of the networking world.
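The fault-tolerance claim can be checked with a breadth-first search over the hosts that are still working. The sketch below uses a hypothetical four-host partial mesh. For comparison it uses a simple chain of hosts rather than a coaxial bus, which is an assumption of this example.

```python
from collections import deque


def still_connected(links, failed):
    """Return True if every working host can still reach every other one.

    links: dict mapping each host to the set of hosts it is cabled to.
    failed: set of hosts that have gone down.
    """
    alive = [h for h in links if h not in failed]
    if len(alive) < 2:
        return True
    seen = {alive[0]}
    queue = deque([alive[0]])
    while queue:                      # breadth-first search over working hosts
        host = queue.popleft()
        for nbr in links[host]:
            if nbr not in failed and nbr not in seen:
                seen.add(nbr)
                queue.append(nbr)
    return len(seen) == len(alive)


# Hypothetical partial mesh: every host has at least two connections.
mesh = {"A": {"B", "C"}, "B": {"A", "C", "D"}, "C": {"A", "B", "D"}, "D": {"B", "C"}}
print(still_connected(mesh, failed={"B"}))   # True: A, C and D remain linked
# A chain of hosts for comparison: losing the middle host splits the network.
chain = {"A": {"B"}, "B": {"A", "C"}, "C": {"B"}}
print(still_connected(chain, failed={"B"}))  # False
```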

Line Topology

This rare topology works by connecting every host to the host located to its right. Most networking professionals do not regard it as a distinct topology, because it is much more practical to connect the hosts at either end to form a ring topology, which is more efficient.

Bus Topology

A bus topology creates a network by connecting 2 or more hosts to a length of coaxial backbone cabling. In this topology, a terminator must be placed at each end of the backbone cable. Mike Meyers' Network+ textbook compares this network to a series of pipes that water travels through. Think of the data as water; in this analogy, the terminators keep the water from flowing out of the network.

Hybrid Topologies

A hybrid topology, which is what most networks implement today, combines multiple basic network topologies, usually by functioning as one topology logically while appearing as another physically. The most common hybrid topologies are star bus and star ring.

Network Areas

Wireless networks do not have fixed topologies, so it makes little sense to talk about the shape of these networks. Instead, other characteristics, such as network size and node mobility, are of primary importance.

Wireless networks and networking protocols can be divided based on their intended range. Networks with smaller ranges have smaller power requirements and often have less noise to deal with. However, small networks can communicate with only a small number of clients compared with larger networks. Increasing the number of clients in a network is often more useful, but more aggressive techniques are then needed to prevent data collisions among multiple users in a large network.

Network Size Designations

- Personal Area Network (PAN)

- Extremely small networks, often referred to as "piconets", that encompass an area around a single person. These networks, such as Bluetooth, have a range of only 1-5 meters and tend to have very low power requirements, but also very low data rates.

- Local Area Network (LAN)

- LANs can encompass a building such as a house or an office, or a single floor in a multi-story building. Common LANs are IEEE 802.11x networks, such as 802.11a, 802.11g, and 802.11n.

- Metropolitan Area Network (MAN)

- These networks are designed to cover large municipal areas. Data protocols such as WiMAX (802.16) and Cellular 3G networks are MAN networks.

- Wide Area Network (WAN)

- Wide-Area Networks are very similar to MANs, and the two terms are often used interchangeably. WiMAX is also considered a WAN protocol. Television and radio broadcasts are frequently also considered MAN and WAN systems.

- Regional Area Network (RAN)

- Large regional area networks are used to communicate with nodes over very large areas. Examples of RAN are satellite broadcast media, and IEEE 802.22.

- Sensor Area Networks

- These are low-data-rate networks used primarily for embedded computer systems and wireless sensor systems. Protocols such as Zigbee (built on IEEE 802.15.4) and RFID fall into this category.

Cellular Networks

Signal Overlapping

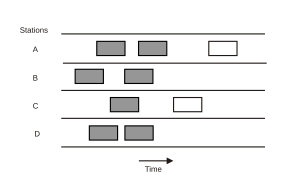

Signals need to be separated in either time, space, or frequency to prevent multiple transmissions from overlapping and interfering with one another. FDMA and TDMA techniques attempt to separate transmissions into different frequency bands and time slices, respectively. These systems allow multiple users in a single area to communicate without data collisions.
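The separation idea can be sketched by giving each user a unique pair of frequency band and time slot, so no two users ever share a channel at the same moment. Combining FDMA and TDMA in a single grid is a choice made for this example. The band count, slot count, and user names are hypothetical.

```python
def assign_channels(users, num_bands, num_slots):
    """Give each user a unique (frequency band, time slot) pair.

    Band index = FDMA dimension, slot index = TDMA dimension.
    Raises ValueError when there are more users than available pairs.
    """
    capacity = num_bands * num_slots
    if len(users) > capacity:
        raise ValueError(f"only {capacity} channels for {len(users)} users")
    # divmod(i, num_slots) -> (band, slot): fill each band's slots in turn.
    return {user: divmod(i, num_slots) for i, user in enumerate(users)}


plan = assign_channels(["u1", "u2", "u3", "u4", "u5"], num_bands=2, num_slots=3)
print(plan)
```

No two users receive the same pair, so none of their transmissions overlap. A sixth user would still fit, but a seventh would exceed the 2 × 3 capacity.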

However, networks can also be physically separated by space to prevent data collisions. In such cases, users can communicate at the same time on the same channel, so long as they are in different networks in different places.

Cellular networks break large networks into smaller groups called "cells". Each cell uses a different set of frequencies from its neighbors, so frequencies can be reused by non-adjacent cells without causing interference.

Example: Cellular Phones

Cellular phones, or mobile phones, are a very common sight today. Cellular phones connect wirelessly to a local base station, which receives the phone signal and transmits it into the phone network.

Handoff (also called handover) occurs when a mobile phone moves from one cell to another. In addition to voice data, cellular phones also transmit and receive control data. The control data tells the phone how far it is from the base station. As a phone moves from one cell to another, its distance to the new base station becomes smaller than its distance to the old one. At that point, the new base station begins handling the call and the old base station stops communicating with the phone.
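The distance rule in the paragraph above can be sketched directly: the phone is served by the nearest base station, and a handoff happens when that changes. This follows the text's distance-based description only. The coordinates and station names are hypothetical, and the sketch ignores the signal-strength measurements a real system would also weigh.

```python
import math


def serving_station(phone, stations):
    """Return the name of the base station nearest to the phone's position."""
    return min(stations, key=lambda name: math.dist(phone, stations[name]))


# Hypothetical layout: two base stations 10 km apart (coordinates in km).
stations = {"cell-A": (0.0, 0.0), "cell-B": (10.0, 0.0)}

current = None
for x in range(0, 11, 2):                  # phone drives east along y = 1 km
    best = serving_station((float(x), 1.0), stations)
    if best != current:
        action = "attach to" if current is None else "handoff to"
        print(f"x={x} km: {action} {best}")
        current = best
```

With these coordinates, the loop reports attaching to cell-A at x=0 km and a handoff to cell-B at x=6 km. That is the first sampled point past the midpoint between the two stations.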

Modeling Cells

Cells are typically modeled as regular hexagons, because in a hexagonal grid the centers of all adjacent cells are equidistant. To avoid interference, adjacent cells do not use the same frequencies. However, if every cell used unique frequencies, there would not be enough frequencies to implement a large network. Frequency reuse solves this by grouping cells into clusters within which no two cells share frequencies; this pattern is then tessellated to fill the service area. The number of cells in a cluster is called the reuse factor. Common reuse factors include 1, 3, 4, 7, 9, 12, 13, 16, 19, and 21.

In these hexagons, only four frequency bands are required to provide non-overlapping service to the entire network.
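The list of common reuse factors given above is not arbitrary. On a hexagonal grid, the standard result from cellular geometry (not stated in the text) is that valid cluster sizes have the form N = i² + ij + j² for non-negative integers i and j that are not both zero. A short enumeration reproduces the list.

```python
def hex_cluster_sizes(limit):
    """Cluster sizes N = i*i + i*j + j*j (i, j >= 0, not both 0) up to limit.

    These are the cluster sizes that tile a hexagonal grid so that
    co-channel cells sit at regular positions.
    """
    sizes = set()
    for i in range(limit + 1):
        for j in range(limit + 1):
            n = i * i + i * j + j * j
            if 0 < n <= limit:
                sizes.add(n)
    return sorted(sizes)


print(hex_cluster_sizes(21))  # [1, 3, 4, 7, 9, 12, 13, 16, 19, 21]
```

Values such as 2, 5, or 8 are missing because no integer pair (i, j) produces them, so those cluster sizes cannot tile the hexagonal grid regularly.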

Network Expansion

As the demand increases, there are multiple ways in which the capacity of the network can be expanded.

Sub-Cells

Sub-cell techniques involve dividing an existing hexagonal cell into 7 smaller sub-cells. Smaller cells mean that smaller base stations can be used, less transmit power is required, and frequencies can be reused more often in a given area. Additionally, giving an entire frequency range to a smaller geographical area means that more people can be served in that area, and data throughput for the entire network can be increased.

Sectoring

Sectoring is similar to the sub-cell concept, except that instead of breaking a cell into smaller cells, a cell is broken up radially into "pie slices" called sectors. Each sector in a cell can reuse frequency ranges. Cells can be broken into 3-sector (120° divisions) and 6-sector (60° divisions) architectures.

Implementations

The reality of cellular networks is far different from the theoretical conception of them. In reality, base stations are not equidistant, and cells are not uniform size or shape. Because of the irregular size, shape, and placement of these cells, frequency orthogonality is more important, and networks often need to make use of many frequency ranges, instead of the theoretical minimum of three.

Duplex Networks

Duplex networks, in which data travels in both directions between two nodes, pose the problem of needing two channels to communicate between two nodes, instead of the single channel a broadcast network needs.

Frequency Division Duplex

Time Division Duplex

Circuit Switching Networks

Older readers may well remember the first incarnation of the telephone network, in which an operator sitting at a desk physically connected wires to route a phone call from one house to another. However, the days when an operator at a desk could handle all the volume and all the possibilities of the telephone network are over. Now, automated systems connect circuits together to carry calls from one side of the country to the other almost instantly.

What is Circuit-Switching?

Circuit switching is a mechanism that assigns a dedicated path from the source node to the destination node for the entire duration of a connection. The plain old telephone service (POTS) is a well-known example of analog circuit switching.
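The "dedicated path for the entire connection" idea can be sketched as reserving one circuit on every link of a route before the call proceeds. If any link is full, the call is blocked. The link names and capacities below are hypothetical, and routing is assumed to have already picked the path.

```python
def setup_call(path, free_circuits):
    """Reserve one circuit on every link of path for the whole call.

    path: list of switch names from source to destination.
    free_circuits: dict mapping (switch, switch) links to free circuit counts.
    Returns True if the call is connected, False if it is blocked.
    """
    links = list(zip(path, path[1:]))
    # The whole path must be available before anything is reserved.
    if any(free_circuits.get(link, 0) == 0 for link in links):
        return False
    for link in links:
        free_circuits[link] -= 1        # held until the call is torn down
    return True


def tear_down(path, free_circuits):
    """Release the circuits held by a finished call."""
    for link in zip(path, path[1:]):
        free_circuits[link] += 1


# Hypothetical trunks with one free circuit each.
trunks = {("home", "office1"): 1, ("office1", "office2"): 1}
route = ["home", "office1", "office2"]
print(setup_call(route, trunks))   # True: circuit reserved end to end
print(setup_call(route, trunks))   # False: no free circuit, call is blocked
tear_down(route, trunks)
print(setup_call(route, trunks))   # True again after the first call ends
```

The reserved capacity stays unavailable to other calls even while the line is silent. That is the basic trade-off of circuit switching.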

Strowger Switch

The Strowger switch was the first automatic switch used in circuit switching. Before it, all switching was done manually by operators working at the various exchanges. It is named after its inventor, Almon Brown Strowger.

Cross-Bar Switch

Telephony

This is a telephone thing

Telephone Network

Rotary vs Touch-Tone

Cellular Network Introduction

Further reading

- We will go into more detail about the telephone network in a later chapter, Communication Networks/Analog and Digital Telephony.

Cable Television Network

The cable television network is something very near and dear to the hearts of many people, but few people understand how cable TV works. The chapters in this section will attempt to explain how cable TV works, and later chapters on advanced television networks will discuss topics such as cable internet and HDTV.

Coaxial cable has a bandwidth in the hundreds of megahertz, which is more than enough to transmit multiple streams of video and audio simultaneously. Some people mistakenly think that the television (or the cable box) sends a signal to the TV station to say which channel it wants, and that the TV station then sends only that channel back to the home. This is not the case. The cable wire carries every channel simultaneously, using frequency division multiplexing.

TV Channels

In North American systems, each TV channel occupies a 6 MHz frequency range. Most of this range carries video data; some carries audio data, some carries control data, and the rest is unused buffer space that helps prevent cross-talk between adjacent channels.
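Frequency division multiplexing on the cable amounts to slicing the usable band into adjacent 6 MHz slots. Every channel stays in its own slot all the time. The sketch below makes that slicing explicit. The 54 to 540 MHz band is a hypothetical choice for illustration, since real cable channel plans differ by operator and region.

```python
CHANNEL_WIDTH_MHZ = 6.0


def channel_plan(start_mhz, stop_mhz, width=CHANNEL_WIDTH_MHZ):
    """Split [start_mhz, stop_mhz) into adjacent channels of equal width.

    Returns a list of (low edge, high edge) tuples in MHz.
    """
    count = int((stop_mhz - start_mhz) // width)
    return [(start_mhz + k * width, start_mhz + (k + 1) * width) for k in range(count)]


# Hypothetical downstream band for illustration only.
plan = channel_plan(54.0, 540.0)
print(len(plan), plan[0], plan[-1])
```

This hypothetical band yields 81 channels, from (54.0, 60.0) up to (534.0, 540.0). The TV tuner picks one slot out of the signal on the wire, which is why every channel can arrive at once.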

Scrambled channels, or "locked channels", are channels that are still sent to your house on the cable wire, but without the control signal that synchronizes the video signal. If you watch a scrambled channel, you can often still make out some images, but they do not line up correctly. When you call to order pay-per-view, or when you buy another channel, the cable company reinserts the control signal into the line, and you can see the descrambled channel.

A descrambler, or "cable black box", is a device that artificially recreates the synchronization signal and realigns the image on the TV. Descrambler boxes are illegal in most places.

NTSC

NTSC, named for the National Television System Committee, is the analog television system used in most of North America, most countries in South America, Burma, South Korea, Taiwan, Japan, the Philippines, and some Pacific island nations and territories. NTSC is also the name of the U.S. standardization body that developed the broadcast standard.[1] The first NTSC standard was developed in 1941 and had no provision for color TV.

In 1953, a second, modified version of the NTSC standard was adopted, which allowed color broadcasting compatible with the existing stock of black-and-white receivers. NTSC was the first widely adopted broadcast color system. After more than half a century of use, the vast majority of over-the-air NTSC transmissions were replaced with ATSC in the United States on June 12, 2009, and in Canada by August 31, 2011.

PAL

PAL stands for Phase Alternating Line.

SECAM

HDTV

Radio Communications

Everybody has a radio, either in the house or in the car. The pages in this chapter will discuss some of the specifics of radio transmission, including the differences between AM and FM radio.

AM Radio

An AM radio receiver demodulates the variations in a carrier wave's amplitude to recover the information signal.

FM Radio

Amateur Radio

Other Modulated Audio

Local Loop

The Local Loop

In telephony, a local loop is the wired connection from a telephone company's end office to customers' homes or small businesses. It is also referred to as the “last mile”, although it can be up to several miles long. The connection is usually a twisted pair of copper wires. The system was originally designed for voice transmission only, using analog transmission technology on a single voice channel. A computer can also send digital data over this analog connection, but the data must first be converted from digital to analog form so that it can be transmitted over the local loop. Modems and codecs perform this conversion. During transmission, transmission lines suffer from three main kinds of impairment:

(i) Attenuation: the loss of signal energy as the signal travels along the line.

(ii) Distortion: caused by different frequency components propagating at different speeds.

(iii) Noise: unwanted energy from sources other than the transmitter.
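Attenuation is normally quoted in decibels, so the received power follows from P_out = P_in × 10^(−loss_dB / 10). The sketch below applies that relation. The 1 mW transmit power, 3 dB/km loss, and 2 km loop length are hypothetical figures, not values from the text.

```python
def received_power_mw(sent_mw, loss_db_per_km, length_km):
    """Power left after a line with the given attenuation (in dB per km)."""
    total_loss_db = loss_db_per_km * length_km
    return sent_mw * 10 ** (-total_loss_db / 10)


# Hypothetical figures: 1 mW sent, 3 dB/km loss, 2 km loop.
print(round(received_power_mw(1.0, 3.0, 2.0), 3))  # 0.251
```

Because decibels add along the line, doubling the loop length doubles the loss in dB. The same change squares the remaining power fraction.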

MODEM

A modem (from modulate and demodulate) is a device that modulates an analog carrier signal to encode digital information, and also demodulates such a carrier signal to decode the transmitted information. The goal is to produce a signal that can be transmitted easily and decoded to reproduce the original digital data. A modem accepts a serial stream of bits as input and produces a carrier modulated by one or more modulation techniques.

Modulation Techniques

AC signaling is used on telephone lines because DC signaling is unsuitable: square waves have a wide frequency spectrum, which makes them prone to strong attenuation and delay distortion.

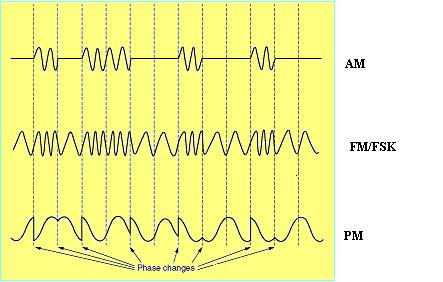

Amplitude Modulation: two different amplitudes are used to represent 0 and 1.

Frequency Modulation/Frequency Shift Keying: two different tones are used to represent 0 and 1. The tones are also called keys.

Phase Modulation: the carrier wave is systematically shifted by 0 or 180 degrees at uniformly spaced intervals.
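The three basic techniques above can be compared by generating carrier samples for the same bit string. This is a minimal sketch. The specific amplitudes (1.0 and 0.5), the tone ratio (2:1), and the sampling density are illustrative assumptions, not values from any modem standard.

```python
import math


def modulate(bits, scheme, samples_per_bit=8, cycles_per_bit=1):
    """Return carrier samples encoding a string of '0'/'1' characters.

    scheme is "ASK" (two amplitudes), "FSK" (two tones) or "PSK"
    (0 or 180 degree phase). All numeric choices are illustrative.
    """
    samples = []
    for bit in bits:
        amplitude, cycles, phase = 1.0, cycles_per_bit, 0.0
        if scheme == "ASK":
            amplitude = 1.0 if bit == "1" else 0.5
        elif scheme == "FSK":
            cycles = 2 * cycles_per_bit if bit == "1" else cycles_per_bit
        elif scheme == "PSK":
            phase = math.pi if bit == "1" else 0.0
        else:
            raise ValueError(f"unknown scheme {scheme!r}")
        for n in range(samples_per_bit):
            t = n / samples_per_bit            # fraction of the bit period
            samples.append(amplitude * math.sin(2 * math.pi * cycles * t + phase))
    return samples


for scheme in ("ASK", "FSK", "PSK"):
    print(scheme, [round(s, 2) for s in modulate("01", scheme, samples_per_bit=4)])
```

In the PSK output, the samples for bit 1 are the negatives of those for bit 0. That is exactly what a 180-degree phase shift does.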

All advanced modems use a combination of modulation techniques to transmit multiple bits per baud. Most often, multiple amplitudes and phase shifts are combined to transmit several bits per symbol.

Sampling

Higher speeds cannot be obtained simply by increasing the sampling rate, because the bandwidth of a voice-grade telephone line is limited. Most modems sample 2400 times per second, that is, at 2400 baud. The baud rate is the number of symbols (samples) transmitted per second. If n bits are sent per baud, the rate of the modem is n × 2400 bps. The rate of the modem can therefore be increased by increasing the number of bits sent per baud.

There are various ways to pack more bits into each baud. As a baseline, if a symbol consists of 0 volts for logical 0 and 1 volt for logical 1 (one bit per symbol), the bit rate is 2400 bps.

QPSK (Quadrature Phase Shift Keying): each symbol carries 2 bits, so the bit rate is twice the baud rate. QPSK therefore transfers 4800 bps over a 2400-baud line.

QAM-16 (Quadrature Amplitude Modulation): four amplitudes and four phases are used, for a total of 16 different combinations, so each symbol carries 4 bits and the bit rate is four times the baud rate. QAM-16 therefore transfers 9600 bps over a 2400-baud line.

QAM-64: a method of modulating digital signals using both amplitude and phase coding, which can be used for both downstream and upstream transmission. Each symbol carries 6 bits, so the bit rate is six times the baud rate, and QAM-64 transfers 14,400 bps over a 2400-baud line.
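The rule behind these figures is bit rate = baud × bits per symbol, where a constellation of M points carries log2(M) bits per symbol. A short sketch reproduces the numbers above for a 2400-baud line. It assumes uncoded modulation, meaning every bit of the symbol is a data bit.

```python
import math


def bit_rate(baud, constellation_points):
    """Bit rate = symbols per second x bits per symbol (log2 of the points)."""
    bits_per_symbol = math.log2(constellation_points)
    if not bits_per_symbol.is_integer():
        raise ValueError("constellation size must be a power of two")
    return baud * int(bits_per_symbol)


for name, points in [("1 bit/symbol", 2), ("QPSK", 4), ("QAM-16", 16), ("QAM-64", 64)]:
    print(f"{name:>12}: {bit_rate(2400, points)} bps")
```

Each doubling of the constellation adds one bit per symbol. The points then sit closer together, which is why noise becomes a problem.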

TCM (Trellis Coded Modulation): even a small amount of noise in the detected phase or amplitude can cause a large error and many bad bits. To reduce the chance of error, high-speed modems perform error correction by adding an extra bit to each sample.

The V.32 modem standard uses 32 constellation points to transmit 4 data bits and 1 parity bit per symbol at 2400 baud, achieving 9600 bps with error correction.

The V.32 bis modem standard uses 128 constellation points to transmit 6 data bits and 1 parity bit per symbol at 2400 baud, achieving 14,400 bps with error correction.
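With coded modulation, one bit of every symbol is redundancy rather than data, so the data rate is baud × (log2(M) − 1). The sketch below checks the V.32 and V.32 bis figures given in the text. The single redundant bit per symbol is taken from those figures, and the function name is hypothetical.

```python
import math


def coded_bit_rate(baud, constellation_points, redundant_bits_per_symbol=1):
    """Data bits per symbol and data rate when some bits carry redundancy."""
    bits_per_symbol = int(math.log2(constellation_points))
    data_bits = bits_per_symbol - redundant_bits_per_symbol
    return data_bits, baud * data_bits


print(coded_bit_rate(2400, 32))    # V.32 figures from the text: (4, 9600)
print(coded_bit_rate(2400, 128))   # V.32 bis figures from the text: (6, 14400)
```

The redundant bit doubles the number of constellation points needed for the same data rate. In exchange, the receiver can correct many errors that noise would otherwise cause.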

V.34 runs at 28,800 bps at 2400 baud with 12 data bits per symbol.

The final version of V.34 in this series achieves 33,600 bps, using 14 bits per symbol at 2400 baud.

V.90 provides a 33.6 kbps upstream channel (from user to ISP) and a 56 kbps downstream channel (from ISP to user).

V.92 can provide 48 kbps on the upstream channel if the line can handle it. The downstream rate is 56 kbps.

DSL


Digital Subscriber Line

Introduction

Digital Subscriber Line (also known as Digital Subscriber Loop) is a technology that transports high-bandwidth data, such as multimedia, to service subscribers over ordinary twisted-pair copper telephone lines. A DSL line can carry both data and voice signals, and the data part of the line is continuously connected. DSL technology assumes that digital data does not need to be converted into analog form and back; digital data is transmitted directly in digital form, allowing a wider bandwidth for transmission. The signal can also be split so that part of the bandwidth carries an analog signal, allowing users to use the telephone and the computer simultaneously on the same line.

How DSL came into use

Telephone companies came under pressure from the cable TV and satellite industries, which were offering speeds of up to 10 Mbit/s and 50 Mbit/s respectively, while the telephone industry was offering only 56 kbit/s. As the Internet grew into a new business opportunity, the telephone companies realized that they needed a more competitive product, one that could offer both telephony and Internet access over the same local loop. This was the beginning of DSL.

Goals of DSL Service

The main goals of the xDSL services are as follows:

- It must work on the existing twisted pair local loops.

- It must not affect customers' existing telephones and fax machines.

- It must be faster than 56 kbit/s.

- And finally, it should always be on.

How DSL works

DSL connects the computer to the Internet at speeds as fast as 52 Mbit/s, using the twisted-pair copper lines that are commonly used for phone service. Apart from faster download and upload times than traditional modems, DSL offers the benefit of being always on: we don't have to dial up our Internet service provider every time we want to get on the Net. Since DSL connections are dedicated, we don't have to share the line's bandwidth with other users as we do with cable modems. All types of DSL achieve their high speeds in the same way, by sending data over previously unused frequencies on phone lines. Voice signals travel over phone lines at frequencies ranging from 0 kHz to 4 kHz. Standard modems use the same frequencies, but DSL uses frequencies between 25 kHz and 1 MHz. This extra bandwidth allows more data to be sent over the same line. This broadband connection requires special hardware at both the consumer's and the phone company's ends. On the consumer's end, a DSL modem modulates digital information from the computer to send it along the phone line. These signals are then translated by a Digital Subscriber Line Access Multiplexer (DSLAM) located at the phone company's nearest central office. The DSLAM separates the voice from the data signals, sending the data signal to an Internet Service Provider (ISP) and from there to the Internet.

xDSL technologies

There are two main types of technology for the xDSL standards: Discrete Multitone (DMT), which is the most widely used, and Carrierless Amplitude/Phase (CAP) modulation, which was adopted in many of the original installations.

CAP

The CAP method works by taking the entire bandwidth of the copper wires and splitting it into 3 distinct bands, separated from each other to ease interference. Each band is then allocated a particular task. The first band covers 0 to 4 kHz and is used for telephone conversations. The second band occupies 25 to 160 kHz and is used as an upstream channel, while the third band covers 240 kHz up to a maximum (depending on conditions) of 1.5 MHz and is used as a downstream channel. This method was simple and effective, as poor-quality wires or large amounts of interference would not stop the xDSL link from working; instead, they would just limit the range of the third band and result in somewhat reduced speeds.

DMT

The DMT system is much more complex. It works by splitting the entire frequency range (bandwidth) into 247 channels of 4 kHz each and allocating a range of the lower channels, starting at around 8 kHz, as bidirectional channels to provide upstream and downstream capacity. Splitting the bandwidth in this way effectively allows one connection to operate as if there were 247 modems connected to it, each operating in its own 4 kHz channel. The technology used in the DMT system is vastly more complex than that required for the CAP method, as each of the 247 channels requires constant monitoring and assessment. If the system detects that a specific channel or range of channels is suffering from interference or a degradation in quality, the data stream must be automatically moved to different channels. With the DMT system, a low-pass filter must be placed in every telephone socket used for voice calls: voice calls take place below 4 kHz, and the filter simply blocks everything above this to prevent data signals from interfering with the telephone call.
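The per-channel monitoring described above is what lets DMT steer data around bad channels. A minimal Python sketch of the idea, assuming the common textbook "SNR gap" approximation (bits per channel = floor(log2(1 + SNR/gap))) with an assumed gap of 9.8 dB and an assumed cap of 15 bits per channel; real modems use more elaborate bit-loading algorithms, and the SNR values below are hypothetical:

```python
import math

def allocate_bits(snr_db, gap_db=9.8, max_bits=15):
    """Choose how many bits each DMT sub-channel carries per symbol.

    snr_db:   measured SNR of each sub-channel, in dB
    gap_db:   assumed SNR gap, a safety margin for the target error rate
    max_bits: assumed per-channel ceiling
    A channel whose SNR is too low gets 0 bits, so no data is sent on it.
    """
    gap = 10 ** (gap_db / 10)
    allocation = []
    for s_db in snr_db:
        snr = 10 ** (s_db / 10)                       # dB -> linear power ratio
        bits = math.floor(math.log2(1 + snr / gap))
        allocation.append(min(bits, max_bits))
    return allocation

# Four hypothetical sub-channels, from very clean to badly interfered-with.
print(allocate_bits([45, 30, 12, 3]))
```

If interference later lowers one channel's measured SNR, re-running the allocation reduces that channel's share of the data, down to zero, and the remaining channels carry the stream.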

Most popular DSL Service

All the different types of DSL are known collectively as xDSL, where x stands for the particular variant. The term xDSL covers a number of similar yet competing forms of DSL technology, including ADSL, SDSL, HDSL, IDSL, and VDSL.

ADSL

The ADSL service initially offered worked by dividing the spectrum available on the local loop into three frequency bands: one for POTS (Plain Old Telephone Service), one for upstream data, and one for downstream data.

The approach that became dominant, however, is DMT (Discrete MultiTone). It divides the available 1.1 MHz spectrum on the local loop into 256 independent channels of 4312.5 Hz each. Channel 0 is used for POTS. Channels 1-5 are not used, so that the voice and data signals cannot interfere with each other. Of the remaining 250 channels, one is used for downstream control and one for upstream control; the rest are available for user data. It is up to the service provider to determine how many channels to allocate to upstream and downstream. Although a 50-50 split is possible, most providers allocate 32 channels to upstream and the remainder to downstream, because most users download much more data than they upload.

The speed provided by ADSL (ANSI T1.413 and ITU G.992.1) is up to 8 Mbit/s downstream and 1 Mbit/s upstream. Within each channel, a modulation scheme similar to V.34 is used, with a symbol rate of 4000 baud. The actual data is sent using QAM with up to 15 bits per baud.
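The channel plan and per-channel figures above can be turned into a quick calculation. A minimal Python sketch, assuming channel 0 for POTS, channels 1-5 as an unused guard band, two control channels, and 32 of the remaining data channels given to upstream; the text does not say whether the upstream control channel is counted among those 32, so the sketch keeps the two control channels separate. Names are illustrative:

```python
NUM_TONES = 256           # DMT channels on the local loop
TONE_SPACING_HZ = 4312.5  # width of each channel

def adsl_split(upstream: int = 32) -> tuple:
    """Return (upstream, downstream) user-data channel counts."""
    reserved = 1 + 5 + 2                  # POTS + guard band + two control channels
    data_channels = NUM_TONES - reserved  # 248 channels for user data
    return upstream, data_channels - upstream

def ceiling_bps(channels: int, baud: int = 4000, bits_per_baud: int = 15) -> int:
    """Rate if every channel carried the maximum 15 bits per symbol."""
    return channels * baud * bits_per_baud

up, down = adsl_split()
print(NUM_TONES * TONE_SPACING_HZ)  # 1104000.0 (Hz), the "1.1 MHz" of the text
print(up, down)                     # 32 216
print(ceiling_bps(down))            # 12960000, i.e. about 13 Mbit/s
```

In practice most channels have too little SNR to carry 15 bits per symbol, which is why delivered rates, such as the 8 Mbit/s quoted above, sit well below this ceiling.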

- ADSL Arrangement

In a typical ADSL arrangement, the telephone company installs a Network Interface Device (NID) on the customer's premises. A splitter, which is an analog filter that separates the 0-4000 Hz band used by POTS from the data, is combined with the NID. The POTS signal is routed to the telephone, and the data signal is routed to the ADSL modem, which is connected to the computer through an Ethernet card or a USB port. At the other end of the wire, in the central office, a corresponding splitter filters out the voice portion of the signal and sends it to the voice switch. The signal above 26 kHz is routed to a DSLAM (Digital Subscriber Line Access Multiplexer), which contains the same kind of digital signal processor as an ADSL modem. Once the bit stream has been recovered, packets are formed and sent to the Internet Service Provider. The main disadvantage of this arrangement is that a company technician is needed to install the NID, which is very expensive for the company. A splitterless design, commonly known as G.lite, was therefore standardized. The only difference is that a microfilter has to be inserted into each phone jack, between the telephone or ADSL modem and the wire. The microfilter for a telephone is a low-pass filter that eliminates frequencies above 3400 Hz; the microfilter for the ADSL modem is a high-pass filter that eliminates frequencies below 26 kHz. This arrangement is not as reliable as a true splitter, and a splitter is still required in the end office.

Other DSL Services

- ADSL2

ADSL2 is similar to ADSL, and the modems are typically interchangeable. The difference is that ADSL2 offers a downstream rate of up to about 12 Mbit/s, while the upstream rate stays at about 1 Mbit/s, as with regular ADSL. The range of 15,000 feet from the central office also remains the same.

- ADSL 2+

ADSL2+ is the next generation of ADSL broadband. ADSL2+ services are capable of download speeds of up to 24 Mbit/s (depending on your equipment and the length of your copper line) and upload speeds of up to 2.5 Mbit/s (Annex M) or 1 Mbit/s (without Annex M). ADSL2+ runs much faster than standard ADSL. This allows you to get faster speeds at longer distances from your telephone exchange (as per the graph), or to get ADSL where it was previously unavailable.

- SDSL

Symmetric Digital Subscriber Line (SDSL) is a technology that allows more data to be sent over existing copper telephone lines (POTS). SDSL supports data rates of up to 3 Mbit/s. SDSL works by sending digital pulses in the high-frequency area of telephone wires and cannot operate simultaneously with voice connections over the same wires. SDSL requires a special SDSL modem. SDSL is called symmetric because it supports the same data rates for upstream and downstream traffic.

- SHDSL

SHDSL stands for Symmetric High-Bit-Rate Digital Subscriber Loop. SHDSL is designed to transport rate-adaptive symmetrical data across copper pairs. With single-pair operation, SHDSL offers 192 kbit/s to 2.3 Mbit/s, with data rates defined in increments of 8 kbit/s. With dual-pair (4-wire) operation, SHDSL offers 384 kbit/s to 4.6 Mbit/s, with data rates defined in increments of 16 kbit/s; the line rate on both pairs must be the same.
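The rate steps above define a finite set of selectable line rates. A minimal Python sketch that enumerates them, assuming the quoted upper limits of 2.3 Mbit/s and 4.6 Mbit/s correspond to exactly 2304 kbit/s and 4608 kbit/s (192 kbit/s plus a whole number of 8 kbit/s steps); the function name is illustrative:

```python
def shdsl_rates_kbps(pairs: int) -> range:
    """Selectable SHDSL line rates in kbit/s for one or two copper pairs."""
    if pairs == 1:
        return range(192, 2304 + 1, 8)    # 8 kbit/s steps on a single pair
    if pairs == 2:
        return range(384, 4608 + 1, 16)   # 16 kbit/s steps over two pairs
    raise ValueError("SHDSL uses one or two pairs")

print(len(shdsl_rates_kbps(1)), len(shdsl_rates_kbps(2)))  # 265 265
print(2048 in shdsl_rates_kbps(1))                         # True
```

Because both pairs must run at the same line rate, every dual-pair rate is simply twice a single-pair rate, which is why the two lists have the same length.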

- VDSL

VDSL (Very High-Data-Rate Digital Subscriber Line) is basically ADSL at much higher data rates. It is asymmetric and thus has a higher downstream rate than upstream rate. The upstream rates are from 1.5 Mbit/s to 2.3 Mbit/s. The downstream rates and distances are listed in the following table. VDSL is seen as a way to provide very high-speed access for streaming video, combined data and video, video-conferencing, data distribution in campus environments, and the support of multiple connections within apartment buildings.

- VDSL 2

VDSL2, short for Very High Bit Rate DSL 2, is a type of Internet connection that uses the phone line, like other forms of DSL. However, VDSL2 uses up to 30 MHz of spectrum, supports speeds of around 100 Mbit/s on short lines, and has a range of up to about 12,000 feet at lower speeds. These capabilities allow larger volumes of data to be sent at much higher speeds, and over longer distances than VDSL. This explains the growing interest in VDSL2 for Internet service.

Limitations of xDSL

DSL has one significant downside: the farther you are from the central office, the slower your connection is. As you move away from the central office, more distortion enters the line and the signal deteriorates. To counter this, the phone company slows down transmission rates, for example from 1.5 Mbit/s to 384 kbit/s. But slowing the speed only works up to a point: if you live more than about two miles from the nearest central office, you generally can't get DSL at all. According to the industry trade group ADSL Forum, about 60 percent of United States telephone customers live within areas that could support DSL.

Cable


Coaxial cable is an electrical cable consisting of a round conducting wire, surrounded by an insulating spacer, surrounded by a foil, surrounded by a cylindrical conducting sheath, and usually covered by a final insulating layer. It is used as a high-frequency transmission line to carry a high-frequency or broadband signal. Sometimes DC power (called bias) is added to the signal to supply the equipment at the other end, as in direct broadcast satellite receivers. Because the electromagnetic field carrying the signal exists (ideally) only in the space between the inner and outer conductors, it cannot interfere with or suffer interference from external electromagnetic fields. A coaxial cable's self-shielding property is vital to its successful use in broadband carrier systems, undersea cable systems, radio and TV antenna feeders, and community antenna television (CATV) applications.

CATV: Cable television was originally known as Community Antenna Television, or CATV (an abbreviation now often expanded as "community access television"), and is more commonly known today as "cable TV." In addition to bringing television programs to the millions of people throughout the world who are connected to a community antenna, cable TV is an increasingly popular way to interact with the World Wide Web and other new forms of multimedia information and entertainment services.

HFC: Early cable systems were provisioned using only coaxial cable. Modern hybrid fiber-coaxial (HFC) systems use fiber transport from the headend to an optical node located in the neighborhood to reduce system noise. Coaxial cable runs from the node to the subscriber. The fiber plant is generally a star configuration, with all optical node fibers terminating at a headend. The coaxial part of the system is generally a trunk-and-branch configuration.

Internet Over Cable

Over time, cable systems grew, and the cables between cities were replaced with high-bandwidth fiber. A system with fiber for long distances and coaxial cable to the houses is called a Hybrid Fiber Coax (HFC) system. The electro-optical converters that join the fiber and coaxial parts are known as fiber nodes. Because of the high bandwidth of fiber, a single fiber node can feed multiple coaxial cables.

A single cable is shared by many houses, whereas in the telephone system every house has its own local loop. When programs are broadcast, it makes no difference whether there are 10 viewers or 10,000, but when the same cable is used to provide Internet access, it makes a big difference: bandwidth used by one user is not available to the others, so the more users there are, the less bandwidth each one gets. The cable industry has tackled this problem by splitting long cables and connecting each one directly to a fiber node. The bandwidth from the headend to each fiber node is effectively unlimited, so as long as there are not too many users on each cable segment, the situation is under control. As traffic increases, however, more splitting and more fiber nodes will be required.

Spectrum Allocation

It is not practical to use the cable network only for providing Internet connections, so there has to be a way of carrying both Internet access and cable TV over the same cable. Cable TV channels normally occupy the 54-550 MHz region (except for FM radio, which occupies 88-108 MHz). Each channel is 6 MHz wide. Some modern cable systems operate above 550 MHz, often up to 750 MHz, and this extra band is used for downstream data. Upstream channels are placed in the 5-42 MHz band, while frequencies at the high end are used for downstream. For the downstream, each 6 MHz channel is modulated with QAM-64 (6 bits per symbol), giving around 36 Mbit/s gross and about 27 Mbit/s net payload after overhead. The 200 MHz between 550 and 750 MHz holds 33 whole 6 MHz channels, so the total effective downstream bandwidth is 33 × 27 = 891 Mbit/s. Upstream, QAM-64 does not work because there is too much noise from terrestrial microwaves and other sources, so QPSK is used instead. QPSK yields 2 bits per baud, giving around 12 Mbit/s gross and 9 Mbit/s net after overhead per channel. The 37 MHz upstream band holds 6 whole channels, so the total effective upstream bandwidth is 6 × 9 = 54 Mbit/s.
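The aggregate figures come from counting how many whole 6 MHz channels fit in each band and multiplying by the net rate per channel. A minimal Python sketch of that arithmetic, using the per-channel net rates quoted in the text (27 Mbit/s for QAM-64, 9 Mbit/s for QPSK); the function name is illustrative:

```python
def aggregate_mbps(band_mhz: int, net_per_channel_mbps: float, channel_mhz: int = 6) -> float:
    """Total net rate of a band divided into whole 6 MHz channels."""
    channels = band_mhz // channel_mhz   # a partial channel at the edge is unusable
    return channels * net_per_channel_mbps

downstream = aggregate_mbps(750 - 550, 27)  # QAM-64: 33 channels x 27 Mbit/s
upstream = aggregate_mbps(42 - 5, 9)        # QPSK:   6 channels x 9 Mbit/s
print(downstream, upstream)                 # 891 54
```

Rounding the channel count down, rather than dividing the band width directly (200 / 6 × 27 would give 900), reflects that only whole channels can be used.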

Cable Modem

A cable modem is a device that enables you to hook up your PC to a local cable TV line and receive data at about 1.5 Mbit/s. This data rate far exceeds that of the prevalent 28.8 and 56 kbit/s telephone modems and the up to 128 kbit/s of Integrated Services Digital Network (ISDN), and is about the data rate available to subscribers of Digital Subscriber Line (DSL) telephone service. A cable modem can be added to or integrated with a set-top box that provides your TV set with channels for Internet access. In most cases, cable modems are furnished as part of the cable access service and are not purchased and installed by the subscriber. A cable modem has two connections: one to the cable wall outlet and the other to a PC or to a set-top box for a TV set. Although a cable modem, like a telephone modem, converts between analog and digital signals, it is a much more complex device. It can be an external device, or it can be integrated within a computer or set-top box. Typically, the cable modem attaches to a standard 10BASE-T Ethernet card in the computer. All of the cable modems attached to a cable TV company's coaxial cable line communicate with a Cable Modem Termination System (CMTS) at the local cable TV company office. Each cable modem can receive signals from, and send signals to, only the CMTS, not other cable modems on the line. Some services return the upstream signals over a telephone line rather than the cable; in that case, the cable modem is known as a Telco-return cable modem.

The actual bandwidth for Internet service over a cable TV line is up to 27 Mbit/s on the downstream path to the subscriber, with about 2.5 Mbit/s of bandwidth for interactive responses in the other direction. However, since the local provider may not be connected to the Internet by a line faster than a T1 carrier at 1.5 Mbit/s, a more likely data rate is close to 1.5 Mbit/s.

ADSL versus CABLE

Cable and ADSL are broadly similar, and no general conclusion can be drawn about which one is better; it really depends on the circumstances. Some of their differences are as follows:

1. Cable uses coaxial cable, which has much more bandwidth than the ordinary twisted pair used by ADSL; however, much of cable's bandwidth is used up by television programs.

2. Cable subscribers share the line connecting them to neighborhood servers; ADSL subscribers share the line connecting them from the regional telephone office to the main telephone office.

3. ADSL users are hardly affected by the number of other users, since each has a dedicated connection, whereas cable performance drops as more customers subscribe.

4. Not everyone who has a telephone connection can get ADSL, because they may not be close enough to the company's end office. By contrast, anyone who has cable can get Internet access, as long as the cable company provides it.

5. ADSL offers more security than cable. Any cable user can easily read the packets going down the cable unless the cable provider encrypts the traffic in both directions.

6. Because the telephone system is more reliable than the cable system, ADSL is more reliable than cable. In a cable network, if one amplifier fails, all downstream users are cut off instantly.

Questions

Ques1. A modem constellation diagram, similar to those used for QPSK, QAM-16 and QAM-64, has data points at the following coordinates: (1,1), (1,-1), (-1,1), (-1,-1). How many bps can a modem with these parameters achieve at 1200 baud?

Ans1. There are four legal values per baud, so the bit rate is twice the baud rate. At 1200 baud, the data rate is 2400 bit/s.

Ques2. How many frequencies does a full-duplex QAM-64 modem use?

Ans2. Two frequencies are used, one for upstream and one for downstream. The modulation scheme itself just uses amplitude and phase. The frequency is not modulated.

Ques3. A cable company decides to provide Internet access over cable in a neighborhood consisting of 5000 houses. The company uses a coaxial cable and spectrum allocation allowing 100 Mbit/s downstream bandwidth per cable. To attract customers, the company decides to guarantee at least 2 Mbit/s downstream bandwidth to each house at any time. Describe what the cable company needs to do to provide this guarantee.

Ans3. A 2-Mbit/s downstream bandwidth guarantee to each house implies at most 50 houses per coaxial cable. Thus, the cable company will need to split up the existing cable into 100 coaxial cables and connect each of them directly to a fiber node.

Ques4. How fast can a cable user receive the data if the network is otherwise idle?

Ans4. Even if the downstream channel works at 27 Mbit/s, the user interface is nearly always 10-Mbit/s Ethernet, and there is no way to get bits to the computer faster than 10 Mbit/s under these circumstances. If the connection between the PC and the cable modem is Fast Ethernet, then the full 27 Mbit/s may be available. Usually, cable operators specify 10-Mbit/s Ethernet because they do not want one user to take up the entire bandwidth.
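The reasoning in Ans4, like the T1 remark in the Cable Modem section, is a bottleneck argument: on an otherwise idle path, the slowest link sets the achievable rate. A minimal Python sketch, ignoring sharing with other users and protocol overhead; the function name is illustrative:

```python
def path_rate(*link_rates_mbps: float) -> float:
    """On an otherwise idle path, the slowest link sets the achievable rate."""
    return min(link_rates_mbps)

print(path_rate(27, 10))        # cable downstream + 10 Mbit/s Ethernet -> 10
print(path_rate(27, 100))       # cable downstream + Fast Ethernet -> 27
print(path_rate(1.5, 27, 100))  # provider's uplink is a 1.5 Mbit/s T1 line -> 1.5
```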

Parallel vs Serial

In a digital communications system, there are 2 methods for data transfer: parallel and serial. Parallel connections have multiple wires running parallel to each other (hence the name), and can transmit data on all the wires simultaneously. Serial, on the other hand, uses a single wire to transfer the data bits one at a time.

Parallel Data

The parallel port on modern computer systems is an example of a parallel communications connection. The parallel port has 8 data wires, along with a number of ground wires and control wires. IDE hard-disk connectors and PCI expansion slots are other good examples of parallel connections in a computer system.

Serial Data

The serial port on modern computers is a good example of serial communications. Serial ports have either a single data wire, or a single differential pair, and the remainder of the wires are either ground or control signals. USB, FireWire, SATA and PCI Express are good examples of other serial communications standards in modern computers.

Which is Better?

It is a natural question to ask which one of the two transmission methods is better. At first glance, it would seem that parallel ports should be able to send data much faster than serial ports. Let's say we have a parallel connection with 8 data wires, and a serial connection with a single data wire. Simple arithmetic seems to show that the parallel system can transmit 8 times as fast as the serial system.

However, parallel connections suffer greatly from inter-symbol interference (ISI) and noise, and the data can therefore be corrupted over long distances. Also, because adjacent wires in a parallel cable have capacitance and mutual inductance between them, the bandwidth of parallel wires is much lower than the bandwidth of serial wires. We know that an increased bandwidth allows a higher bit rate. We also know that less noise in the channel means a higher signal-to-noise ratio (SNR), which lets us transmit data reliably at a higher rate.

If, however, we send a differential signal over a serial connection using 2 wires (one carrying a positive voltage and the other the corresponding negative voltage), the receiver sees twice the voltage swing for the same voltage on each wire, and noise that is picked up equally on both wires cancels out. This improved SNR lets us reach an even higher bit rate without suffering the effects of noise. USB cables, for instance, use shielded, differential serial communications, and the USB 2.0 standard is capable of data transmission rates of 480 Mbit/s!
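A minimal Python sketch of why differential signalling helps, under the idealized assumption that noise is induced identically on both wires (pure common-mode noise, drawn uniformly at random); real cables only approximate this, which is one reason twisting and shielding are also used. The function and its parameters are illustrative:

```python
import random

def decide_bits(bits, noise_amplitude, differential, v=1.0, seed=1):
    """Send each bit as +v or -v and return the receiver's decisions.

    Idealized noise model: the same random voltage, uniform in
    [-noise_amplitude, noise_amplitude], is induced on every wire.
    """
    rng = random.Random(seed)
    decisions = []
    for bit in bits:
        level = v if bit else -v
        noise = rng.uniform(-noise_amplitude, noise_amplitude)
        if differential:
            wire_a = level + noise     # the signal
            wire_b = -level + noise    # an inverted copy of the signal
            sample = wire_a - wire_b   # = 2 * level: the shared noise cancels
        else:
            sample = level + noise     # one wire measured against ground
        decisions.append(1 if sample > 0 else 0)
    return decisions

rng = random.Random(0)
bits = [rng.randint(0, 1) for _ in range(1000)]
for mode in (False, True):
    errors = sum(a != b for a, b in zip(bits, decide_bits(bits, 1.5, differential=mode)))
    print("differential" if mode else "single-ended", errors)
```

With noise larger than the signal level, the single-ended receiver makes many errors, while the differential receiver, under this idealized model, makes none.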

In addition, because of the increased potential for noise and interference, parallel wires need to be far shorter than serial wires. Consider the standard parallel cable that connects a PC to a printer: it is typically between 3 and 4 feet long, and the longest commercially available is typically about 25 meters (about 82 feet). Now consider Ethernet cables (which are serial, and typically unshielded twisted pair): a single segment can be up to 100 meters (about 330 feet) long, and longer runs are built by chaining segments through switches.

UART, USART

A Universal Asynchronous Receiver/Transmitter (UART) peripheral is used in embedded systems to convert bytes of data to bit strings which may be transmitted asynchronously using a serial protocol like RS-232.
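The byte-to-bit-string conversion a UART performs can be sketched in Python. The sketch assumes the widely used asynchronous character framing (a low start bit, 8 data bits sent least-significant bit first, an optional parity bit, then one or more high stop bits); this framing is a common convention rather than something the text specifies, and the function is illustrative, not any peripheral's register-level interface:

```python
from typing import List, Optional

def uart_frame(byte: int, parity: Optional[str] = None, stop_bits: int = 1) -> List[int]:
    """Line levels for one asynchronously transmitted character (1 = idle/mark)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value from 0 to 255")
    data = [(byte >> i) & 1 for i in range(8)]  # least-significant bit first
    frame = [0] + data                          # start bit: line drops from idle
    if parity == "even":
        frame.append(sum(data) % 2)             # total number of 1s becomes even
    elif parity == "odd":
        frame.append(1 - sum(data) % 2)         # total number of 1s becomes odd
    elif parity is not None:
        raise ValueError("parity must be 'even', 'odd' or None")
    return frame + [1] * stop_bits              # stop bit(s): line returns to idle

print(uart_frame(0x41))  # 'A': [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
```

Because there is no shared clock, the receiver uses the falling edge of the start bit to time its samples of the following bits; a USART in synchronous mode instead relies on the transmitter's clock signal.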

A Universal Synchronous/Asynchronous Receiver/Transmitter (USART) peripheral is just like a UART peripheral, except there is also a provision for synchronous transmission by means of a clock signal which is generated by the transmitter.

Channels


A channel is a communication medium: the path that data takes from source to destination. A channel can consist of many different things: wires, free space, and entire networks. Signals can be routed from one type of network to another network with completely different characteristics. In the Internet, a packet may be sent over a wireless WiFi network to an Ethernet LAN, to a DSL modem, to a fiber-optic backbone, et cetera. The physical characteristics of the different channels determine the three characteristics of interest in communication: the latency, the data rate, and the reliability of the channel.

Bandwidth and Bitrate

Bandwidth is the difference between the upper and lower cutoff frequencies of, for example, a filter, a communication channel, or a signal spectrum. Bandwidth, like frequency, is measured in hertz (Hz). The bandwidth can be physically measured using a spectrum analyzer.

Bandwidth, given by the variables Bw or W, is closely related to the number of digital bits that can be reliably sent over a given channel. For a noiseless channel with two signal levels (Nyquist's result):

$$r_b = 2W$$

where r_b is the maximum bitrate. If we have an M-ary signaling scheme with m levels, each symbol carries log2(m) bits, so we can expand the previous equation to find the maximum bit rate for the given bandwidth:

$$r_b = 2W \log_2 m$$

Example: Bandwidth and Bitrate

Let's say that we have a channel with 1 kHz bandwidth, and we would like to transmit data at 5000 bits/second. We would like to know how many levels of transmission we would need to attain this data rate. Plugging into the second equation, we get the following result:

$$5000 = 2 \cdot 1000 \cdot \log_2 m \quad\Rightarrow\quad \log_2 m = 2.5 \quad\Rightarrow\quad m = 2^{2.5} \approx 5.66$$

However, we know that in M-ary transmission schemes, m must be an integer. Rounding up to the nearest integer, we find that m = 6.
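The same calculation can be done programmatically. A short Python sketch that solves the M-ary relation r_b = 2W log2(m) for the smallest integer number of levels (the function name is illustrative); for the 1 kHz, 5000 bit/s example it gives m = 6:

```python
import math

def min_levels(bandwidth_hz: float, bit_rate: float) -> int:
    """Smallest integer m with 2 * W * log2(m) >= r_b (at least 2 levels)."""
    bits_per_symbol = bit_rate / (2 * bandwidth_hz)  # at most 2W symbols per second
    return max(2, math.ceil(2 ** bits_per_symbol))

print(min_levels(1000, 5000))  # 6
```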

Channel Capacity

The "capacity" of a channel is the theoretical upper-limit to the bit rate over a given channel that will result in negligible errors. Channel capacity is measured in bits/s.

Shannon's channel capacity is an equation that determines the information capacity of a channel from a few physical characteristics of the channel. A communication systems can attempt to exceed the Shannon's capacity of a given channel, but there will be many errors in transmission, and the expense is generally not worth the effort. Shannon's capacity, therefore, is the theoretical maximum bit rate below which information can be transmitted with negligible errors.

The Shannon channel capacity, C, is measured in units of bits/sec and is given by the equation:

$$C = W \log_2(1 + \mathrm{SNR})$$

where C is the maximum capacity of the channel, W is the available bandwidth in the channel, and SNR is the signal-to-noise ratio expressed as a linear power ratio, not in dB.

Because channel capacity is proportional to analog bandwidth, some people call it "digital bandwidth".

Channel Capacity Example

The telephone network has an effective bandwidth of less than 3000 Hz (but we will round up), and transmitted signals have an average SNR of less than 40 dB (a power ratio of 10,000). Plugging those numbers into Shannon's equation, we get the following result:

$$C = 3000 \log_2(1 + 10000) \approx 3000 \times 13.29 \approx 39{,}900 \text{ bits/s}$$

We can see that the theoretical maximum channel capacity of the telephone network (if we generously round up all our numbers) is approximately 40 kbit/s! How, then, can some modems transmit at a rate of 56 kbit/s? It turns out that 56k modems use a trick that we will talk about in a later chapter.
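The telephone-network estimate can be reproduced with a few lines of Python. A minimal sketch of the Shannon formula, converting the SNR from dB to a linear ratio first (the function name is illustrative):

```python
import math

def shannon_capacity(bandwidth_hz: float, snr_db: float) -> float:
    """C = W * log2(1 + SNR), with the SNR converted from dB to a linear ratio."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)

c = shannon_capacity(3000, 40)
print(f"{c / 1000:.1f} kbit/s")   # 39.9 kbit/s
```

Forgetting the dB conversion (using 40 instead of 10,000) is a common mistake and would give only about 16 kbit/s.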

Acknowledgement

Digital information packets have a number of overhead bits known as a header. This is because most digital systems use statistical TDM (as discussed in the Time-Division Multiplexing chapter). The total number of bits sent in a transmission must be at least the sum of the data bits and the header bits. The total number of bits transmitted per second (the "throughput") is always less than the theoretical capacity. Because some of this throughput is used for header bits, the number of data bits transmitted per second (the "goodput") is always less than the throughput.
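The relationship between throughput and goodput is a simple ratio when every packet carries a fixed-size header. A minimal Python sketch; the packet sizes are a hypothetical example (1000-byte payloads with 40 bytes of headers, such as minimal 20-byte IPv4 and 20-byte TCP headers), and the function name is illustrative:

```python
def goodput(throughput_bps: float, payload_bits: int, header_bits: int) -> float:
    """Rate of useful data bits when every packet carries a fixed-size header."""
    return throughput_bps * payload_bits / (payload_bits + header_bits)

rate = goodput(10_000_000, 1000 * 8, 40 * 8)  # 10 Mbit/s of throughput
print(f"{rate / 1e6:.2f} Mbit/s")              # 9.62 Mbit/s
```

Smaller packets make the header a larger fraction of each transmission, so goodput falls further below throughput.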

In addition, since we all want our information to be transmitted reliably, it makes good sense for an intelligent transmitter and an intelligent receiver to check the message for errors.

An essential part of reliable communication is error detection, a subject that we will talk about in more depth later. Error detection is the process of embedding some sort of checksum into the packet, such as the cyclic redundancy check (CRC) in the trailer of an Ethernet frame or the checksum in the IP header. The receiver uses this checksum to detect most errors in the transmission.
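As an illustration of a CRC, here is a bit-by-bit CRC-32 in Python, the 32-bit CRC used in the Ethernet frame trailer, written in its common reflected form (polynomial constant 0xEDB88320). It is checked against Python's `zlib.crc32`; a real network interface computes this in hardware, so the sketch only shows the principle:

```python
import zlib

def crc32_bitwise(data: bytes) -> int:
    """CRC-32 computed one bit at a time (reflected form, polynomial 0xEDB88320)."""
    crc = 0xFFFFFFFF                      # initial value
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xEDB88320
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF               # final inversion

message = b"hello, network"
checksum = crc32_bitwise(message)
assert checksum == zlib.crc32(message)    # agrees with the library implementation

corrupted = bytes([message[0] ^ 0x01]) + message[1:]  # one bit flipped in transit
print(crc32_bitwise(corrupted) != checksum)           # True: the error is detected
```

The receiver recomputes the CRC over what it received and compares it with the transmitted value; any single-bit error, and most multi-bit errors, change the result.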

Forward Error Correction

Some systems use forward error correction (FEC), a subject that we will talk about in more depth later. In such a system, the transmitter builds a packet and adds error correction codes to it. Under normal conditions, with very few bit errors, this gives the receiver enough information not only to determine that there was an error, but also to pinpoint exactly which bits are in error and fix them.
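One of the simplest codes with this "pinpoint and fix" property is the Hamming(7,4) code, which adds 3 parity bits to every 4 data bits and can correct any single flipped bit. A minimal Python sketch; it illustrates the principle and is not necessarily the code any particular system uses:

```python
from functools import reduce
from operator import xor

def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] as 7 bits (positions 1..7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # parity over positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # parity over positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # parity over positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def hamming74_decode(code):
    """Correct up to one flipped bit; return (data bits, error position or 0)."""
    code = list(code)
    # XOR of the 1-based positions of all 1-bits: 0 for a valid codeword,
    # otherwise exactly the position of a single flipped bit.
    syndrome = reduce(xor, (pos for pos, bit in enumerate(code, start=1) if bit), 0)
    if syndrome:
        code[syndrome - 1] ^= 1   # fix the bit the syndrome points at
    return [code[2], code[4], code[5], code[6]], syndrome

sent = hamming74_encode([1, 0, 1, 1])   # [0, 1, 1, 0, 0, 1, 1]
received = list(sent)
received[4] ^= 1                        # noise flips the bit at position 5
print(hamming74_decode(received))       # ([1, 0, 1, 1], 5)
```

If two or more bits in the same 7-bit block are flipped, this code miscorrects, which is why stronger FEC codes are used on noisy links.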

ARQ: ACK and NAK

Beyond checking messages for errors, an intelligent transmitter and an intelligent receiver can communicate directly with each other to ensure reliable transmission. This is called acknowledgement, and the process is called hand-shaking.

In an automatic repeat request (ARQ) scheme, the transmitter sends out data packets, and the receiver sends back an acknowledgement. A positive acknowledgement (called "ACK") means that the packet was received without any detectable errors. A negative acknowledgement (called "NAK") means that the packet was received in error. Generally, when a NAK is received by the transmitter, the transmitter will send the packet again.

If the transmitter does not receive an ACK within a reasonable amount of time, it sends the packet again.
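The simplest ARQ variant, stop-and-wait, sends one packet and waits for its acknowledgement before sending the next. A minimal Python sketch, assuming a hypothetical `channel` function that models one transmission and returns the receiver's reply ("ACK", "NAK", or None when nothing arrives before the timeout); the sequence numbering, timers and duplicate detection that real protocols need are left out:

```python
from typing import Callable, Optional, Sequence

# Hypothetical model of one transmission: returns "ACK", "NAK", or None (timeout).
Channel = Callable[[int, bytes], Optional[str]]

def stop_and_wait(packets: Sequence[bytes], channel: Channel, max_retries: int = 5) -> int:
    """Send packets one at a time; return the total number of transmissions."""
    transmissions = 0
    for seq, payload in enumerate(packets):
        for _ in range(max_retries + 1):
            transmissions += 1
            reply = channel(seq, payload)
            if reply == "ACK":
                break   # received without detectable errors: move to the next packet
            # "NAK" (received in error) or None (timed out): send the packet again
        else:
            raise TimeoutError(f"packet {seq} unacknowledged after {max_retries} retries")
    return transmissions

# A scripted channel: the first packet gets a NAK, the reply to the second is lost.
replies = iter(["NAK", "ACK", None, "ACK", "ACK"])
print(stop_and_wait([b"a", b"b", b"c"], lambda seq, payload: next(replies)))  # 5
```

Waiting for each acknowledgement leaves the link idle for a full round trip per packet, which is why practical protocols usually allow several unacknowledged packets in flight.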

Streaming Packets

In some streaming protocols, such as RTP, the transmitter sends time-sensitive data and therefore cannot afford to wait for acknowledgement packets. In these types of systems, the receiver attempts to detect errors in the received packets, and if an error is found that cannot be immediately corrected with FEC, the bad packet is simply discarded.

Further reading

- Data Coding Theory/Forward Error Correction

- Serial Programming/Error Correction Methods

- Wikipedia: forward error correction

OSI Reference Model


This page discusses the OSI Reference Model.

OSI Model

| Layer | What It Does |
|---|---|
| Application Layer | The Application Layer is what the user of the computer sees and interacts with. This layer is the "application" that the programmer develops. |
| Presentation Layer | The Presentation Layer is involved in formatting data and translating between the representations used by different systems, such as different character encodings. |
| Session Layer | The Session Layer maintains different connections, in case a single application wants to connect to multiple remote sites (or form multiple connections with a single remote site). |
| Transport Layer | The Transport Layer handles end-to-end data transmission and distinguishes between connection-oriented transmissions (TCP) and connectionless transmissions (UDP). |
| Network Layer | The Network Layer allows different machines to address each other logically and routes data between them across networks (IP). It does not itself guarantee reliable delivery. |
| Data-Link Layer | The Data-Link Layer determines how data is sent through the physical channel. Examples of data-link protocols are Ethernet and PPP. |
| Physical Layer | The Physical Layer consists of the physical wires or antennas that make up the hardware of the transmission system. Physical-layer entities include WiFi transmissions and 100BASE-T cables. |

What It Does

The OSI model allows for different developers to make products and software to interface with other products, without having to worry about how the layers below are implemented. Each layer has a specified interface with layers above and below it, so everybody can work on different areas without worrying about compatibility.

Packets

When sending, higher-level layers handle the data first, so protocols touch a packet in descending order of layer. Suppose a host uses TCP in the Transport Layer, IP in the Network Layer, and Ethernet in the Data Link Layer. The packet is built as follows:

1. The application creates the data:

- |Data|

2. TCP adds its header, creating a TCP segment:

- |TCP Header|Data|

3. IP adds its header, creating an IP packet:

- |IP Header|TCP Header|Data|

4. Ethernet adds its header and a CRC trailer, creating an Ethernet frame:

- |Ethernet Header|IP Header|TCP Header|Data|CRC|

On the receiving end, the layers process the frame in the reverse order:

1. The Ethernet layer checks the CRC, then removes the Ethernet header and trailer:

- |IP Header|TCP Header|Data|

2. The IP layer reads the IP header, verifies the header checksum, and removes the header:

- |TCP Header|Data|

3. The TCP layer reads and removes the TCP header:

- |Data|

4. The application reads the data.

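Normally each layer carries exactly one unit from the layer above: one TCP segment per IP packet and one IP packet per Ethernet frame. The relationship can also go the other way: an IP packet that is larger than the link can carry may be fragmented into several smaller IP packets, each sent in its own frame.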
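The encapsulation and decapsulation steps above can be sketched in Python. This is a minimal illustration under loud assumptions: the headers are hypothetical placeholder byte strings (`b"TCP|"`, `b"IP|"`, `b"ETH|"`), not the real TCP, IP, or Ethernet header formats, and `zlib.crc32` merely stands in for the Ethernet CRC trailer. The helper names `send`, `receive`, and `strip` are illustrative only.

```python
import struct
import zlib

# Hypothetical placeholder headers: NOT the real TCP/IP/Ethernet formats.
TCP_HDR = b"TCP|"
IP_HDR = b"IP|"
ETH_HDR = b"ETH|"


def strip(unit: bytes, header: bytes) -> bytes:
    """Remove one layer's header, checking that it is the expected one."""
    if not unit.startswith(header):
        raise ValueError(f"expected header {header!r}")
    return unit[len(header):]


def send(data: bytes) -> bytes:
    """Encapsulate application data top-down: TCP, then IP, then Ethernet."""
    segment = TCP_HDR + data            # TCP adds its header
    datagram = IP_HDR + segment         # IP adds its header
    body = ETH_HDR + datagram           # Ethernet adds its header...
    crc = zlib.crc32(body)              # ...and a CRC computed over the frame
    return body + struct.pack("!I", crc)


def receive(frame: bytes) -> bytes:
    """Decapsulate bottom-up, dropping the frame if the CRC does not match."""
    body, trailer = frame[:-4], frame[-4:]
    if zlib.crc32(body) != struct.unpack("!I", trailer)[0]:
        raise ValueError("CRC mismatch: frame dropped")
    datagram = strip(body, ETH_HDR)     # Ethernet removes header and trailer
    segment = strip(datagram, IP_HDR)   # IP removes its header
    return strip(segment, TCP_HDR)      # TCP removes its header


frame = send(b"hello")
print(frame[:-4])        # b'ETH|IP|TCP|hello'
print(receive(frame))    # b'hello'
```

Flipping any single bit of `frame` before calling `receive` makes the CRC comparison fail, which mirrors a receiver discarding a corrupted frame.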

Network layer

Introduction

The Network Layer is responsible for transmitting messages hop by hop. The major internet layer protocols reside in this layer. The Internet Protocol (IP) is the most important of them, but we will also discuss other protocols, such as the Address Resolution Protocol (ARP), the Dynamic Host Configuration Protocol (DHCP), Network Address Translation (NAT), and the Internet Control Message Protocol (ICMP). The Network Layer does not guarantee reliable communication or delivery of data.

Network Layer Functionality

The Network Layer is responsible for transmitting datagrams hop by hop: each datagram is passed from station to station until it reaches its destination. Each computer needs an IP address assigned to its network interface to identify it on the network. When a message arrives from the Transport Layer, IP determines the message's addresses, encapsulates it by adding a header to form a datagram, and passes the datagram to the Data Link Layer. On the receiving side, IP decapsulates the datagram by removing the network layer header, and then passes the contents to the Transport Layer. The network model is illustrated below:

Figure 1 Network Layer in OSI Model

As a datagram travels from the source to the destination, IP handles it in these simple steps:

- The upper layer passes a packet to the Network Layer.

- IP constructs the IP header and prepends it to the data, forming a datagram.

- A checksum is calculated over the header.

- The datagram is routed through gateways.

- The IP layer of each gateway verifies the checksum; if the checksum does not match, the datagram is discarded. Each gateway also decrements the TTL; if the TTL reaches 0, the datagram is discarded and an ICMP Time Exceeded message is sent back to the sending machine. The route toward the destination address is determined independently at every stop as the datagram passes through the internetwork.

- The datagram arrives at the Network Layer of the destination.

- The destination verifies the checksum.

- The IP header is removed.

- The message is passed to the upper-layer application.
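The per-gateway checks in the list above (checksum and TTL) can be sketched as follows. This is a minimal illustration assuming an IPv4 header with no options and no payload; `build_header`, `with_checksum`, and `forward` are hypothetical helpers, and the checksum is the standard Internet checksum (ones' complement sum of 16-bit words, as described in RFC 1071). Real routers also make a forwarding decision and generate ICMP messages, which this sketch omits.

```python
import struct
from typing import Optional


def internet_checksum(data: bytes) -> int:
    """Ones' complement of the ones' complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"                           # pad odd-length input
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # wrap the carry around
    return ~total & 0xFFFF


def with_checksum(header: bytes) -> bytes:
    """Zero the checksum field (bytes 10-11), compute it, and write it back."""
    zeroed = header[:10] + b"\x00\x00" + header[12:]
    return zeroed[:10] + struct.pack("!H", internet_checksum(zeroed)) + zeroed[12:]


def build_header(ttl: int, src: bytes, dst: bytes) -> bytes:
    """Hypothetical minimal 20-byte IPv4 header (no options, no payload)."""
    header = struct.pack("!BBHHHBBH4s4s",
                         0x45,          # version 4, header length 5 words
                         0, 20, 0, 0,   # TOS, total length, ID, flags/fragment
                         ttl, 6,        # TTL, protocol 6 = TCP
                         0,             # checksum placeholder
                         src, dst)
    return with_checksum(header)


def forward(header: bytes) -> Optional[bytes]:
    """One gateway hop: verify checksum, decrement TTL, recompute checksum.
    Returns None when the datagram is discarded."""
    if internet_checksum(header) != 0:
        return None                     # corrupted header: discard
    ttl = header[8] - 1
    if ttl <= 0:
        return None                     # TTL expired (a real router also sends ICMP)
    return with_checksum(header[:8] + bytes([ttl]) + header[9:])
```

Because every hop changes the TTL, each gateway must recompute the header checksum before passing the datagram on, which is why `forward` calls `with_checksum` again.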

Figure 2 IP Characteristic in Network Layer

Besides IP, the Network Layer contains other protocols, such as the Address Resolution Protocol (ARP) and the Internet Control Message Protocol (ICMP), but IP plays by far the largest part.

Figure 3 Internet Protocol in Network Layer

In addition, IP is a connectionless protocol: each packet is treated individually and passes through the Internet independently. IP does not track the order of packets in transit, so packets may arrive out of order, be duplicated, or be lost; IP gives no delivery guarantee, and its transmission is therefore unreliable.

Common Alterations

Other Reference Models

TCP/IP model

Error Control, Flow Control, MAC

Introduction

The Data Link Layer is layer 2 of the OSI model. It is responsible for communication between adjacent network nodes, handles data moving in and out across the physical layer, and provides a well-defined service to the network layer. The data link layer is divided into two sublayers: Media Access Control (MAC) and Logical Link Control (LLC).

The Data Link Layer ensures that an initial connection has been set up, divides outgoing data into frames, and handles the acknowledgements from a receiver that the data arrived successfully. It also checks that incoming data has been received correctly by analyzing bit patterns at special places in the frames.

The following sections discuss two functions of the data link layer, error control and flow control. After that, the MAC layer is explained, including multiple access protocols.

Error Control

A network is responsible for transmitting data from one device to another. The end-to-end transfer of data from a transmitting application to a receiving application involves many steps, each subject to error. Error control lets us be confident that the transmitted and received data are identical. Because data can be corrupted during transmission, errors must be detected and corrected for communication to be reliable.

Error control is the process of detecting and correcting both the bit level and packet level errors.

Types of Errors

Single Bit Error

The term single bit error means that only one bit of the data unit was changed, either from 1 to 0 or from 0 to 1.

Burst Error

The term burst error means that two or more bits in the data unit were changed. Burst errors should not be confused with packet-level errors, such as packet loss, duplication, and reordering, which affect whole packets rather than individual bits.

Error Detection

Error detection is the process of detecting the error during the transmission between the sender and the receiver.

Types of error detection

- Parity checking

- Cyclic Redundancy Check (CRC)

- Checksum

Redundancy

Redundancy allows a receiver to check whether received data was corrupted during transmission, so that it can request a retransmission. Redundancy is the concept of adding extra bits for use in error detection. As shown in the figure, the sender adds redundant bits (R) to the data unit and sends it to the receiver. The receiver passes the received bit stream through a checking function. If no error is found, the data portion of the data unit is accepted and the redundant bits are discarded; otherwise, the receiver asks for a retransmission.

Parity checking

Parity adds a single bit that indicates whether the number of 1 bits in the preceding data is even or odd. If a single bit is changed in transmission, the parity of the message changes and the error can be detected. Parity checking is not very robust, however: if an even number of bits is changed, the check bit still appears valid and the error goes undetected.

- Single-bit parity

- Two-dimensional parity

Moreover, single-bit parity does not indicate which bit contained the error, even when it detects it. The data must be discarded entirely and retransmitted from scratch. On a noisy transmission medium a successful transmission could take a long time, or might never occur. Parity does have the advantage, however, that it is about the best possible code that uses only a single bit of space.
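A minimal sketch of even parity, assuming bits are held as Python lists of 0/1 integers (a representation chosen for readability). It shows the weakness described above, where two flipped bits cancel out, and how two-dimensional parity can point to a single flipped bit by intersecting the failing row and column. The function names are hypothetical.

```python
from typing import List, Optional, Tuple


def even_parity_bit(bits: List[int]) -> int:
    """Return the bit that makes the total number of 1s even."""
    return sum(bits) % 2


def parity_ok(codeword: List[int]) -> bool:
    """Even-parity check: the codeword must contain an even number of 1s."""
    return sum(codeword) % 2 == 0


def two_d_parity(rows: List[List[int]]) -> List[List[int]]:
    """Add a parity bit to each row, then a final row of column parities."""
    block = [row + [even_parity_bit(row)] for row in rows]
    block.append([even_parity_bit(list(col)) for col in zip(*block)])
    return block


def locate_single_error(block: List[List[int]]) -> Optional[Tuple[int, int]]:
    """With 2-D parity, one failing row and one failing column pin down the bit."""
    bad_rows = [i for i, row in enumerate(block) if sum(row) % 2]
    bad_cols = [j for j, col in enumerate(zip(*block)) if sum(col) % 2]
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        return bad_rows[0], bad_cols[0]
    return None
```

For example, `even_parity_bit([1, 0, 1, 1, 0, 0, 1])` returns 0, since the data already contains four 1s; flipping any two bits of the resulting codeword still passes `parity_ok`.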

Cyclic Redundancy Check

CRC is a very efficient redundancy checking technique. It is based on binary (modulo-2) division of the data unit; the remainder of the division (the CRC) is appended to the data unit and sent to the receiver. The receiver divides the received data unit by the same divisor. If the remainder is zero, the data unit is accepted and passed up the protocol stack; otherwise it is considered corrupted in transit, and the packet is dropped.

The steps in CRC are as follows.

The sender follows these steps:

- A string of 0s, one fewer than the number of bits in the divisor, is appended to the data unit.

- The result is divided by the predefined divisor using binary (modulo-2) division. The remainder is called the CRC. The CRC replaces the appended 0s, and the data unit is sent to the receiver with the CRC attached.

The receiver follows these steps:

- When the data unit arrives followed by the CRC, it is divided by the same divisor that was used to find the CRC.

- If the remainder of this division is zero, the data is error-free; otherwise it is corrupted.

The diagram shows how the CRC process works.

(a) Sender CRC generator; (b) receiver CRC checker
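The sender and receiver steps can be sketched in Python, with bits held as strings for readability. The data `100100` and divisor `1101` are illustrative values, not a standardized CRC polynomial; real CRCs such as the 32-bit one used by Ethernet follow the same modulo-2 division but with longer divisors and faster table-driven or hardware implementations. The function names are hypothetical.

```python
def mod2_remainder(dividend: str, divisor: str) -> str:
    """Binary (modulo-2) long division on bit strings; returns the remainder."""
    bits = list(dividend)
    n = len(divisor)
    for i in range(len(bits) - n + 1):
        if bits[i] == "1":             # XOR-subtract only when the leading bit is 1
            for j in range(n):
                bits[i + j] = "0" if bits[i + j] == divisor[j] else "1"
    return "".join(bits[len(bits) - (n - 1):])   # the last n-1 bits


def crc_generate(data: str, divisor: str) -> str:
    """Sender: append n-1 zeros, divide, and replace the zeros with the remainder."""
    padded = data + "0" * (len(divisor) - 1)
    return data + mod2_remainder(padded, divisor)


def crc_check(received: str, divisor: str) -> bool:
    """Receiver: accept only if dividing by the same divisor leaves remainder 0."""
    return "1" not in mod2_remainder(received, divisor)


codeword = crc_generate("100100", "1101")
print(codeword)                          # 100100001
print(crc_check(codeword, "1101"))       # True
print(crc_check("101100001", "1101"))    # False
```

The last call flips the third bit of the codeword; the nonzero remainder makes the receiver reject it.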

Checksum

Checksum is the third error detection method. Checksum is typically used in the upper layers, while parity checking and CRC are used in the lower layers. Like the other methods, checksum is based on the concept of redundancy.

The checksum mechanism involves two operations:

Checksum generator

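The sender uses the checksum generator. First, the data unit is divided into equal segments of n bits. Next, all segments are added together using 1’s complement arithmetic, in which any carry out of the leftmost bit is added back into the rightmost bit. The sum is then complemented, and the result is the checksum, which is sent along with the data unit.

Example:

Suppose the 16 bits 10001010 00100011 are to be sent to the receiver as two 8-bit segments. Adding them gives 10001010 + 00100011 = 10101101 (no carry), and complementing the sum gives the checksum 01010010.

The checksum is appended to the data unit and sent to the receiver.

The final data unit is 10001010 00100011 01010010.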

Checksum checker

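The receiver receives the data unit and divides it into segments of equal size. All segments, including the checksum, are added using 1’s complement arithmetic, and the result is complemented. If the result is zero, the data is accepted; otherwise it is rejected.

Example:

Continuing the example above, the receiver adds 10001010 + 00100011 + 01010010 = 11111111. Complementing gives 00000000, so the data is accepted. If a single bit had been corrupted in transit, the complemented result would be nonzero and the data would be rejected.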
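The checksum generator and checker can be sketched in Python using the 8-bit segments from the example. Segments are represented as integers, and the function names are hypothetical; the segment width `n_bits` is a parameter so the same code works for other values of n.

```python
from typing import List


def ones_complement_sum(segments: List[int], n_bits: int) -> int:
    """Add n-bit segments, wrapping any carry out of the top bit back in."""
    mask = (1 << n_bits) - 1
    total = 0
    for seg in segments:
        total += seg
        total = (total & mask) + (total >> n_bits)
    return total


def checksum(segments: List[int], n_bits: int = 8) -> int:
    """Sender: the complement of the ones' complement sum."""
    return ~ones_complement_sum(segments, n_bits) & ((1 << n_bits) - 1)


def accept(segments_with_checksum: List[int], n_bits: int = 8) -> bool:
    """Receiver: sum everything including the checksum, complement; 0 means accept."""
    return checksum(segments_with_checksum, n_bits) == 0


data = [0b10001010, 0b00100011]
c = checksum(data)
print(format(c, "08b"))                        # 01010010
print(accept(data + [c]))                      # True
print(accept([0b10001011, 0b00100011, c]))     # False
```

The receiver reuses the same routine as the sender: complementing the sum of all segments plus the checksum yields zero exactly when that sum is all 1s.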

Error Correction

This type of error control allows a receiver to reconstruct the original information when it has been corrupted during transmission.

Hamming Code

Hamming code is a single-bit error-correction method that uses redundant bits.

In this method, redundant bits are included with the original data. The bits are arranged so that an error in each different position produces a different pattern of failed checks, so the corrupted bit can be identified. Once the bit is identified, the receiver can reverse its value and correct the error. Hamming code can be applied to data units of any length and uses the relationships between the data bits and the redundancy bits.

Algorithm:

- Parity bits are placed at the positions that are powers of two (2^r: 1, 2, 4, 8, ...).

- The remaining positions are filled with the original data.

- Each parity bit covers a specific set of positions in the code and is chosen to give that set even parity.

- The final code is sent to the receiver.

In the above example, we calculate the even parity for the various bit combinations; the value for each combination is the value of the corresponding r (redundancy) bit. r1 covers bits 1, 3, 5, 7, 9, and 11, and is set so that these bits together have even parity. The remaining parity bits are found the same way: r2 covers bits 2, 3, 6, 7, 10, and 11; r4 covers bits 4, 5, 6, and 7; and r8 covers bits 8, 9, 10, and 11.

Suppose an error occurs at bit 7, which is changed from 1 to 0. The receiver recalculates parity over the same sets of bits used by the sender. The checks for r1, r2, and r4 fail, because each of them covers bit 7, while the check for r8 passes. Reading the results as a binary number (r8 r4 r2 r1 = 0111) gives 7, the exact location of the error. Once the bit is identified, the receiver can reverse its value and correct the error.
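The encoding and correction steps can be sketched in Python for even-parity Hamming code. With 7 data bits, 4 parity bits are needed, giving the 11-bit code described above. One assumption to note: this sketch fills the data positions (3, 5, 6, 7, 9, 10, 11) in increasing position order, while some textbooks number them from the other end. The function names are hypothetical.

```python
from typing import List


def hamming_encode(data: List[int]) -> List[int]:
    """Even-parity Hamming code; returns the bits for positions 1..n."""
    m = len(data)
    r = 0
    while 2 ** r < m + r + 1:       # enough parity bits to name every position
        r += 1
    n = m + r
    code = [0] * (n + 1)            # index 0 unused so indices match positions
    bits = iter(data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):         # not a power of two: a data position
            code[pos] = next(bits)
    for i in range(r):
        p = 1 << i
        # parity bit p covers every position whose binary index has bit p set
        code[p] = sum(code[k] for k in range(1, n + 1) if k & p and k != p) % 2
    return code[1:]


def hamming_syndrome(code: List[int]) -> int:
    """Recheck each parity group; the failing groups add up to the error position."""
    n = len(code)
    syndrome = 0
    p = 1
    while p <= n:
        if sum(code[k - 1] for k in range(1, n + 1) if k & p) % 2:
            syndrome += p
        p <<= 1
    return syndrome


def hamming_correct(code: List[int]) -> List[int]:
    """Flip the bit the syndrome points at (a syndrome of 0 means no error)."""
    fixed = list(code)
    s = hamming_syndrome(code)
    if 0 < s <= len(fixed):
        fixed[s - 1] ^= 1
    return fixed


code = hamming_encode([1, 0, 0, 1, 1, 0, 1])
print(code)                          # [0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1]
corrupted = list(code)
corrupted[6] = 0                     # bit 7 changed from 1 to 0
print(hamming_syndrome(corrupted))   # 7
```

`hamming_correct(corrupted)` flips bit 7 back and returns the original code.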

Flow Control

Flow control is an important design issue for the Data Link Layer; it controls the flow of data between sender and receiver.

In communication, a medium connects the sender and the receiver. A problem arises when the sender sends data at a higher rate than the receiver can handle: the receiver is too slow to keep up with that data rate, so data can be lost.

To solve this problem, flow control is introduced in the Data Link Layer; it is also used at several higher layers. The main aim of flow control is to match the sender's rate to what the receiver can handle, so that communication through the network remains efficient.

Approaches of Flow Control

- Feedback-based Flow Control

- Rate-based Flow Control

Feedback-based flow control is used in the Data Link Layer, and rate-based flow control is used in the Network Layer.

Feedback-based Flow Control

In feedback-based flow control, the sender does not send the next data until it receives feedback from the receiver.

Types of Feedback-based Flow Control

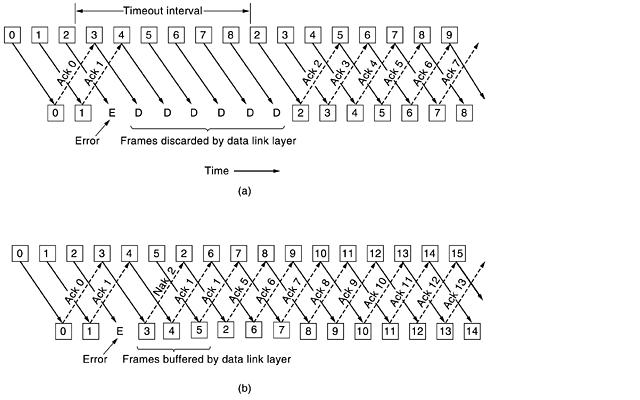

A. Stop-and-Wait Protocol

B. Sliding Window Protocol

- A One-Bit Sliding Window Protocol

- A Protocol Using Go Back N

- A Protocol Using Selective Repeat

A. A Simplex Stop-and-Wait Protocol

This protocol makes the following assumptions:

- It provides a unidirectional flow of data from sender to receiver.

- The communication channel is assumed to be error-free.

In this protocol, the sender simply sends data and then waits for an acknowledgment from the receiver. That is why it is called the Stop-and-Wait Protocol.

This approach is not very efficient, but it is the simplest way to provide flow control.

This scheme assumes an error-free channel. If the channel introduces errors, the receiver may not get the correct data and so sends no acknowledgment; the sender, still waiting for that acknowledgment, can never send the next data, and communication stalls. Two concepts were introduced to solve this problem:

- TIMER: when the sender starts to send data, it starts a timer. If it does not receive an acknowledgment before the timer expires, it sends the buffered data to the receiver again.

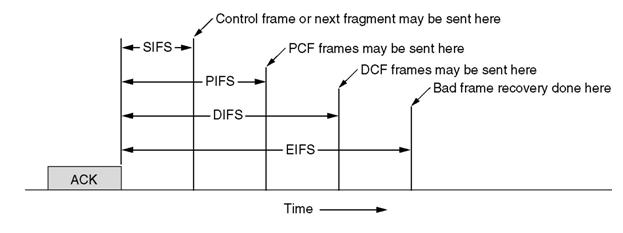

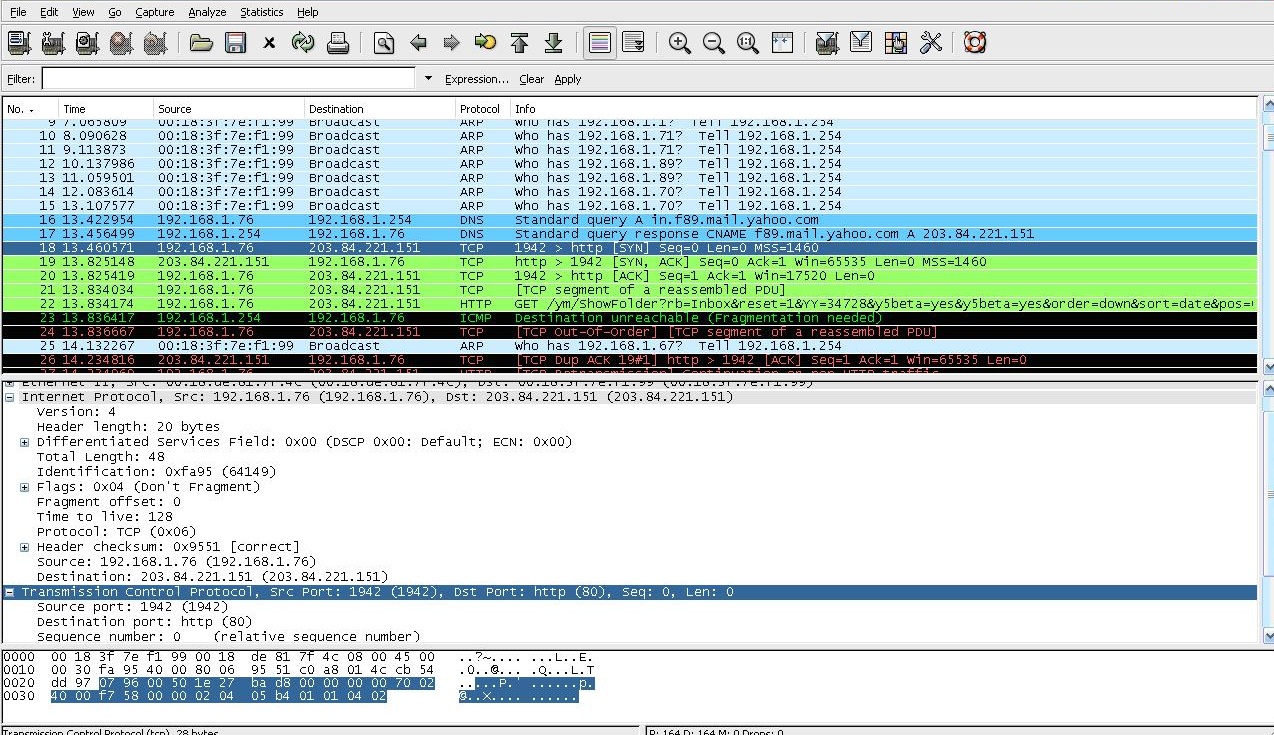

- SEQUENCE NUMBER: the sender sends each data frame with a specific sequence number. After receiving the data, the receiver sends an acknowledgment carrying that sequence number, and the sender waits for an acknowledgment with the same sequence number. This also lets the receiver recognize and discard a duplicate frame that was retransmitted because an acknowledgment was lost.
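Stop-and-wait with a timer and sequence numbers can be sketched as a small simulation. Several assumptions are made here: the channel is modeled by two hypothetical sets, `lost_data` and `lost_acks`, listing which transmission attempts (numbered 0, 1, 2, ...) lose their data frame or acknowledgment; a timer expiry is modeled as an immediate resend; and sequence numbers alternate between 0 and 1, which is one common choice rather than something the text above specifies.

```python
from typing import List, Set, Tuple


def stop_and_wait(frames: List[str], lost_data: Set[int],
                  lost_acks: Set[int]) -> Tuple[List[str], int]:
    """Simulate stop-and-wait with a retransmission timer and 1-bit sequence numbers.

    Returns the payloads the receiver delivered and the total number of
    transmissions the sender made.
    """
    delivered: List[str] = []
    expected = 0                    # receiver: next sequence number it wants
    sent = 0                        # number of transmissions so far
    for i, payload in enumerate(frames):
        seq = i % 2                 # sender alternates 0, 1, 0, 1, ...
        while True:
            attempt = sent
            sent += 1               # send (seq, payload) and start the timer
            if attempt in lost_data:
                continue            # frame lost: timer expires, resend
            if seq == expected:     # new frame: deliver it, expect the other number
                delivered.append(payload)
                expected ^= 1
            # a duplicate (seq != expected) is discarded but acknowledged again
            if attempt in lost_acks:
                continue            # ACK lost: timer expires, resend
            break                   # ACK with matching seq arrived: send next frame
    return delivered, sent


print(stop_and_wait(["A", "B", "C"], lost_data={1}, lost_acks={2}))
# (['A', 'B', 'C'], 5)
```

In that run the first copy of B is lost and the acknowledgment for the second copy is lost, so B is sent a third time; the receiver sees the repeated sequence number 1 and discards the duplicate instead of delivering B twice.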