Ruslan Belkin and Sean Dawson on LinkedIn's Network Updates Uncovered

- 1. LinkedIn: Network Updates Uncovered Ruslan Belkin Sean Dawson

- 2. Agenda • Quick Tour • Requirements (User Experience / Infrastructure) • Service API • Internal Architecture • Applications (e.g., Twitter Integration, Email Delivery) • Measuring Performance • Shameless self promotion

- 4. The Stack • Environment: 90% Java, 5% Groovy, 2% Scala, 2% Ruby, 1% C++ • Containers: Tomcat, Jetty • Data Layer: Oracle, MySQL, Voldemort, Lucene, Memcache • Offline Processing: Hadoop • Queuing: ActiveMQ • Frameworks: Spring

- 5. The Numbers • Updates Created: 35M / week • Update Emails: 14M / week • Service Calls: 20M / day (230 / second)
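
As a quick sanity check on the service-call figures, the daily volume can be converted to an average per-second rate. This assumes the load is spread evenly over a day, which the slide does not state; real peak rates would be higher.

$$
\frac{20{,}000{,}000 \ \text{calls/day}}{86{,}400 \ \text{seconds/day}} \approx 231 \ \text{calls/second}
$$

This is consistent with the 230 / second quoted on the slide.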

- 7. Stream View

- 9. Profile

- 10. Groups

- 11. Mobile

- 12. Email (NUS email digest screenshot)

- 13. HP without NUS

- 14. Expectations – User Experience • Multiple presentation views • Comments on updates • Aggregation of noisy updates • Partner Integration • Easy to add new updates to the system • Handles I18N and other dynamic contexts • Long data retention

- 15. Expectations - Infrastructure • Large number of connections, followers and groups • High request volume + Low Latency • Random distribution lists • Black/White lists, A/B testing, etc. • Tenured storage of update history • Tracking of click through rates, impressions • Supports real-time, aggregated data/statistics • Cost-effective to operate

- 16. Historical Note (homepage circa 2007) • Legacy “network update” feature was a mixed bag of detached services • Neither consistent nor scalable • Tightly coupled to our Inbox • Migration plan - Introduce API, unify all disparate service calls - Add event-driven activity tracking with DB backend - Build out the product - Optimize!

- 17. Network Updates Service – Overview

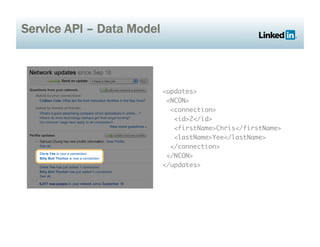

- 18. Service API – Data Model

      <updates>
        <NCON>
          <connection>
            <id>2</id>
            <firstName>Chris</firstName>
            <lastName>Yee</lastName>
          </connection>
        </NCON>
      </updates>

- 19. Service API – Post

      NetworkUpdatesNotificationService service = getNetworkUpdatesNotificationService();
      ProfileUpdateInfo profileUpdate = createProfileUpdate();
      // Deliver the update to the feed of member 1213
      Set<NetworkUpdateDestination> destinations = Sets.newHashSet(
          NetworkUpdateDestinations.newMemberFeedDestination(1213)
      );
      // The update originates from member 1214
      NetworkUpdateSource source = new NetworkUpdateMemberSource(1214);
      Date updateDate = getClock().currentDate();
      service.submitNetworkUpdate(source, destinations, updateDate, profileUpdate);

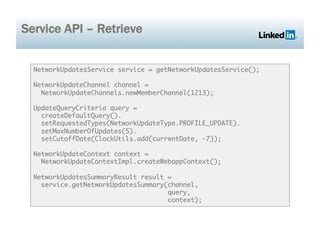

- 20. Service API – Retrieve

      NetworkUpdatesService service = getNetworkUpdatesService();
      // Read from the channel of member 1213
      NetworkUpdateChannel channel = NetworkUpdateChannels.newMemberChannel(1213);
      // At most 5 profile updates newer than the cutoff
      // (the slide passes -7 relative to currentDate; the unit is not shown)
      UpdateQueryCriteria query = createDefaultQuery()
          .setRequestedTypes(NetworkUpdateType.PROFILE_UPDATE)
          .setMaxNumberOfUpdates(5)
          .setCutoffDate(ClockUtils.add(currentDate, -7));
      NetworkUpdateContext context = NetworkUpdateContextImpl.createWebappContext();
      NetworkUpdatesSummaryResult result =
          service.getNetworkUpdatesSummary(channel, query, context);

- 21. System at a glance

- 22. Data Collection – Challenges • How do we efficiently support collection in a dense social network? • Requirement to retrieve the feed fast • But there are a lot of events from a lot of members and sources • And there are multiplier effects

- 23. Option 1: Push Architecture (Inbox) • Each member has an inbox of notifications received from their connections/followees • N writes per update (where N may be very large) • Very fast to read • Difficult to scale, but useful for private or targeted notifications to individual users

- 24. Option 1: Push Architecture (Inbox)

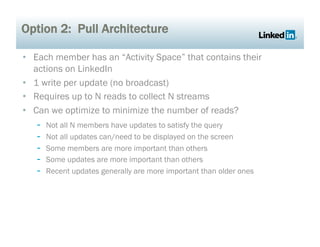
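
The push option can be sketched as fan-out on write: publishing copies the update into every follower's inbox, so reading is a single lookup. This is a minimal in-memory illustration, not LinkedIn's implementation; `PushFeed` and all of its methods are hypothetical names, and a real system would use persistent storage rather than maps.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration of a push (inbox) feed; not LinkedIn's implementation.
class PushFeed {
    // memberId -> ids of the members who receive that member's updates
    private final Map<Integer, Set<Integer>> followers = new HashMap<>();
    // memberId -> inbox, newest update first
    private final Map<Integer, Deque<String>> inboxes = new HashMap<>();
    private int writes = 0;

    void setFollowers(int memberId, Set<Integer> followerIds) {
        followers.put(memberId, followerIds);
    }

    // One update costs N inbox writes, where N is the number of followers.
    void publish(int authorId, String update) {
        for (int followerId : followers.getOrDefault(authorId, Set.of())) {
            inboxes.computeIfAbsent(followerId, id -> new ArrayDeque<>()).addFirst(update);
            writes++;
        }
    }

    // Reading is a single lookup of the precomputed inbox.
    List<String> read(int memberId, int max) {
        List<String> result = new ArrayList<>();
        for (String update : inboxes.getOrDefault(memberId, new ArrayDeque<>())) {
            if (result.size() == max) break;
            result.add(update);
        }
        return result;
    }

    int writeCount() { return writes; }
}
```

With three followers, a single `publish` call performs three writes, which is the "N writes per update" cost named on the slide.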

- 25. Option 2: Pull Architecture • Each member has an “Activity Space” that contains their actions on LinkedIn • 1 write per update (no broadcast) • Requires up to N reads to collect N streams • Can we optimize to minimize the number of reads? - Not all N members have updates to satisfy the query - Not all updates can/need to be displayed on the screen - Some members are more important than others - Some updates are more important than others - Recent updates generally are more important than older ones

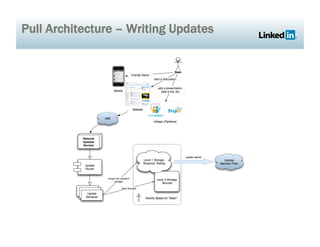
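
The pull option can be sketched as fan-in on read: each update is written once to its author's activity space, and a reader collects and merges the streams of its connections. This is a minimal in-memory sketch under stated assumptions; `PullFeed`, `Update`, and the cutoff and limit parameters are hypothetical names chosen for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of a pull feed; not LinkedIn's implementation.
class PullFeed {
    static final class Update {
        final int authorId;
        final long timestamp;
        final String text;

        Update(int authorId, long timestamp, String text) {
            this.authorId = authorId;
            this.timestamp = timestamp;
            this.text = text;
        }
    }

    // memberId -> that member's own activity space
    private final Map<Integer, List<Update>> activitySpaces = new HashMap<>();
    private int reads = 0;

    // One write per update, regardless of audience size.
    void publish(int authorId, long timestamp, String text) {
        activitySpaces.computeIfAbsent(authorId, id -> new ArrayList<>())
                      .add(new Update(authorId, timestamp, text));
    }

    // Up to N reads (one per connection), then merge newest-first and truncate.
    List<Update> read(List<Integer> connectionIds, long cutoff, int max) {
        List<Update> merged = new ArrayList<>();
        for (int id : connectionIds) {
            reads++;
            for (Update u : activitySpaces.getOrDefault(id, List.of())) {
                if (u.timestamp >= cutoff) merged.add(u);
            }
        }
        merged.sort(Comparator.comparingLong((Update u) -> u.timestamp).reversed());
        return new ArrayList<>(merged.subList(0, Math.min(max, merged.size())));
    }

    int readCount() { return reads; }
}
```

The sketch reads every connection's stream, including members with nothing to show. That wasted work is what the optimization questions on the slide aim to reduce.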

- 26. Pull Architecture – Writing Updates

- 27. Pull Architecture – Reading Updates

- 28. Storage Model • L1: Temporal - Oracle - Combined CLOB / varchar storage - Optimistic locking - Each update costs 1 read and 1 write (merge) - Size bound by number of updates and retention policy • L2: Tenured - Accessed less frequently - Simple key-value storage is sufficient (each update has a unique ID) - Oracle/Voldemort

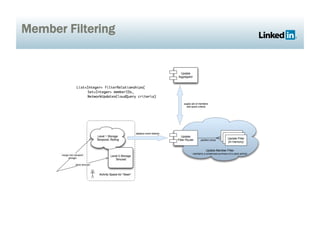
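
The "optimistic locking, 1 read and 1 write (merge)" pattern from the Storage Model slide can be sketched as a read, an in-memory merge, and a conditional write that succeeds only if nobody else changed the row in between. The sketch below is an in-memory stand-in and an assumption about the general technique, not the actual Oracle schema; `TemporalRow`, `Versioned`, and `maxUpdates` are hypothetical names. In a database, the conditional write would typically be an update guarded by a version column.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical in-memory stand-in for one member's row in temporal storage.
class TemporalRow {
    static final class Versioned {
        final long version;
        final List<String> updates;

        Versioned(long version, List<String> updates) {
            this.version = version;
            this.updates = updates;
        }
    }

    private final AtomicReference<Versioned> row =
            new AtomicReference<>(new Versioned(0, List.of()));
    private final int maxUpdates; // stands in for the size and retention bound

    TemporalRow(int maxUpdates) { this.maxUpdates = maxUpdates; }

    // 1 read, then 1 conditional write; retry only if another writer got in first.
    void append(String update) {
        while (true) {
            Versioned current = row.get();                      // the read
            List<String> merged = new ArrayList<>(current.updates);
            merged.add(0, update);                              // newest first
            if (merged.size() > maxUpdates) {
                merged = merged.subList(0, maxUpdates);         // enforce the size bound
            }
            Versioned next = new Versioned(current.version + 1, List.copyOf(merged));
            if (row.compareAndSet(current, next)) return;       // the write (merge)
        }
    }

    List<String> updates() { return row.get().updates; }

    long version() { return row.get().version; }
}
```

No lock is held between the read and the write, so uncontended appends cost exactly one read and one write. Only a conflicting writer pays for a retry.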

- 29. Member Filtering • Need to avoid fetching N feeds (too expensive) • Filter contains an in-memory summary of user activity • Needs to be concise but representative • Partitioned by member across a number of machines • Filter may return false positives, but never false negatives • Easy-to-measure heuristic: of the N members selected, how many actually had good content? • Tradeoff between size of summary and filtering power
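
One way to get a filter with false positives but no false negatives is to keep a small, lossy summary per member that is updated on every write. The slides do not describe the real summary structure, so the sketch below is an assumption: it stores only the latest update time and a bitmask of update types ever seen. `MemberFilter`, `record`, and `mightHaveUpdates` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical activity summary; the slides do not specify the real structure.
class MemberFilter {
    private static final class Summary {
        long lastUpdateTime;
        int typeMask; // one bit per update type ever seen
    }

    private final Map<Integer, Summary> summaries = new HashMap<>();

    // Called on every write, so the summary can never miss an update.
    void record(int memberId, int typeBit, long timestamp) {
        Summary s = summaries.computeIfAbsent(memberId, id -> new Summary());
        s.lastUpdateTime = Math.max(s.lastUpdateTime, timestamp);
        s.typeMask |= typeBit;
    }

    // May answer "yes" for a member with nothing relevant,
    // but never "no" for a member who has a matching update.
    boolean mightHaveUpdates(int memberId, int requestedTypes, long cutoff) {
        Summary s = summaries.get(memberId);
        return s != null
                && s.lastUpdateTime >= cutoff
                && (s.typeMask & requestedTypes) != 0;
    }

    // Narrows the connection list to the members worth fetching.
    List<Integer> select(List<Integer> connectionIds, int requestedTypes, long cutoff) {
        List<Integer> selected = new ArrayList<>();
        for (int id : connectionIds) {
            if (mightHaveUpdates(id, requestedTypes, cutoff)) selected.add(id);
        }
        return selected;
    }
}
```

A member whose only recent update is of a different type than the one requested can still pass the filter, because the summary does not remember which type was recent. Storing more detail per member would remove such false positives at the cost of memory, which is the size versus filtering power tradeoff named on the slide.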

- 30. Member Filtering

- 31. Commenting • Users can create discussions around updates • Discussion lives in our forum service • Denormalize a discussion summary onto the tenured update, resolve first/last comments on retrieval • Full discussion can be retrieved dynamically

- 32. Twitter Sync • Partnership with Twitter • Bi-directional flow of status updates • Export status updates, import tweets • Users register their Twitter account • Authorize via OAuth

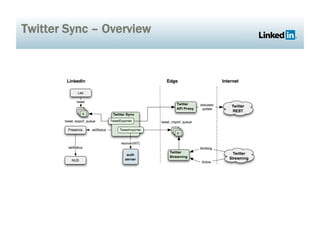

- 33. Twitter Sync – Overview

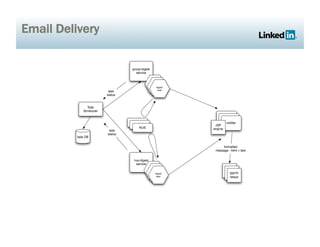

- 34. Email Delivery • Multiple concurrent email-generating tasks • Each task works on its own non-overlapping ID range, which allows parallelization • Controlled by task scheduler - Sets delivery time - Controls task execution status, suspend/resume, etc. • Caches common content so it is not re-requested • Tasks deliver content to Notifier, which packages the content into an email via JSP engine • Email is then delivered to SMTP relays
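
The non-overlapping ID ranges can be sketched as a simple partition of the member ID space, one contiguous range per task. The slide does not say how ranges are actually generated, so this is only one plausible scheme; `IdRanges`, `Range`, and `split` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits IDs [minId, maxId] into disjoint ranges, one per task.
class IdRanges {
    static final class Range {
        final long startInclusive;
        final long endInclusive;

        Range(long startInclusive, long endInclusive) {
            this.startInclusive = startInclusive;
            this.endInclusive = endInclusive;
        }
    }

    static List<Range> split(long minId, long maxId, int tasks) {
        if (tasks <= 0 || maxId < minId) {
            throw new IllegalArgumentException("need tasks > 0 and maxId >= minId");
        }
        long total = maxId - minId + 1;
        long base = total / tasks;
        long extra = total % tasks; // the first `extra` ranges get one more ID
        List<Range> ranges = new ArrayList<>();
        long start = minId;
        for (int i = 0; i < tasks && start <= maxId; i++) {
            long size = base + (i < extra ? 1 : 0);
            ranges.add(new Range(start, start + size - 1));
            start += size;
        }
        return ranges;
    }
}
```

Because the ranges are disjoint and together cover the whole ID space, each task can run independently and no member is processed by two tasks.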

- 35. Email Delivery

- 36. Email Delivery

- 37. What else? Brute force methods for scaling: • Shard databases • Memcache everything • Parallelize everything • User-initiated write operations are asynchronous when possible
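
The last point, asynchronous user-initiated writes, can be sketched with a thread pool: the request thread hands the write off and returns immediately, while the write completes in the background. This is a generic illustration using standard `java.util.concurrent` classes, not the mechanism used at LinkedIn (the stack slide lists ActiveMQ for queuing). `AsyncUpdateWriter` and its in-memory store are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical writer that accepts updates without blocking the caller.
class AsyncUpdateWriter implements AutoCloseable {
    private final ExecutorService executor = Executors.newFixedThreadPool(2);
    // Stands in for the real backing store.
    private final Queue<String> store = new ConcurrentLinkedQueue<>();

    // Returns at once; the future completes when the write has been applied.
    CompletableFuture<Void> submit(String update) {
        return CompletableFuture.runAsync(() -> store.add(update), executor);
    }

    List<String> stored() {
        return new ArrayList<>(store);
    }

    @Override
    public void close() {
        executor.shutdown();
    }
}
```

A caller that needs confirmation can wait on the returned future. A caller serving a web request can ignore it and respond to the user right away.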

- 38. Know your numbers • Bottlenecks are often not where you think they are • Profile often • Measure actual performance regularly • Monitor your systems • Pay attention to response time vs transaction rate • Expect failures

- 40. Another way of measuring performance

- 41. LinkedIn is a great place to work

- 42. Questions? Ruslan Belkin (http://www.linkedin.com/in/rbelkin) Sean Dawson (http://www.linkedin.com/in/seandawson)