NAMD


| Link | Code | Version | Machine | Date |
|---|---|---|---|---|
| NAMD homepage | Download Page | 2.6 | Linux x86_64 | February 2009 |

Background

This guide currently covers building NAMD and Charm++ from source on the x86_64 (64-bit) architecture. Similar steps apply on other machines, although some file and architecture names will differ.

Building Charm++

Automatic instrumentation of Charm++ applications with TAU is available in the unreleased version of Charm++, which you can download from http://charm.cs.uiuc.edu/download/ (click on the nightly CVS source archive). To compile Charm++:

%> cd charm
%> ./build charm++ mpi-linux-x86_64 mpicxx ifort -O3 ...
%> ./build Tau mpi-linux-x86_64 mpicxx ifort --tau-makefile=<tau_dir>/x86_64/lib/Makefile.tau-icpc-mpi --no-build-shared -O3
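Because the TAU Makefile determines which instrumentation you get, a typo in the --tau-makefile path is easy to miss. The following minimal bash sketch, which is not part of Charm++ or TAU, checks that the file exists before building; the function name check_tau_makefile and the TAU_DIR variable (standing in for <tau_dir>) are hypothetical.

```bash
#!/usr/bin/env bash
# check_tau_makefile PATH
# Hypothetical helper: prints PATH and returns 0 if it is a readable file,
# otherwise prints an error on stderr and returns 1.
check_tau_makefile() {
  local mk="$1"
  if [ -f "$mk" ] && [ -r "$mk" ]; then
    printf '%s\n' "$mk"
    return 0
  fi
  printf 'TAU Makefile not found: %s\n' "$mk" >&2
  return 1
}

# Example (commented out so the sketch runs without Charm++ present);
# TAU_DIR is an assumed variable pointing at your TAU installation:
# mk=$(check_tau_makefile "$TAU_DIR/x86_64/lib/Makefile.tau-icpc-mpi") &&
#   ./build Tau mpi-linux-x86_64 mpicxx ifort --tau-makefile="$mk" --no-build-shared -O3
```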

As always, the TAU Makefile you specify determines which profiling and tracing options are enabled. Once Charm++ has finished building, test the configuration:

%> cd mpi-linux-x86_64-ifort-mpicxx/tests/charm++/simplearrayhello
%> make OPTS='-tracemode Tau -no-trace-mpi'
%> ./charmrun ./hello +p4

Verify that this program runs without any errors and that you get a profile file for each processor.
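The per-processor check can be scripted with a small bash helper. This sketch assumes TAU's usual profile.<node>.<context>.<thread> file naming (for example profile.0.0.0) and one profile file per processor; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# count_tau_profiles DIR
# Prints how many files in DIR match profile.*.*.* (TAU profile naming assumed).
count_tau_profiles() {
  local dir="${1:-.}"
  local n=0 f
  for f in "$dir"/profile.*.*.*; do
    # With no match the glob stays literal, so test that the file exists.
    [ -e "$f" ] && n=$((n + 1))
  done
  printf '%s\n' "$n"
}

# check_profiles DIR EXPECTED
# Returns 0 if DIR holds exactly EXPECTED profile files, 1 otherwise.
check_profiles() {
  local got
  got=$(count_tau_profiles "$1")
  if [ "$got" -eq "$2" ]; then
    echo "OK: $got profile files"
  else
    echo "Expected $2 profile files, found $got" >&2
    return 1
  fi
}
```

After the +p4 run above, `check_profiles . 4` from the simplearrayhello directory should report four files.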

Building NAMD

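Begin in NAMD's home directory. Here $CHARMROOT, $CUDAROOT, and $FFTW stand for your Charm++, CUDA, and FFTW installation directories: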

%> cd NAMD_2.6_Source
%> ./config Linux-x86_64-icc --charm-base $CHARMROOT --with-cuda --cuda-prefix $CUDAROOT --with-fftw --with-fftw3 --fftw-prefix $FFTW
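The ./config line depends on three environment variables. A minimal bash sketch that reports any of them that are unset or empty before you configure; the helper name missing_vars is hypothetical, and ${!name} indirect expansion is bash-specific.

```bash
#!/usr/bin/env bash
# missing_vars NAME...
# Prints, one per line, each listed variable that is unset or empty.
missing_vars() {
  local name
  for name in "$@"; do
    # ${!name:-} expands to the value of the variable whose name is in $name.
    if [ -z "${!name:-}" ]; then
      printf '%s\n' "$name"
    fi
  done
}

# Example (commented out): refuse to configure if a prefix is missing.
# missing=$(missing_vars CHARMROOT CUDAROOT FFTW)
# if [ -n "$missing" ]; then echo "Set these first: $missing" >&2; exit 1; fi
```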

Next, configure NAMD to use TAU by adding the following line to arch/Linux-x86_64-icc.arch:

CHARMOPTS = -tracemode Tau
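Editing the arch file by hand works fine. If you script the build, a bash sketch like the following appends the CHARMOPTS line only once, so re-running it is harmless. The function name add_charmopts is hypothetical; the line it writes is exactly the one above.

```bash
#!/usr/bin/env bash
# add_charmopts ARCHFILE
# Appends "CHARMOPTS = -tracemode Tau" to ARCHFILE unless that exact line
# is already present, so the step can be repeated safely.
add_charmopts() {
  local file="$1"
  local line='CHARMOPTS = -tracemode Tau'
  if [ ! -f "$file" ]; then
    echo "arch file not found: $file" >&2
    return 1
  fi
  if grep -qxF "$line" "$file"; then
    return 0  # already configured
  fi
  # If the file does not end in a newline, add one so the new line stays separate.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

# Example, from the NAMD source directory:
# add_charmopts arch/Linux-x86_64-icc.arch
```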

Then build NAMD:

%> cd Linux-x86_64-icc
%> make

NAMD with CUDA

To profile NAMD's CUDA kernels, a few modifications to the build steps are needed.

In the Charm++ directory, build the Tau target against the CUPTI-enabled TAU Makefile:

%> ./build Tau mpi-linux-x86_64 mpicxx ifort --tau-makefile=<tau_dir>/x86_64/lib/Makefile.tau-icpc-mpi-cupti --no-build-shared -O3

Only the TAU Makefile changes (to the -cupti variant). Then rebuild NAMD and run it under tau_exec:

%> mpirun -np 3 tau_exec -T icpc,mpi,cupti -cupti ./namd2 src/apoa1/apoa1.namd

(The -T options must match the tags of the TAU Makefile used, in this case Makefile.tau-icpc-mpi-cupti.)
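The -T tags can be read off the TAU Makefile name: Makefile.tau-icpc-mpi-cupti corresponds to -T icpc,mpi,cupti. A bash sketch that derives the list from the file name, assuming every TAU Makefile name follows the Makefile.tau-<tag>-<tag>... pattern; the helper name tau_tags is hypothetical.

```bash
#!/usr/bin/env bash
# tau_tags MAKEFILE
# Prints the comma-separated tag list for tau_exec -T, derived from a
# TAU Makefile name of the form Makefile.tau-<tag>-<tag>-...
tau_tags() {
  local base
  base=$(basename "$1")
  case "$base" in
    Makefile.tau-*) ;;
    *) echo "not a TAU Makefile name: $base" >&2; return 1 ;;
  esac
  base=${base#Makefile.tau-}      # strip the fixed prefix
  printf '%s\n' "${base//-/,}"    # turn tag separators into commas
}
```

For example, with TAU_MAKEFILE (assumed here) holding the path of the Makefile used for the Charm++ build, the run line becomes `mpirun -np 3 tau_exec -T "$(tau_tags "$TAU_MAKEFILE")" -cupti ./namd2 src/apoa1/apoa1.namd`, which keeps the two in sync.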

Running NAMD

If everything worked, you should now have a namd2 executable. Test it by running:

%> ./charmrun ./namd2 +p4 src/alanin

Performance Data

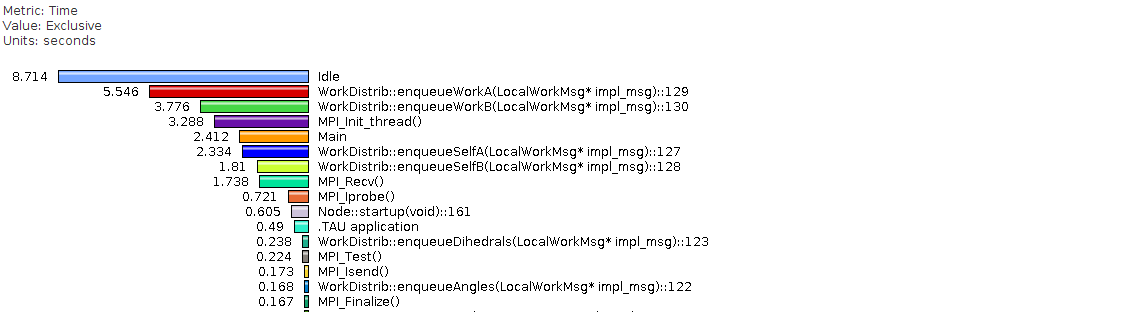

Mean profile of a run with the performance benchmark ALANIN:

Histogram of the enqueueWorkA routine across 32 processors:

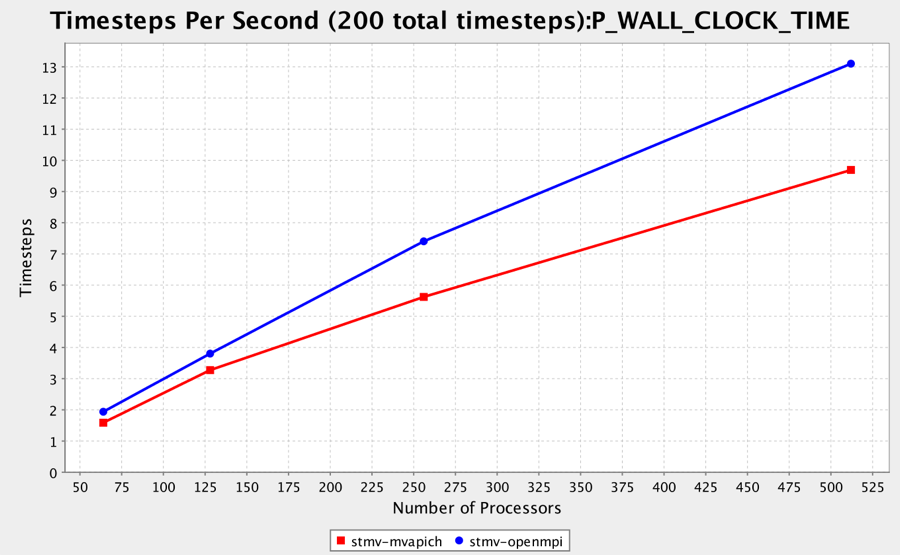

A comparison of timesteps per second between two different MPI implementations on Ranger:
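Timesteps per second is the reciprocal of the seconds per timestep that NAMD reports in its benchmark output. A bash/awk sketch that extracts it from a NAMD log, assuming benchmark lines of the form `Info: Benchmark time: 4 CPUs 0.05 s/step ...` (check the field layout against your own log; the helper name steps_per_second is hypothetical):

```bash
#!/usr/bin/env bash
# steps_per_second LOGFILE
# For each "Benchmark time:" line, takes the number just before "s/step"
# and prints its reciprocal (timesteps per second) to four decimals.
steps_per_second() {
  awk '/Benchmark time:/ {
         for (i = 2; i <= NF; i++)
           if ($i == "s/step" && $(i-1) > 0)
             printf "%.4f\n", 1 / $(i-1)
       }' "$1"
}
```

A run at 0.05 s/step, for instance, corresponds to 20 timesteps per second.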