Quantinuum accelerates the path to Universal Fully Fault-Tolerant Quantum Computing

Supports Microsoft’s AI and quantum-powered compute platform and “the path to a Quantum Supercomputer”

Quantinuum is uniquely known for, and has always put a premium on, demonstrating rather than merely promising breakthroughs in quantum computing.

When we unveiled the first H-Series quantum computer in 2020, not only did we pioneer world-leading quantum processors, but we also went the extra mile. We included industry-leading, comprehensive benchmarking to ensure that any expert could independently verify our results. Since then, our computers have maintained the lead against all competitors in performance and transparency. Today our System Model H2 quantum computer with 56 qubits is the most powerful quantum computer available for industry and scientific research – and the most benchmarked.

More recently, we upgraded our H2 system from 32 to 56 qubits, demonstrating the scalability of our QCCD architecture. In the same period we hit a quantum volume of over two million and announced that we had achieved “three 9s” fidelity, enabling real gains in fault-tolerance – which we proved within months as we demonstrated the most reliable logical qubits in the world with our partner Microsoft.

We don’t just promise what the future might look like; we demonstrate it.

Today, at Quantum World Congress, we shared how recent developments by our integrated hardware and software teams have, yet again, accelerated our technology roadmap. It is with the confidence of what we’ve already demonstrated that we can uniquely announce that by the end of this decade Quantinuum will achieve universal fully fault-tolerant quantum computing, built on foundations such as a universal fault-tolerant gate set, high fidelity physical qubits uniquely capable of supporting reliable logical qubits, and a fully-scalable architecture.

We also demonstrated, with Microsoft, what rapid acceleration looks like with the creation of 12 highly reliable logical qubits – tripling the number from just a few months ago. Among other demonstrations, we supported Microsoft to create the first ever chemistry simulation using reliable logical qubits combined with Artificial Intelligence (AI) and High-Performance Computing (HPC), producing results within chemical accuracy. This is a critical demonstration of what Microsoft has called “the path to a Quantum Supercomputer”.

Quantinuum’s H-Series quantum computers, Powered by Honeywell, were among the first devices made available via Microsoft Azure, where they remain available today. Building on this, we are excited to share that Quantinuum and Microsoft have completed integration of Quantinuum’s InQuanto™ computational quantum chemistry software package with Azure Quantum Elements, the AI-enabled generative chemistry platform. This integration is accessible to customers participating in a private preview of Azure Quantum Elements, which can be requested from Microsoft and Quantinuum.

We created a short video on the importance of logical qubits, which you can see here:

These demonstrations show that we have the tools to drive progress towards scientific and industrial advantage in the coming years. Together, we’re demonstrating how quantum computing may be applied to some of humanity’s most pressing problems, many of which are likely only to be solved with the combination of key technologies like AI, HPC, and quantum computing.

Our credible roadmap draws a direct line from today to hundreds of logical qubits – at which point quantum computing, possibly combined with AI and HPC, will outperform classical computing for a range of scientific problems.

“The collaboration between Quantinuum and Microsoft has established a crucial step forward for the industry and demonstrated a critical milestone on the path to hybrid classical-quantum supercomputing capable of transforming scientific discovery.” – Dr. Krysta Svore, Technical Fellow and VP of Advanced Quantum Development for Microsoft Azure Quantum

What we revealed today underlines the accelerating pace of development. It is now clear that enterprises need to be ready to take advantage of the progress we can see coming in the next business cycle.

Why now?

The industry consensus is that the latter half of this decade will be critical for quantum computing, prompting many companies to develop roadmaps signalling their path toward error corrected qubits. In their entirety, Quantinuum’s technical and scientific advances accelerate the quantum computing industry, and as we have shown today, reveal a path to universal fault-tolerance much earlier than expected.

Grounded in our prior demonstrations, we now have sufficient visibility into an accelerated timeline for a highly credible hardware roadmap, making now the time to release an update. This provides organizations all over the world with a way to plan, reliably, for universal fully fault-tolerant quantum computing. We have shown how we will scale to more physical qubits at fidelities that support lower error rates (made possible by QEC), with the capacity for “universality” at the logical level. “Universality” is non-negotiable when making good on the promise of quantum computing: if your quantum computer isn’t universal, everything you do can be efficiently reproduced on a classical computer.

“Our proven history of driving technical acceleration, as well as the confidence that globally renowned partners such as Microsoft have in us, means that this is the industry’s most bankable roadmap to universal fully fault-tolerant quantum computing,” said Dr. Raj Hazra, Quantinuum’s CEO.

Where we go from here

Before the end of the decade, our quantum computers will have thousands of physical qubits, hundreds of logical qubits with error rates less than 10⁻⁶, and the full machinery required for universality and fault-tolerance – truly making good on the promise of quantum computing.

Quantinuum has a proven history of achieving our technical goals. This is evidenced by our leadership in hardware, software, and the ecosystem of developer tools that make quantum computing accessible. Our leadership in quantum volume and fidelity, our consistent cadence of breakthrough publications, and our collaboration with enterprises such as Microsoft showcase our commitment to pushing the boundaries of what is possible.

We are now making an even stronger public commitment to deliver on our roadmap, ushering the industry toward the era of universal fully fault-tolerant quantum computing this decade. We have all the machinery in place for fault-tolerance with error rates around 10⁻⁶, meaning we will be able to run circuits that are millions of gates deep – putting us on a trajectory for scientific quantum advantage, and beyond.
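To see how an error rate relates to achievable circuit depth, a back-of-the-envelope sketch can help. The Python below assumes a deliberately simplified model that is not part of our roadmap: every gate fails independently with the same probability, and a single failure spoils the run. The function names are illustrative only.

```python
import math


def success_probability(error_rate: float, num_gates: int) -> float:
    """Chance that no gate fails, assuming independent, identical gate errors."""
    return (1.0 - error_rate) ** num_gates


def gate_budget(error_rate: float, target_success: float) -> int:
    """Largest gate count whose no-failure probability stays at or above the target."""
    # log1p(-p) computes log(1 - p) accurately for very small p.
    return math.floor(math.log(target_success) / math.log1p(-error_rate))


if __name__ == "__main__":
    for rate in (1e-3, 1e-4, 1e-6):
        budget = gate_budget(rate, target_success=0.5)
        print(f"error rate {rate:.0e}: about {budget:,} gates before success drops below 50%")
```

Under this toy model, an error rate of 10⁻⁶ still leaves roughly a 37% chance of an error-free run after one million gates, which is the sense in which circuits of that depth come within reach.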

About Quantinuum

Quantinuum, the world’s largest integrated quantum company, pioneers powerful quantum computers and advanced software solutions. Quantinuum’s technology drives breakthroughs in materials discovery, cybersecurity, and next-gen quantum AI. With over 500 employees, including 370+ scientists and engineers, Quantinuum leads the quantum computing revolution across continents.

The central question that has preoccupied our team is:

“How can quantum structures and quantum computers contribute to the effectiveness of AI?”

In previous work we have made notable advances in answering this question, and this article is based on our most recent work in the new papers [arXiv:2406.17583, arXiv:2408.06061], and most notably the experiment in [arXiv:2409.08777].

This article is one of a series that we will be publishing alongside further advances – advances that are accelerated by access to the most powerful quantum computers available.

Large language models (LLMs) such as ChatGPT are having an impact on society across many walks of life. However, as users have become more familiar with this new technology, they have also become increasingly aware of deep-seated and systemic problems that come with AI systems built around LLMs.

The primary problem with LLMs is that nobody knows how they work. As inscrutable “black boxes” they aren’t “interpretable”, meaning we can’t reliably or efficiently control or predict their behavior. This is unacceptable in many situations. In addition, modern LLMs are incredibly expensive to build and run, consuming serious – and potentially unsustainable – amounts of power to train and use. This is why more and more organizations, governments, and regulators are insisting on solutions.

But how can we find these solutions, when we don’t fully understand what we are dealing with now?¹

At Quantinuum, we have been working on natural language processing (NLP) using quantum computers for some time now. We are excited to have recently carried out experiments [arXiv:2409.08777] which demonstrate not only how it is possible to train a model for a quantum computer in a scalable manner, but also how to do this in a way that is interpretable for us. Moreover, we have promising theoretical indications of the usefulness of quantum computers for interpretable NLP [arXiv:2408.06061].

In order to better understand why this could be the case, one needs to understand the ways in which meanings compose together throughout a story or narrative. Our work towards capturing them in a new model of language, which we call DisCoCirc, is reported on extensively in this previous blog post from 2023.

In new work referred to in this article, we embrace “compositional interpretability” as proposed in [arXiv:2406.17583] as a solution to the problems that plague current AI. In brief, compositional interpretability boils down to being able to assign a human-friendly meaning, such as natural language, to the components of a model, and then being able to understand how they fit together.²

A problem currently inherent to quantum machine learning is that of being able to train at scale. We avoid this by making use of “compositional generalization”. This means we train small, on classical computers, and then at test time evaluate much larger examples on a quantum computer. There now exist quantum computers which are impossible to simulate classically. To train models for such computers, it seems that compositional generalization currently provides the only credible path.

1. Text as circuits

DisCoCirc is a circuit-based model for natural language that turns arbitrary text into “text circuits” [arXiv:1904.03478, arXiv:2301.10595, arXiv:2311.17892]. When we say that arbitrary text becomes “text circuits”, we mean that we convert lines of text, which live in one dimension, into text circuits, which live in two dimensions. These dimensions are the entities of the text versus the events in time.

To see how that works, consider the following story. In the beginning there is Alex and Beau. Alex meets Beau. Later, Chris shows up, and Beau marries Chris. Alex then kicks Beau.

The content of this story can be represented as the following circuit:

2. From text circuits to quantum circuits

Such a text circuit represents how the ‘actors’ in it interact with each other, and how their states evolve by doing so. Initially, we know nothing about Alex and Beau. Once Alex meets Beau, we know something about Alex and Beau’s interaction, then Beau marries Chris, and then Alex kicks Beau, so we know quite a bit more about all three, and in particular, how they relate to each other.
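As a rough illustration of the two dimensions involved (actors as wires, events ordered in time), the story above can be written down as a small data structure. This is a toy sketch in Python, not the DisCoCirc implementation; the `Event` class and the helper functions are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One box in the text circuit: a verb acting on the wires of its actors."""
    verb: str
    actors: tuple


# The story from the text, in narrative (time) order.
STORY = [
    Event("meets", ("Alex", "Beau")),
    Event("marries", ("Beau", "Chris")),
    Event("kicks", ("Alex", "Beau")),
]


def wires(events):
    """Actors in order of first appearance; each one becomes a wire."""
    seen = []
    for event in events:
        for actor in event.actors:
            if actor not in seen:
                seen.append(actor)
    return seen


def history(events, actor):
    """Verbs that have touched an actor's wire, in time order."""
    return [event.verb for event in events if actor in event.actors]


def render(events):
    """One row per event: the verb sits on the wires it acts on, '|' elsewhere."""
    names = wires(events)
    width = max(len(text) for text in names + [e.verb for e in events]) + 2
    lines = ["".join(name.center(width) for name in names)]
    for event in events:
        cells = [event.verb if name in event.actors else "|" for name in names]
        lines.append("".join(cell.center(width) for cell in cells))
    return "\n".join(lines)


if __name__ == "__main__":
    print(render(STORY))
    print(history(STORY, "Beau"))
```

Reading the rendered rows from top to bottom follows time, while reading across a row shows which actors an event involves. The `history` of a wire is what we have learned about that actor so far.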

Let’s now take those circuits to be quantum circuits.

In the last section we will elaborate on why this could be a very good choice. For now it’s OK to understand that we simply follow the current paradigm of using vectors for meanings, in exactly the same way that this works in LLMs. Moreover, if we then also want to faithfully represent the compositional structure in language³, we can rely on Theorem 5.49 from our book Picturing Quantum Processes, which informally can be stated as follows:

If the manner in which meanings of words (represented by vectors) compose obeys linguistic structure, then those vectors compose in exactly the same way as quantum systems compose.⁴

In short, a quantum implementation enables us to embrace compositional interpretability, as defined in our recent paper [arXiv:2406.17583].
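To make "composing in the same way as quantum systems" a little more concrete, the sketch below builds the joint state of several wires with the tensor (Kronecker) product, using plain Python lists. The two-dimensional vectors are made-up placeholders rather than trained word states, and the helper names are assumptions for this example.

```python
from functools import reduce


def kron(u, v):
    """Tensor (Kronecker) product of two vectors given as flat lists."""
    return [a * b for a in u for b in v]


def compose(states):
    """Joint state of several wires placed side by side."""
    return reduce(kron, states, [1.0])


# Hypothetical 2-dimensional states for three actors (illustrative values only).
alex = [1.0, 0.0]
beau = [0.0, 1.0]
chris = [0.6, 0.8]

joint = compose([alex, beau, chris])
print(len(joint))  # 8 amplitudes for three 2-dimensional wires
```

The joint vector doubles in length with every added two-dimensional wire, which is one way to see why simulating large text circuits directly on classical hardware becomes costly.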

3. Text circuits on our quantum computer

So, what have we done? And what does it mean?

We implemented a “question-answering” experiment on our Quantinuum quantum computers, for text circuits as described above. We know from our new paper [arXiv:2408.06061] that this is very hard to do on a classical computer: as the texts get bigger, it very quickly becomes unrealistic even to attempt the task classically, however powerful the computer might be. This is worth emphasizing. The experiment we have completed would scale exponentially using classical computers – to the point where the approach becomes intractable.

The experiment consisted of teaching (or training) the quantum computer to answer a question about a story, where both the story and question are presented as text circuits. To test our model, we created longer stories in the same style as those used in training and questioned these. In our experiment, our stories were about people moving around, and we questioned the quantum computer about who was moving in the same direction at the end of the stories. A harder alternative one could imagine would be a murder mystery story, followed by asking the computer who the murderer was.

Remember that the training in our experiment consists of assigning quantum states and gates to the words that occur in the text.

4. Compositional generalization

The major reason for our excitement is that the training of our circuits enjoys compositional generalization. That is, we can do the training on small-scale ordinary computers, and do the testing, or asking the important questions, on quantum computers that can operate in ways not possible classically. Figure 4 shows how, despite only being trained on stories with up to 8 actors, the test accuracy remains high, even for much longer stories involving up to 30 actors.

Training large circuits directly in quantum machine learning leads to difficulties which in many cases undo the potential advantage. Critically, compositional generalization allows us to bypass these issues.

5. Real-world comparison: ChatGPT

We compared the results of our experiment on a quantum computer with the performance of a classical LLM, ChatGPT (GPT-4), when asked the same questions.

What we are considering here is a story about a collection of characters that walk in a number of different directions, and sometimes follow each other. These are just some initial test examples, but they do show that this kind of reasoning is not particularly easy for LLMs.

The input to ChatGPT was:

What we got from ChatGPT:

Can you see where ChatGPT went wrong?

ChatGPT’s score (in terms of accuracy) oscillated around 50% (equivalent to random guessing). Our text circuits consistently outperformed ChatGPT on these tasks. Future work in this area would involve looking at prompt engineering – for example how the phrasing of the instructions can affect the output, and therefore the overall score.

Of course, we note that new versions of ChatGPT and other LLMs will be released that may or may not be marginally better at ‘question-answering’ tasks, and we also note that our own work may become far more effective as quantum computers rapidly become more powerful.

6. What’s next?

We have now turned our attention to work that will show that using vectors to represent meaning, and requiring compositional interpretability for natural language, takes us natively, in mathematical terms, into the quantum formalism. This does not mean that there cannot be an efficient classical method for solving specific tasks. It may also be hard to prove traditional hardness results whenever machine learning is involved, but this is something we might have to come to terms with, just as in classical machine learning.

At Quantinuum we possess the most powerful quantum computers currently available. Our recently published roadmap is going to deliver more computationally powerful quantum computers in the short and medium term, as we extend our lead and push towards universal, fault-tolerant quantum computers by the end of the decade. We expect to show even better (and larger scale) results when implementing our work on those machines. In short, we foresee a period of rapid innovation as powerful quantum computers that cannot be classically simulated become more readily available. This will likely be disruptive, as more and more use cases, including ones that we might not be currently thinking about, come into play.

Intriguingly, we are also pioneering the use of powerful quantum computers in a hybrid system that has been described as a ‘quantum supercomputer’, where quantum computers, HPC and AI work together in an integrated fashion. We look forward to using these systems to advance our work in language processing that can help solve the problems with LLMs that we highlighted at the start of this article.

¹ And where do we go next, when we don’t even understand what we are dealing with now? On previous occasions in the history of science and technology, when efficient models without a clear interpretation have been developed, such as the Babylonian lunar theory or Ptolemy’s model of epicycles, these initially highly successful technologies vanished, making way for something else.

² Note that our conception of compositionality is more general than the usual one adopted in linguistics, which is due to Frege. A discussion can be found in [arXiv:2110.05327].

³ For example, using pregroups here as linguistic structure, which are the cups and caps of PQP.

⁴ That is, using the tensor product of the corresponding vector spaces.

By Dr. Harry Buhrman, Chief Scientist for Algorithms and Innovation, and Dr. Chris Langer, Fellow

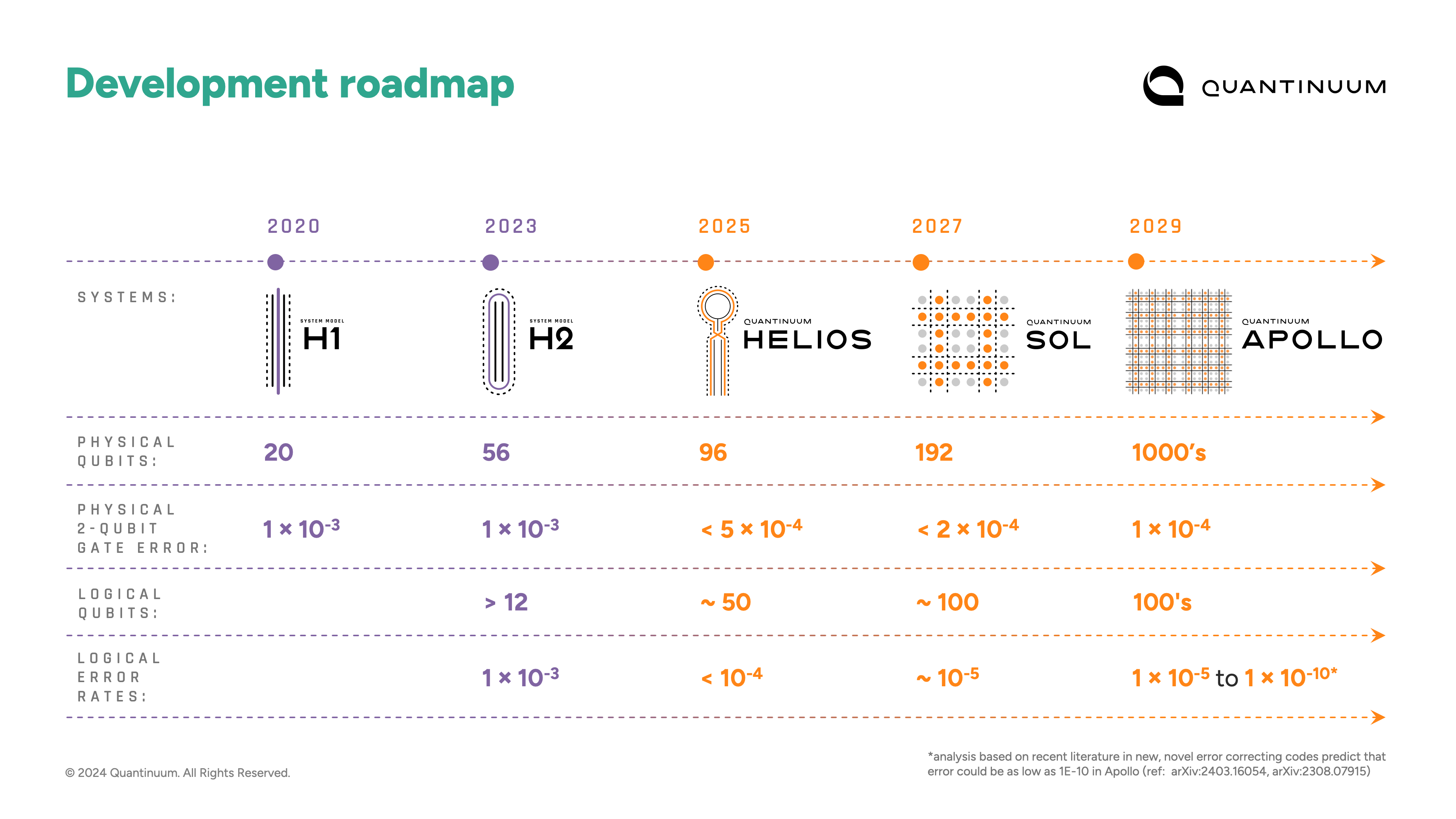

This week, we confirm what has been implied by the rapid pace of our recent technical progress as we reveal a major acceleration in our hardware roadmap. By the end of the decade, this accelerated roadmap will deliver a fully fault-tolerant and universal quantum computer capable of executing millions of operations on hundreds of logical qubits.

The next major milestone on our accelerated roadmap is Quantinuum Helios™, Powered by Honeywell, a device that will definitively push beyond classical capabilities in 2025. That sets us on a path to our fifth-generation system, Quantinuum Apollo™, a machine that delivers scientific advantage and a commercial tipping point this decade.

What is Apollo?

We are committed to continually advancing the capabilities of our hardware over prior generations, and Apollo makes good on that promise. It will offer:

- Thousands of physical qubits

- Physical error rates less than 10⁻⁴

- All of our most competitive features: all-to-all connectivity, low crosstalk, mid-circuit measurement and qubit re-use

- Conditional logic

- Real-time classical co-compute

- Physical variable-angle one-qubit and two-qubit gates

- Hundreds of logical qubits

- Logical error rates better than 10⁻⁶, with analysis based on recent literature estimating rates as low as 10⁻¹⁰

By leveraging our all-to-all connectivity and low error rates, we expect to enjoy significant efficiency gains in terms of fault-tolerance, including single-shot error correction (which saves time) and high-rate and high-distance Quantum Error Correction (QEC) codes (which mean more logical qubits, with stronger error correction capabilities, can be made from a smaller number of physical qubits).

Studies of several efficient QEC codes already suggest we can enjoy logical error rates much lower than our target 10⁻⁶ – we may even be able to reach 10⁻¹⁰, which enables exploration of even more complex problems of both industrial and scientific interest.

Error correcting code exploration is only just beginning – we anticipate discoveries of even more efficient codes. As new codes are developed, Apollo will be able to accommodate them, thanks to our flexible high-fidelity architecture. The bottom line is that Apollo promises fault-tolerant quantum advantage sooner, with fewer resources.

Like all our computers, Apollo is based on the quantum charge-coupled device (QCCD) architecture. Here, each qubit’s information is stored in the atomic states of a single ion. Laser beams are applied to the qubits to perform operations such as gates, initialization, and measurement. The lasers are applied to individual qubits or co-located qubit pairs in dedicated operation zones. Qubits are held in place using electromagnetic fields generated by our ion trap chip. We move the qubits around in space by dynamically changing the voltages applied to the chip. Through an alternating sequence of qubit rearrangements via movement followed by quantum operations, arbitrary circuits with arbitrary connectivity can be executed.
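The alternation between rearrangement and operation can be pictured with a toy scheduler. The Python sketch below is not our compiler or control software. It assumes a simplified model in which any two qubits can be transported into a free operation zone, each zone gates one pair per round, and transport costs are ignored. The function name and the zone count are hypothetical.

```python
def schedule_rounds(gates, num_zones):
    """Greedily group two-qubit gates into rounds of 'rearrange, then operate'.

    gates: list of (qubit_a, qubit_b) pairs in program order.
    num_zones: how many pairs can be gated in parallel in one round.
    Gates that share a qubit keep their program order.
    """
    rounds = []      # rounds[i] lists the pairs gated in round i
    next_free = {}   # qubit -> earliest round in which it may be used again
    for a, b in gates:
        r = max(next_free.get(a, 0), next_free.get(b, 0))
        # Skip rounds whose operation zones are already fully occupied.
        while r < len(rounds) and len(rounds[r]) >= num_zones:
            r += 1
        if r == len(rounds):
            rounds.append([])
        rounds[r].append((a, b))
        next_free[a] = next_free[b] = r + 1
    return rounds


if __name__ == "__main__":
    circuit = [(0, 1), (2, 3), (1, 2), (0, 3)]
    for index, pairs in enumerate(schedule_rounds(circuit, num_zones=2)):
        print(f"round {index}: move ions so that {pairs} are co-located, then apply gates")
```

Because pairs are formed by physically moving ions, the gate `(0, 3)` needs no chain of swaps through intermediate qubits, which is what all-to-all connectivity means in this picture.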

The ion trap chip in Apollo will host a 2D array of trapping locations. It will be fabricated using standard CMOS processing technology and controlled using standard CMOS electronics. The 2D grid architecture enables fast and scalable qubit rearrangement and quantum operations – a critical competitive advantage. The Apollo architecture is scalable to the significantly larger systems we plan to deliver in the next decade.

What is Apollo good for?

Apollo’s scaling of very stable physical qubits and native high-fidelity gates, together with our advanced error-correcting and fault-tolerant techniques, will establish a quantum computer that can perform tasks that do not run (efficiently) on any classical computer. We already had a first glimpse of this in our recent work sampling the output of random quantum circuits on H2, where we performed 100x better than competitors who performed the same task, while using 30,000x less power than a classical supercomputer. But with Apollo we will travel into uncharted territory.

The flexibility to use either thousands of qubits for shorter computations (up to 10k gates) or hundreds of qubits for longer computations (from 1 million to 1 billion gates) makes Apollo a versatile machine with unprecedented quantum computational power. We expect the first application areas will be in scientific discovery, particularly the simulation of quantum systems. While this may sound academic, this is how all new material discovery begins and its value should not be underestimated. This era will lead to discoveries in materials science, high-temperature superconductivity, complex magnetic systems, phase transitions, and high energy physics, among other things.

In general, Apollo will advance the field of physics to new heights while we start to see the first glimmers of distinct progress in chemistry and biology. For some of these applications, users will employ Apollo in a mode where it offers thousands of qubits for relatively short computations; e.g. exploring the magnetism of materials. At other times, users may want to employ significantly longer computations for applications like chemistry or topological data analysis.

But there is more on the horizon. Carefully crafted AI models that interact seamlessly with Apollo will be able to squeeze all the “quantum juice” out and generate data that was hitherto unavailable to mankind. We anticipate using this data to further the field of AI itself, as it can be used as training data.

The era of scientific (quantum) discovery and exploration will inevitably lead to commercial value. Apollo will be the centerpiece of this commercial tipping point where use-cases will build on the value of scientific discovery and support highly innovative commercially viable products.

Very interestingly, we will uncover applications that we are currently unaware of. As is always the case with disruptive new technology, Apollo will run currently unknown use-cases and applications that will make perfect sense once we see them. We are eager to co-develop these with our customers in our unique co-creation program.

How do we get there?

Today, System Model H2 is our most advanced commercial quantum computer, providing 56 physical qubits with physical two-qubit gate errors less than 10⁻³. System Model H2, like all our systems, is based on the QCCD architecture.

Starting from where we are today, our roadmap progresses through two additional machines prior to Apollo. The Quantinuum Helios™ system, which we are releasing in 2025, will offer around 100 physical qubits with two-qubit gate errors less than 5×10⁻⁴. In addition to expanded qubit count and better errors, Helios makes two departures from H2. First, Helios will use ¹³⁷Ba⁺ qubits in contrast to the ¹⁷¹Yb⁺ qubits used in our H1 and H2 systems. This change enables lower two-qubit gate errors and less complex laser systems with lower cost. Second, for the first time in a commercial system, Helios will use junction-based qubit routing. The result will be a “twice-as-good” system: Helios will offer roughly 2x more qubits with 2x lower two-qubit gate errors while operating more than 2x faster than our 56-qubit H2 system.

After Helios we will introduce Quantinuum Sol™, our first commercially available 2D-grid-based quantum computer. Sol will offer hundreds of physical qubits with two-qubit gate errors less than 2×10⁻⁴, operating approximately 2x faster than Helios. As a fully 2D-grid architecture, Sol is the scalability launching point for the significant size increase planned for Apollo.

Opportunity for early value creation discovery in Helios and Sol

Thanks to Sol’s low error rates, users will be able to execute circuits with up to 10,000 quantum operations. The usefulness of Helios and Sol may be extended with a combination of quantum error detection (QED) and quantum error mitigation (QEM). For example, the [[k+2, k, 2]] iceberg code is a lightweight QED code that encodes k logical qubits into k+2 physical qubits and uses only 2 additional ancilla qubits. This low-overhead code is well-suited for Helios and Sol because it offers the non-Clifford variable-angle entangling ZZ gate directly, without the overhead of magic state distillation. The errors the iceberg code fails to detect are already ~10x lower than our physical errors, and by applying a modest run-time overhead to discard detected failures, the effective error in the computation can be further reduced. Combining QED with QEM, a ~10x reduction in the effective error may be possible while maintaining run-time overhead at modest levels and below that of full-blown QEC.
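The qubit accounting behind the [[k+2, k, 2]] notation can be sketched in a few lines of Python. In that notation, k logical qubits are stored in k+2 physical data qubits, and the text above adds 2 ancillas. The helper names and the discard fraction used in the example are hypothetical, and the sketch assumes an even k.

```python
import math

ANCILLAS = 2  # additional ancilla qubits quoted in the text


def iceberg_footprint(k: int) -> dict:
    """Physical resources for k logical qubits in a [[k+2, k, 2]] code."""
    if k <= 0 or k % 2:
        raise ValueError("this sketch assumes a positive, even number of logical qubits")
    data = k + 2
    return {"logical": k, "data": data, "total": data + ANCILLAS, "rate": k / data}


def max_logical_qubits(physical_qubits: int) -> int:
    """Largest even k that fits, counting data qubits and ancillas."""
    k = physical_qubits - 2 - ANCILLAS
    k -= k % 2
    return max(k, 0)


def shots_to_request(accepted_shots: int, discard_fraction: float) -> int:
    """Shots to run so that, on average, enough survive error-detection post-selection."""
    if not 0.0 <= discard_fraction < 1.0:
        raise ValueError("discard_fraction must be in [0, 1)")
    return math.ceil(accepted_shots / (1.0 - discard_fraction))


if __name__ == "__main__":
    print(iceberg_footprint(8))
    print(max_logical_qubits(56))        # a 56-qubit machine such as H2
    print(shots_to_request(1000, 0.5))   # hypothetical 50% discard rate
```

The overhead is a fixed four qubits regardless of k, so the encoding rate k/(k+2) approaches 1 as the register grows. The price is paid in run time instead, through the shots that are discarded when an error is detected.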

Why accelerate our roadmap now?

Our new roadmap is an acceleration over what we were previously planning. The benefits of this are obvious: Apollo brings the commercial tipping point sooner than we previously thought possible. This acceleration is made possible by a set of recent breakthroughs.

First, we solved the “wiring problem”: we demonstrated that trap chip control is scalable using our novel center-to-left-right (C2LR) protocol and broadcasting shared control signals to multiple electrodes. This demonstration of qubit rearrangement in a 2D geometry marks the most advanced ion trap built, containing approximately 40 junctions. This trap was deployed to 3 different testbeds in 2 different cities and operated with 2 different collections of dual-ion-species, and all 3 cases were a success. These demonstrations showed that the footprint of the most complex parts of the trap control stays constant as the number of qubits scales up. This gives us the confidence that Sol, with approximately 100 junctions, will be a success.

Second, we continue to reduce our two-qubit physical gate errors. Today, H1 and H2 have two-qubit gate errors less than 1×10⁻³ across all pairs of qubits. This is the best in the industry and is a key ingredient in our record >2 million quantum volume. Our systems are the most benchmarked in the industry, and we stand by our data, making it all publicly available. Recently, we observed an 8×10⁻⁴ two-qubit gate error in our Helios development test stand in ¹³⁷Ba⁺, and we’ve seen even better error rates in other testbeds. We are well on the path to meeting the 5×10⁻⁴ spec in Helios next year.

Third, the all-to-all connectivity offered by our systems enables highly efficient QEC codes. In Microsoft’s recent demonstration, our H2 system with 56 physical qubits was used to generate 12 logical qubits at distance 4. This work demonstrated several experiments, including repeated rounds of error correction where the error in the final result was ~10x lower than the physical circuit baseline.

In conclusion, through a combination of advances in hardware readiness and QEC, we have line-of-sight to Apollo by the end of the decade: a fully fault-tolerant, quantum-advantaged machine. This will be a commercial tipping point, ushering in an era of scientific discovery in physics, materials, chemistry, and more. Along the way, users will have the opportunity to discover new enabling use cases through quantum error detection and mitigation in Helios and Sol.

Quantinuum has the best quantum computers today and is on the path to offering fault-tolerant useful quantum computation by the end of the decade.

Every year, the IEEE International Conference on Quantum Computing and Engineering – or IEEE Quantum Week – brings together engineers, scientists, researchers, students, and others to learn about advancements in quantum computing.

At this year’s conference, from September 15th – 20th, the Quantinuum team will share insights on how we are forging the path to fault-tolerant quantum computing with our integrated full stack. Join the sessions below to learn about recent upgrades to our hardware, our path to error correction, enhancements to our open-source toolkits, and more.

Visit our team at booth 304 in the exhibit hall to talk in detail about our recent milestones in quantum computing.

You can also catch us at several sessions, from keynote speeches to tutorials. Come say hi!

Sunday, September 15

Workshop: Towards Error Correction within Modular Quantum Computing Architectures

Speaker: Henry Semenenko, Senior Advanced Optics Engineer

Time: 10:00 – 16:30

Speakers: Bob Coecke, Chief Scientist, chaired by Lia Yeh, Research Engineer, who is chair of Quantum in K-12 and Quantum Understanding sessions

Time: 13:00 – 13:15

Monday, September 16

Birds of a Feather: AI in Quantum Computing

Speaker: Josh Savory, Director of Offering Management, Hardware and Cloud Platform Products

Time: 10:00 – 11:30

Tutorial: Using and benefiting from Quantinuum H-Series quantum computers’ unique features

Speakers: Irfan Khan, Senior Application Engineer, and Shival Dasu, Advanced Physicist

Time: 13:00 – 16:30

Tuesday, September 17

Workshop: Applications Explored on H-Series Quantum Hardware

Speakers: Michael Foss-Feig, Principal Physicist, and Nathan Fitzpatrick, Senior Research Scientist

Time: 10:00 – 16:30

Speakers: Josh Savory, Director of Offering Management, Hardware and Cloud Platform Products, and David Hayes, Senior R&D Manager for the theory and architecture groups

Time: 10:00 – 11:30

Speaker: Michael Foss-Feig, Principal Physicist

Time: 15:00 – 16:30

Thursday, September 19

Keynote: Quantinuum H-Series: Advancing Quantum Computing to Scalable Fault-Tolerant Systems

Speaker: Rajeeb Hazra, President & Chief Executive Officer

Time: 8:00 – 9:00

Workshop: Current Progress and Remaining Challenges in Scaling Trapped-Ion Quantum Computing

Speaker: Robert Delaney, Advanced Physicist

Time: 10:00 – 16:30

Tutorial: From Quantum in Pictures to Interpretable Quantum NLP

Speakers: Bob Coecke, Chief Scientist, and Lia Yeh, Research Engineer

Time: 13:00 – 16:30

Workshop: Quantum Software 2.0: Enabling Large-scale and Performant Quantum Computing

Speaker: Kartik Singhal, Quantum Compiler Engineer

Time: 10:00 – 16:30

Birds of a Feather: Navigating the Quantum Computing Journey: Student to Professional Opportunities

Speaker: Lia Yeh

Time: 10:00 – 11:30

Friday, September 20

Workshop: Academic and professional training in quantum computing: the importance of open-source

Speaker: Lia Yeh, Research Engineer

Time: 10:00 – 16:30

Panel: What Does “Break Even” Mean?

Speaker: David Hayes, Senior R&D Manager for the theory and architecture groups

Time: 10:00 – 11:30

*All sessions are listed in Montreal time, Eastern Daylight Time