This README file provides information on the steps involved in the following two Python scripts, which are used to process source documents into plain text chunks and generate embeddings for vector databases:

- `files_to_plain_text.py`
- `populate_vector_database.py`

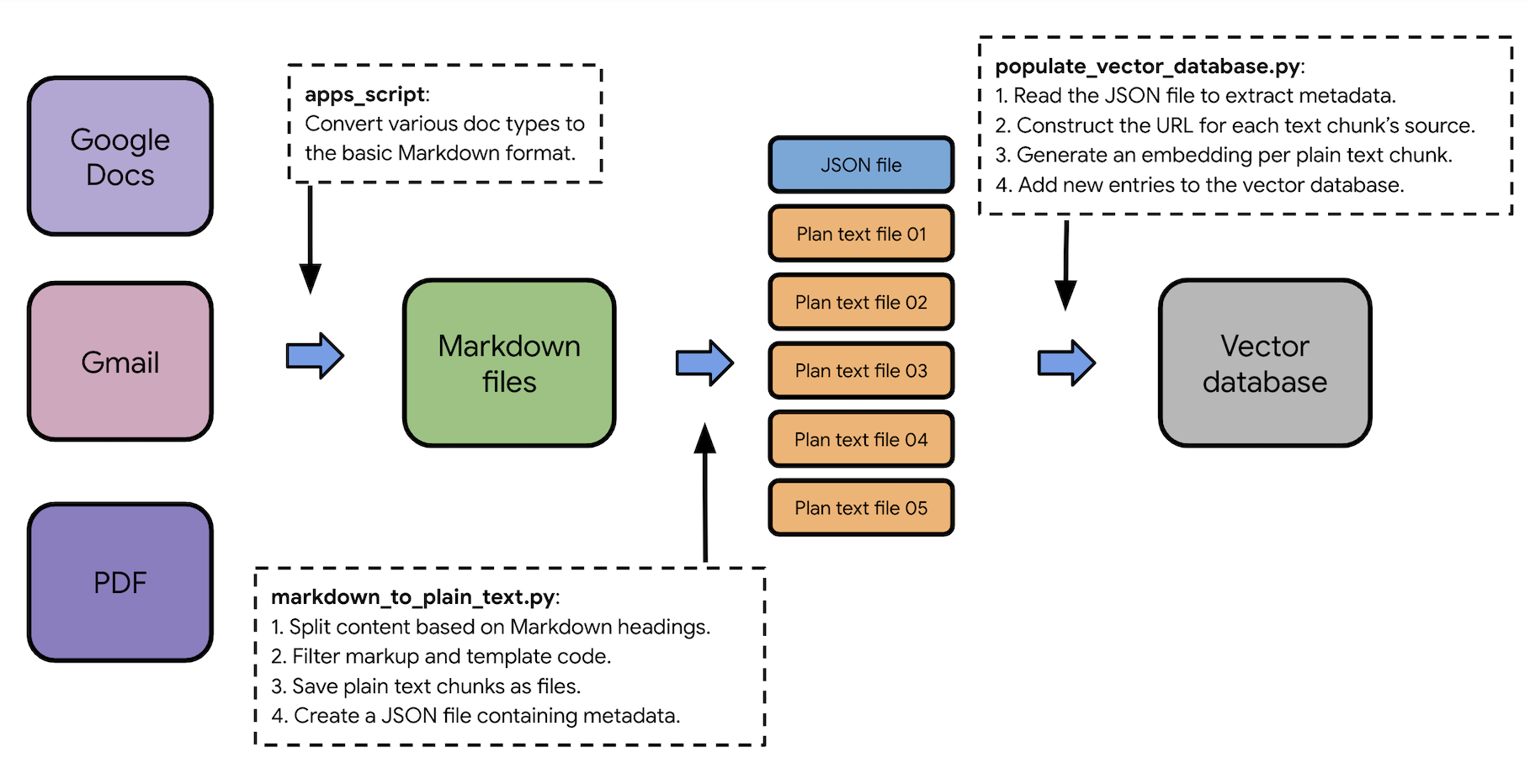

Figure 1. Docs Agent's pre-processing flow from source documents to the vector database.

Note: The `markdown_to_plain_text.py` script is deprecated in favor of the `files_to_plain_text.py` script.

The `files_to_plain_text.py` script splits documents into smaller chunks based on Markdown headings (`#`, `##`, and `###`).

For example, consider the following Markdown page:

```
# Page title

This is the introduction paragraph of this page.

## Section 1

This is the paragraph of section 1.

### Sub-section 1.1

This is the paragraph of sub-section 1.1.

### Sub-section 1.2

This is the paragraph of sub-section 1.2.

## Section 2

This is the paragraph of section 2.
```

This example Markdown page is split into the following 5 chunks:

```
# Page title

This is the introduction paragraph of this page.
```

```
## Section 1

This is the paragraph of section 1.
```

```
### Sub-section 1.1

This is the paragraph of sub-section 1.1.
```

```
### Sub-section 1.2

This is the paragraph of sub-section 1.2.
```

```
## Section 2

This is the paragraph of section 2.
```

Additionally, because of the token size limitation of embedding models, the script recursively splits the chunks above until each chunk is smaller than 5000 bytes (characters).
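The two splitting stages can be sketched in Python as follows. This is a minimal illustration, not the code in `files_to_plain_text.py`: the function names (`split_by_headings`, `split_until_small`, `chunk_markdown`) are hypothetical, and the recursive strategy (halve an oversized chunk at the paragraph break nearest its middle, or at the middle character if there is none) is an assumption. Size is measured in UTF-8 bytes, which equals the character count only for ASCII text.

```python
import re

HEADING_RE = re.compile(r"^#{1,3}\s+\S")  # a #, ##, or ### heading with text
MAX_CHUNK_BYTES = 5000  # size limit stated in this README


def split_by_headings(markdown: str) -> list[str]:
    """Starts a new chunk at every #, ##, or ### heading outside code fences."""
    chunks: list[str] = []
    current: list[str] = []
    in_fence = False
    for line in markdown.splitlines():
        if line.lstrip().startswith("```"):
            in_fence = not in_fence  # headings inside code fences are ignored
        elif not in_fence and HEADING_RE.match(line) and current:
            chunks.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current).strip())
    return [chunk for chunk in chunks if chunk]


def split_until_small(chunk: str, max_bytes: int = MAX_CHUNK_BYTES) -> list[str]:
    """Recursively halves a chunk until every piece is under max_bytes."""
    text = chunk.strip()
    # A single character cannot be split any further.
    if len(text.encode("utf-8")) < max_bytes or len(text) < 2:
        return [text] if text else []
    middle = len(text) // 2
    # Assumption: prefer the paragraph break closest to the middle.
    breaks = [m.start() for m in re.finditer(r"\n\n", text)]
    cut = min(breaks, key=lambda i: abs(i - middle)) if breaks else middle
    return split_until_small(text[:cut], max_bytes) + split_until_small(
        text[cut:], max_bytes
    )


def chunk_markdown(markdown: str) -> list[str]:
    """Heading-based split first, then size-based recursive split."""
    pieces: list[str] = []
    for section in split_by_headings(markdown):
        pieces.extend(split_until_small(section))
    return pieces
```

Running `chunk_markdown()` on the example page above yields the same five chunks. Because lines inside code fences are skipped when looking for headings, a shell comment such as `# install` inside a fenced block does not start a new chunk.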

In the default setting, when processing Markdown files to plain text using the `files_to_plain_text.py` script, the following events take place:

- Read the configuration file (`config.yaml`) to identify input and output directories.
- Construct an array of input sources (which are the `path` entries).
- For each input source, do the following:
  - Extract all input fields (`path`, `url_prefix`, and more).
  - Call the `process_files_from_input()` method using these input fields.
  - For each sub-path in the input directory and for each file in these directories:
    - Check if the file extension is `.md` (that is, a Markdown file).
    - Construct an output directory that preserves the path structure.
    - Read the content of the Markdown file.
    - Call the `process_page_and_section_titles()` method to reformat the page and section titles.
      - Process Front Matter in Markdown.
      - Detect (or construct) the title of the page.
      - Detect Markdown headings (`#`, `##`, and `###`).
      - Convert Markdown headings into plain English (to preserve context when generating embeddings).
    - Call the `process_document_into_sections()` method to split the content into small text chunks.
      - Create a new empty array.
      - Divide the content using Markdown headings (`#`, `##`, and `###`).
      - Insert each chunk into the array and simplify the heading to `#` (title).
      - Return the array.
    - For each text chunk, do the following:
      - Call the `markdown_to_text()` method to clean up Markdown and HTML syntax.
        - Remove `<!-- -->` lines in Markdown.
        - Convert Markdown to HTML (which makes the plain text extraction easy).
        - Use `BeautifulSoup` to extract plain text from the HTML.
        - Remove `[][]` in Markdown.
        - Remove `{: }` in Markdown.
        - Remove `{. }` in Markdown.
        - Remove a single line `sh` in Markdown.
        - Remove code text and blocks.
        - Return the plain text.
      - Construct the text chunk's metadata (including URL) for the `file_index.json` file.
      - Write the text chunk into a file in the output directory.
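The cleanup steps of `markdown_to_text()` can be approximated with the standard library alone. The sketch below is hypothetical: instead of converting Markdown to HTML and parsing it with `BeautifulSoup`, it removes the listed constructs with regular expressions and strips leftover HTML tags with Python's `html.parser.HTMLParser`, so it does not render other Markdown syntax such as emphasis or heading markers. The `[][]` removal step is omitted because its exact pattern is not specified here.

```python
import re
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Keeps the text between HTML tags and drops the tags themselves."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def markdown_to_text_sketch(markdown: str) -> str:
    """Hypothetical, stdlib-only approximation of the markdown_to_text() step."""
    text = markdown
    # Remove lines that consist only of an HTML comment, such as <!-- TODO -->.
    text = re.sub(r"^[ \t]*<!--.*?-->[ \t]*$", "", text, flags=re.MULTILINE)
    # Remove fenced code blocks first, then inline code spans.
    text = re.sub(r"```.*?```", "", text, flags=re.DOTALL)
    text = re.sub(r"`[^`\n]*`", "", text)
    # Remove attribute lists such as {: .note} and {.external}.
    text = re.sub(r"\{[:.][^}]*\}", "", text)
    # Remove a line that contains only "sh".
    text = re.sub(r"^[ \t]*sh[ \t]*$", "", text, flags=re.MULTILINE)
    # Strip remaining HTML tags (and comments) while keeping their text content.
    collector = _TextCollector()
    collector.feed(text)
    collector.close()
    text = "".join(collector.parts)
    # Collapse the blank lines left behind by the removals above.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()
```

Removing code before stripping tags matters in this stdlib version: otherwise any `<...>` text inside a code sample would be parsed as markup.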

When processing plain text chunks to embeddings using the `populate_vector_database.py` script, the following events take place:

- Read the configuration file (`config.yaml`) to identify the plain text directory and Chroma settings.
- Set up the Gemini API environment.
- Select the embeddings model.
- Configure the embedding function (including the API call limit).
- For each sub-path in the plain text directory and for each file in these directories:
  - Check if the file extension is `.md` (that is, a Markdown file).
  - Read the content of the Markdown file.
  - Construct the URL of the text chunk's source.
  - Read the metadata associated with the text chunk file.
  - Store the text chunk and metadata in the vector database, which also generates an embedding for the text chunk at the time of insertion.
    - Skip if the file size is larger than 5000 bytes (due to the API limit).
    - Skip if the text chunk is already in the vector database and the checksum hasn't changed.
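The two skip conditions can be sketched with an in-memory dictionary standing in for the Chroma collection. Everything here is an assumption for illustration: the `store_chunk()` helper and `StoredChunk` type are hypothetical, the checksum is assumed to be an MD5 hex digest (suggested by the `md_hash` metadata field, not confirmed), and the size check measures the chunk's UTF-8 bytes rather than the file on disk. The sketch does not call the Gemini API.

```python
import hashlib
from dataclasses import dataclass

MAX_CHUNK_BYTES = 5000  # limit stated in this README


@dataclass
class StoredChunk:
    """Hypothetical record standing in for a vector database entry."""

    text: str
    md_hash: str
    url: str


def compute_md_hash(text: str) -> str:
    # Assumption: the checksum is an MD5 hex digest of the chunk text.
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def store_chunk(store: dict[str, StoredChunk], filename: str, text: str, url: str) -> str:
    """Stores a chunk unless it is too large or unchanged; returns what happened."""
    # Skip chunks larger than the limit (measured here as UTF-8 bytes).
    if len(text.encode("utf-8")) > MAX_CHUNK_BYTES:
        return "skipped: too large"
    digest = compute_md_hash(text)
    existing = store.get(filename)
    # Skip chunks that are already stored with the same checksum.
    if existing is not None and existing.md_hash == digest:
        return "skipped: unchanged"
    # In the real script, inserting into the vector database also generates the embedding.
    store[filename] = StoredChunk(text=text, md_hash=digest, url=url)
    return "stored"
```

Checking the checksum before inserting avoids re-embedding unchanged chunks, which saves API calls on repeated runs.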

The process below describes how the delete chunks feature is implemented in the `populate_vector_database.py` script:

1. Read all existing entries in the target database.
2. Read all candidate entries in the `file_index.json` file (created after running the `agent chunk` command).
3. For each entry in the existing entries found in step 1:
   1. Compare the `text_chunk_filename` fields (included in the entry's `metadata`).
   2. If not found in the candidate entries in step 2, delete this entry in the database.
   3. If found, compare the `md_hash` fields:
      - If they are different, delete this entry in the database.
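The comparison in step 3 reduces to a small function. In this hypothetical sketch, existing database entries are a mapping from entry ID to metadata, and the candidates from `file_index.json` are assumed to be already loaded into a mapping from `text_chunk_filename` to `md_hash`; the actual layout of `file_index.json` is not described here.

```python
def find_entries_to_delete(
    existing: dict[str, dict[str, str]], candidates: dict[str, str]
) -> list[str]:
    """Returns IDs of existing entries whose chunk is gone or has changed.

    existing:   entry ID -> metadata with "text_chunk_filename" and "md_hash"
    candidates: text_chunk_filename -> md_hash (assumed shape of file_index.json data)
    """
    to_delete: list[str] = []
    for entry_id, metadata in existing.items():
        filename = metadata.get("text_chunk_filename")
        if filename not in candidates:
            # The chunk file no longer exists in the latest chunking run.
            to_delete.append(entry_id)
        elif metadata.get("md_hash") != candidates[filename]:
            # The chunk still exists, but its content has changed.
            to_delete.append(entry_id)
    return to_delete
```

The returned IDs could then be passed to a deletion call such as Chroma's `collection.delete(ids=...)`; changed chunks are re-inserted with fresh embeddings on the next population run.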