SPECsip_Infrastructure2011 Design Document

Revision Date: February 7th, 2011

1 Overview of SPECsip_Infrastructure2011

SPECsip_Infrastructure2011 is a software benchmark product developed by the Standard Performance Evaluation Corporation (SPEC), a non-profit group of computer vendors, system integrators, universities, research organizations, publishers, and consultants. It is designed to evaluate a system's ability to act as a SIP server supporting a particular SIP application. For SPECsip_Infrastructure2011, the application modeled is a VoIP deployment for an enterprise, telco, or service provider, where the SIP server performs proxying and registration. This document gives an overview of the design of SPECsip_Infrastructure2011, including design principles, included and excluded goals, the structure and components of the benchmark, the chosen workload, and the reasons behind various choices. Separate documents cover the specifics of building and running the benchmark. The documents describing the different aspects of the benchmark are:

- The design document (this document): The overall architecture of the benchmark.

- Design FAQ: Frequently asked questions about the design of the benchmark, in Q&A form, not explicitly addressed by the design document.

- Run and reporting rules: How to (and how not to) run the benchmark; appropriate and inappropriate optimizations; standards compliance, hardware & software availability.

- User Guide: How to install, configure, and run the benchmark; how to submit results to SPEC.

- Support FAQ: Frequently asked questions about running the benchmark; typical problems and solutions encountered.

The remainder of this document discusses the design of the SPECsip_Infrastructure2011 benchmark. We assume the reader is familiar with the core SIP terminology defined in IETF RFC 3261, "SIP: Session Initiation Protocol", available at http://www.ietf.org/rfc/rfc3261.txt. The reader should also be familiar with RFC 6076, "Basic Telephony SIP End-to-End Performance Metrics", available at http://www.ietf.org/rfc/rfc6076.txt. Another useful document is RFC 4458, available at http://www.ietf.org/rfc/rfc4458.txt, which describes options for handling voicemail.

1.1 Design Principles

The main principle behind the design of the SPECsip_Infrastructure2011 benchmark is to emulate SIP user behavior in a realistic manner. Users are the main focus of how SIP traffic is generated. This means defining a set of assumptions for how users behave, applying these assumptions through the use of profiles, and implementing the benchmark to reproduce and emulate these profiles. These assumptions are made more explicit in the next section.

1.2 Requirements and Goals

The primary requirement and goal for SPECsip_Infrastructure2011 is to evaluate SIP server systems as a comparative benchmark in order to aid customers. The main features are:

- The System Under Test (SUT) is the SIP server software and its underlying hardware.

- Core SIP operations are exercised (e.g., packet parsing, user lookup, message routing, stateful proxying, registration).

- Generated traffic is based on best available estimates of user behavior.

- Configurations match the requirements of SIP application providers (e.g., requiring authentication).

- Logging for accounting and management purposes is required.

- The standards defined by core IETF SIP RFCs (e.g., RFC 3261) are obeyed.

- Assumptions are made explicit so as to allow modifying the benchmark for custom client engagements (but not for publishing numbers).

The last feature illustrates a secondary goal, which is to aid in capacity planning and provisioning of systems. SPECsip_Infrastructure2011 explicitly defines a standard workload, which consists of standard SIP scenarios (which SIP call flows happen) and the frequency with which they happen (the traffic profile). Different environments (e.g., ISP, telco, university, enterprise) clearly have different behaviors and different frequencies of events. SPECsip_Infrastructure2011 is therefore designed to be configurable, so that in customer engagements the traffic profile, and the resulting generated workload, can easily be adapted to more closely match a particular customer's environment. Towards this end, we make the traffic profile variables explicit. However, results published with SPEC must use the standard SPECsip_Infrastructure2011 benchmark with the standard traffic profile.

1.3 Excluded Goals

Due to time, complexity, and implementation issues, the SPECsip_Infrastructure2011 benchmark is necessarily limited. Goals that are excluded in this release of the benchmark include:

- Completeness: No benchmark captures a complete application, of course. Instead, a benchmark is meant to capture the common-case operations that occur in practice and that have a significant impact on overall performance. SPECsip_Infrastructure2011 cannot exercise all possible code paths and is not a substitute for unit or system testing of SIP software.

- Other SIP applications: SPECsip_Infrastructure2011 focuses on voice proxying and registration. It does not evaluate instant messaging or presence, which are planned for future releases of the benchmark.

- Network effects: Network characteristics such as packet loss, jitter, and delay can have substantial impacts on performance. However, network effects are outside the scope of this benchmark, which is intended to stress the server software and hardware in relative isolation. It is expected that organizations running the benchmark will do so in a high-bandwidth, local-area network environment where the effects of network characteristics are minimized.

Assumptions are listed in detail in Section 2.5.

2 Component Outlines

The SPECsip_Infrastructure2011 benchmark consists of several logical components. This section provides a high-level view of how these components fit together and interact with each other.

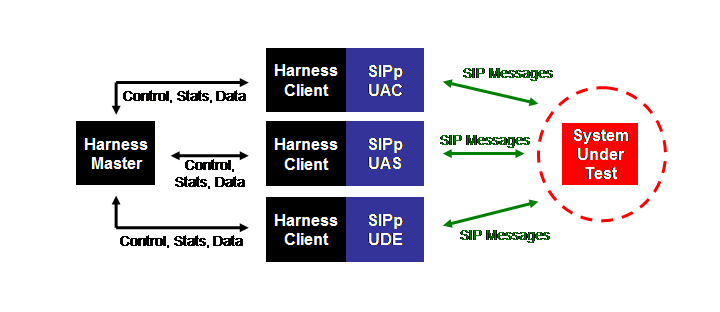

2.1 Logical Architecture

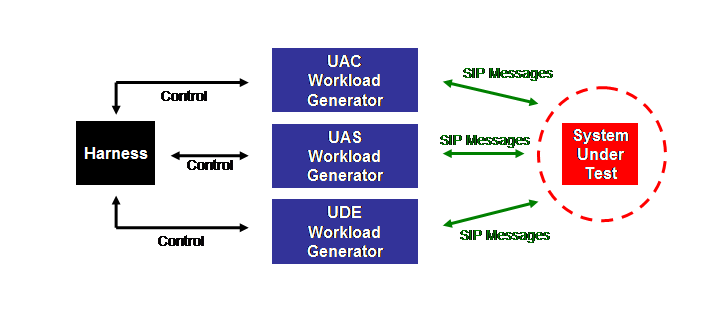

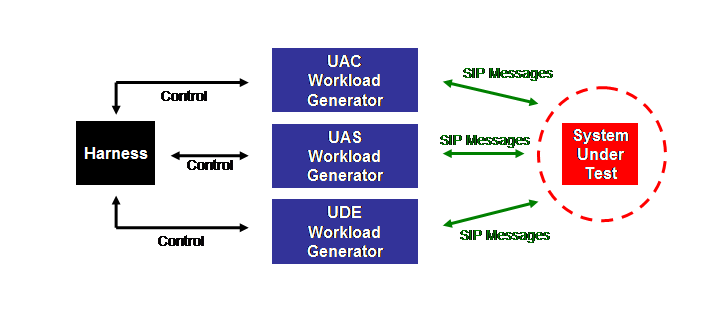

The above Figure shows the logical architecture for how the components of the benchmark system fit together. The benchmark system simulates a group of SIP end point users who perform SIP operations through the System Under Test (SUT).

2.2 SUT (SIP Server for an Application)

The System Under Test (SUT) is the combination of hardware and software that provides the SIP application (in this case, VoIP) that the benchmark stresses. The SUT is not part of the SPEC benchmark suite; it is provided by hardware and/or software vendors wishing to publish SIP performance numbers. The SUT performs two functions: SIP stateful proxying, which forwards SIP requests and responses between users, and registration, which records the user locations that users provide via REGISTER requests. The SUT receives, processes, and responds to SIP messages from the client SIP load drivers (UAC, UAS, and UDE, described below). In addition, it logs SIP transaction information to disk for accounting purposes. The SUT exposes a single IP address as its interface for communication. More extensive details about the SUT (namely, its requirements) are given in Section 6.

2.3 Clients (Workload Generators/Consumers)

Clients emulate the users sending and receiving SIP traffic. Per the SIP RFC notion of making client-side versus server-side behavior explicit and distinct, a user is represented by three SIP components, each of which both sends and receives SIP packets depending on its role.

- The User-Agent Client (UAC), which initiates calls and represents the client or calling side of a SIP transaction;

- The User-Agent Server (UAS), which receives calls, and represents the server or receiving side;

- The User Device Emulator (UDE), which represents the device employed by the user that sends periodic registration requests.

While the User Device Emulator (UDE) is technically a UAC in SIP RFC terms, we distinguish it here from the UAC that makes phone calls, to avoid confusion. Each user agent component above is defined by a user or device model that specifies test scenarios and user behaviors, described in more detail in Section 4.4.

2.4 Benchmark Harness

The benchmark harness is responsible for coordinating the test: starting and stopping multiple client workload generators; collecting and aggregating the results from the clients; determining whether a run was successful; and displaying the results. It is based on the FABAN framework and is described in more detail in Section 7.

2.5 Assumptions

We make the following assumptions about the environment for the benchmark:

- The SUT is both a proxy and a registrar.

- All SIP traffic traverses the SUT.

- Media traffic does not traverse the SUT; the environment is not NAT'ed.

- The SUT is not the voicemail server.

- VoiceMail is handled similarly to a completed call; i.e., redirection to the VoiceMail server is handled at the SDP layer, which is not captured in this benchmark.

3 Performance Metrics

This section describes the primary and secondary metrics that are measured by SPECsip_Infrastructure2011.

3.1 Primary Metric

The primary metric for the SPECsip_Infrastructure2011 benchmark is SPECsip_Infrastructure2011 supported subscribers, where a subscriber is a statistical model of user behavior, specified below. A supported subscriber is one for which the QoS requirements, also specified below, are met. We use the terms user and subscriber interchangeably. A user includes both

- a user model, which captures explicit user behavior (making a call, answering a call, terminating a call); and

- a device model, which captures implicit device behavior, namely periodic registrations.

While a user could potentially be represented by multiple devices simultaneously, for simplicity in the benchmark, there is only one device per user.

3.2 QoS Definitions

Since SIP is a protocol for interactive media sessions, responsiveness is an important characteristic for providing good quality of service (QoS). For a SPECsip_Infrastructure2011 benchmark run to be valid, 99.99 percent ("4 nines") of transactions throughout the lifetime of the test must be successful. The definition of a successful transaction is:

- A SIP transaction (INVITE, CANCEL, ACK, BYE, REGISTER) must complete without transaction timeouts (Timers B and F); and

- Each non-INVITE SIP transaction (BYE, CANCEL, REGISTER) must complete successfully with a 200 (OK) response; and

- Each INVITE transaction must complete with the following response, depending on the scenario:

  - Completed calls must complete with a 200 (OK) response;

  - VoiceMail calls must complete with a 200 (OK) response;

  - Canceled calls must complete with a 487 (Request Terminated) response; and

- Each INVITE transaction (including those that are canceled or redirected to VoiceMail) must have a Session Request Delay of less than 600 milliseconds. Session Request Delay is defined by the Malas draft (published as RFC 6076) and is measured from the INVITE request to the 180 (Ringing) response.

A transaction that does not satisfy the above is considered a failed transaction. Examples of a failed transaction include a transaction timeout or a 503 (Service Unavailable) response. In addition, for a test to be valid, it must comply with the following requirements:

- 95 percent of all successful INVITE transactions must have a Session Request Delay of under 250 milliseconds.

- The traffic profile described in Section 4 below must be adhered to.
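To make the QoS rules above concrete, here is a minimal Python sketch of a run-validity check. It is not part of the benchmark harness: the `Transaction` record and its field names are hypothetical, ACKs are omitted because they receive no response, and the 401/407 challenge exchanges are assumed to be excluded, so each record stands for a transaction expected to end in 200 (OK) or, for the INVITE of a canceled call, 487 (Request Terminated). The traffic-profile requirement is not checked. Integer arithmetic is used so that the 99.99 and 95 percent thresholds are compared exactly.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Transaction:
    """Hypothetical per-transaction record used only for this sketch."""
    method: str                     # "INVITE", "BYE", "CANCEL", or "REGISTER"
    timed_out: bool                 # True if Timer B (INVITE) or Timer F fired
    final_status: Optional[int]     # final response code, None if none arrived
    expected_status: int = 200      # 487 for the INVITE of a canceled call
    srd_ms: Optional[float] = None  # Session Request Delay, INVITEs only


def is_successful(t: Transaction) -> bool:
    """Per-transaction success rules from Section 3.2."""
    if t.timed_out or t.final_status != t.expected_status:
        return False
    if t.method == "INVITE":
        # Every INVITE, including canceled ones, needs an SRD under 600 ms.
        return t.srd_ms is not None and t.srd_ms < 600.0
    return True


def run_is_valid(transactions: List[Transaction]) -> bool:
    """Run-level rules: at least 99.99% successful transactions, and at
    least 95% of successful INVITEs with an SRD under 250 ms."""
    if not transactions:
        return False
    successful = [t for t in transactions if is_successful(t)]
    # successful / total >= 9999 / 10000, compared as integers
    if len(successful) * 10000 < 9999 * len(transactions):
        return False
    invites = [t for t in successful if t.method == "INVITE"]
    fast = sum(1 for t in invites if t.srd_ms < 250.0)
    # fast / invites >= 95 / 100; vacuously true when there are no INVITEs
    return fast * 100 >= 95 * len(invites)
```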

The idea behind these requirements is that the SUT must provide both correct and timely responses. Most responses should complete without SIP-level retransmissions (the 95 percent requirement), but a small number may incur one retransmission (Timer A). The session request delay does include the time for the SUT to interact with the UAS. We thus expect those running the benchmark to provision the UAS machines sufficiently that the response time contributed by the UAS is negligible. We expect the same to be true of the network, which will likely be an isolated high-bandwidth LAN. The bulk of the SRD should thus consist of service and queuing time on the SUT, not service or queuing time on the UAC, UAS, or network.
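The link between the SRD limits and SIP retransmission can be checked with a little arithmetic. The sketch below assumes UDP transport and RFC 3261's default T1 of 500 ms: an INVITE client transaction first retransmits when Timer A fires at T1, doubles the interval after each retransmission, and gives up when Timer B fires at 64*T1. Under these defaults, an INVITE whose first transmission is lost is resent at 500 ms, so it can still meet the 600 ms limit only if the rest of the exchange takes under 100 ms, while the 250 ms target leaves no room for a retransmission at all. The function name is illustrative.

```python
T1_MS = 500                # RFC 3261 default estimate of the round-trip time
TIMER_B_MS = 64 * T1_MS    # INVITE client transaction timeout (32 s)


def invite_retransmit_times_ms(t1_ms: int = T1_MS) -> list:
    """Times (ms after the first send) at which an INVITE is retransmitted
    over UDP before Timer B expires, following the Timer A rules."""
    timeout_ms = 64 * t1_ms
    times = []
    interval = t1_ms            # Timer A starts at T1 ...
    elapsed = interval
    while elapsed < timeout_ms:
        times.append(elapsed)
        interval *= 2           # ... and doubles after each retransmission
        elapsed += interval
    return times


schedule = invite_retransmit_times_ms()
print(schedule)                              # [500, 1500, 3500, 7500, 15500, 31500]
print(sum(1 for t in schedule if t < 600))   # 1
print(sum(1 for t in schedule if t < 250))   # 0
```

Together with the original send, this gives the seven transmissions that RFC 3261 allows before Timer B fires.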

3.3 Secondary Metrics

Secondary metrics are reported to help describe the behavior of the test that has completed. They include:

- Session Request Delay (SRD): As defined by the Malas draft, this is response time as measured from the initial INVITE request to the 180 (Ringing) response. SRD will be reported as times in milliseconds.

- Calls per second: This is the number of completed calls divided by the run time. It is provided for comparison with other benchmarks that report calls per second, although such comparisons may be meaningless.

- Registrations per second: This is the number of completed registrations divided by the run time.

- Registration Request Delay (RRD): As defined by the Malas draft, this is response time as measured from the initial REGISTER request to the 200 (OK) response. RRD will be reported as times in milliseconds.

- Transactions: The number of SIP transactions over the lifetime of the test, and the rate in transactions/second.

- Transaction mix: The ratio of SIP transactions (INVITEs vs. BYEs vs. CANCELs vs. REGISTERs), and whether it matches the traffic profile defined in Section 4.2.
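As a sketch of how these secondary metrics reduce to simple arithmetic over a finished run, the function below computes the rates and the transaction mix from raw counts. The input shapes (a completed-call count, a completed-registration count, and an iterable of logged transaction methods) are assumptions for illustration; the harness's actual data structures are not described in this document.

```python
from collections import Counter
from typing import Dict, Iterable


def secondary_metrics(completed_calls: int, completed_registrations: int,
                      methods: Iterable[str], run_time_s: float) -> Dict:
    """Rate and mix metrics for a finished run (hypothetical inputs)."""
    counts = Counter(methods)
    total = sum(counts.values())
    mix = {m: (counts[m] / total if total else 0.0)
           for m in ("INVITE", "BYE", "CANCEL", "REGISTER")}
    return {
        "calls_per_second": completed_calls / run_time_s,
        "registrations_per_second": completed_registrations / run_time_s,
        "transactions": total,
        "transactions_per_second": total / run_time_s,
        "transaction_mix": mix,
    }


m = secondary_metrics(completed_calls=360, completed_registrations=600,
                      methods=["INVITE", "INVITE", "BYE", "REGISTER"],
                      run_time_s=3600.0)
print(m["calls_per_second"], m["transaction_mix"]["INVITE"])
```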

4 Workload Description

As described earlier, the modeled application is a VoIP deployment in an enterprise, telco, or service provider context. Users make calls, answer them, and talk. Devices also act on behalf of users by periodically registering their location. Additional details of the SPECsip_Infrastructure2011 workload include:

- RTP traffic is excluded; only SIP traffic traverses the proxy.

- All SIP traffic is local; there is no inbound or outbound traffic.

- All requests that can be authenticated are authenticated, namely INVITE, BYE, and REGISTER. Per RFC 3261, CANCEL and ACK are not authenticated.

4.1 Scaling Definition: SPECsip_Infrastructure2011 Supported Subscribers

Since the primary performance metric is the number of supported subscribers, load in the benchmark is increased by adding subscribers. Each instantiated subscriber implies an instantiated UAC, UAS, and UDE for that user.

4.2 Traffic Profile (Scenario Probabilities)

This section defines the SIP transaction scenarios that make up the traffic profile, for both users making calls and devices making registrations. Registration scenarios are executed by the UDE. Calling scenarios are executed by the UAC and UAS acting together.

| Registration Scenario Name | Variable Name | Definition | Default Value |
|---|---|---|---|
| Successful Registration | RegistrationSuccessProbability | Probability that a registration is successful | 1.00 |
| Failed Registration | RegistrationFailureProbability | Probability that a registration fails due to an authentication error | 0.00 |

While the SPEC SIP Subcommittee considered the scenario where a device does not authenticate successfully due to a bad password, we believe that scenario is so infrequent as not to be worth including in the benchmark.

| Call Scenario Name | Variable Name | Definition | Default Value |
|---|---|---|---|
| Completed Call | CompletedProbability | Probability that a call is completed to a human | 0.65 |
| VoiceMail Call | VoiceMailProbability | Probability that a call is routed to voicemail | 0.30 |
| Canceled Call | CancelProbability | Probability that a call is canceled by the UAC | 0.05 |
| Rejected Call | RejectProbability | Probability that a call is rejected by the UAS (busy signal) | 0.00 |
| Failed Call | FailProbability | Probability that a user cannot be found | 0.00 |

The final two call scenarios, rejected call and failed call, were considered by the SPEC SIP Subcommittee but excluded, since they did not appear frequent enough to warrant inclusion. The rejected call scenario (busy signal) appears to be a relic of the past, since when a user is on the phone the call is routed to voicemail rather than rejected with a busy signal. Similarly, the failed call scenario appears to be obsolete, since when a non-working extension is dialed, the caller is connected to a machine that plays a message such as "you have reached an invalid extension at XYZ Corporation." For these reasons, the last two scenarios are not included in the traffic profile: they effectively map to completed calls to a voicemail-like service, and thus their impact on the SUT is similar to that of a voicemail call. Future versions of the benchmark may revisit these decisions if, for example, real traffic studies show that these scenarios contribute a non-trivial amount of traffic in a VoIP environment.
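Because the UAC chooses the call scenario for each call (Section 4.4.1), the call profile above amounts to a weighted random choice. A minimal Python sketch, assuming the standard default probabilities and using the scenario names from the table as dictionary keys; the function names are hypothetical, and a customized profile for a customer engagement would only change the dictionary values.

```python
import random
from typing import Dict

# Standard call-scenario probabilities from the call scenario table.
STANDARD_CALL_PROFILE: Dict[str, float] = {
    "Completed Call": 0.65,
    "VoiceMail Call": 0.30,
    "Canceled Call": 0.05,
    "Rejected Call": 0.00,   # considered, but excluded from the standard profile
    "Failed Call": 0.00,     # considered, but excluded from the standard profile
}


def validate_profile(profile: Dict[str, float]) -> None:
    """Reject profiles with negative probabilities or a sum other than 1."""
    if any(p < 0 for p in profile.values()):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(profile.values()) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")


def choose_scenario(rng: random.Random,
                    profile: Dict[str, float] = STANDARD_CALL_PROFILE) -> str:
    """Draw one call scenario with the profile's probabilities."""
    names = list(profile)
    return rng.choices(names, weights=[profile[n] for n in names], k=1)[0]


rng = random.Random(42)
print([choose_scenario(rng) for _ in range(5)])
```

Zero-weight scenarios are never drawn, so the excluded scenarios can stay in the dictionary as explicit, tunable variables.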

4.3 Traffic Parameters

This section defines the traffic parameters used by the user and device models. Durations are periods of time taken for particular events, for example, CompletedCallDuration. Most durations are parameterized distributions, but some are fixed, constant values.

| Parameter Name | Definition | Distribution | Default Value |
|---|---|---|---|
| RegistrationDuration | Time between successive REGISTER requests by the UDE | Constant | 10 minutes |
| RegistrationValue | Timeout value placed in the REGISTER request | Constant | 15 minutes |
| RegistrationStartupDuration | Time a UDE waits at startup, so that UDE registrations are not synchronized | Uniform | 10 minutes (average) |
| ThinkDuration | Time a user thinks between successive calls during the busy hour | Exponential | 1 hour (average) |
| CompletedCallDuration | Talk time for a completed call | Lognormal | μ = 12.111537753638979, σ = 1.0 (average 300,000 milliseconds) |
| VoiceMailCallDuration | Talk time for a call routed to voicemail | Lognormal | μ = 10.50209984120, σ = 1.0 (average 60,000 milliseconds) |
| CompletedRingDuration | Time the phone rings before it is picked up by the user | Uniform | 1-15 seconds |
| VoiceMailRingDuration | Time the phone rings before the call is routed to voicemail | Constant | 15 seconds |
| CancelRingDuration | Time the phone rings before the user gives up | Uniform | 5-15 seconds |
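The lognormal parameters in the table follow from the standard identity for the mean of a lognormal distribution, E[X] = exp(μ + σ²/2), so μ = ln(mean) − σ²/2. With σ = 1.0, μ = ln(300,000) − 0.5 ≈ 12.1115 and μ = ln(60,000) − 0.5 ≈ 10.5021, matching the table. The Python sketch below checks this and shows one way to draw each parameter with the standard `random` module; the sampler names are hypothetical and all durations are in milliseconds.

```python
import math
import random


def lognormal_mu(mean: float, sigma: float) -> float:
    """mu such that a lognormal(mu, sigma) variable has the given mean."""
    return math.log(mean) - sigma ** 2 / 2


# Hypothetical samplers for the Section 4.3 parameters (milliseconds).
def think_duration_ms(rng: random.Random) -> float:
    return rng.expovariate(1 / 3_600_000)               # exponential, mean 1 hour


def completed_call_duration_ms(rng: random.Random) -> float:
    return rng.lognormvariate(12.111537753638979, 1.0)  # mean 300,000 ms


def voicemail_call_duration_ms(rng: random.Random) -> float:
    return rng.lognormvariate(10.50209984120, 1.0)      # mean 60,000 ms


def completed_ring_duration_ms(rng: random.Random) -> float:
    return rng.uniform(1_000, 15_000)                   # 1-15 seconds


def cancel_ring_duration_ms(rng: random.Random) -> float:
    return rng.uniform(5_000, 15_000)                   # 5-15 seconds


VOICEMAIL_RING_DURATION_MS = 15_000                     # constant
REGISTRATION_DURATION_MS = 10 * 60 * 1000               # constant, 10 minutes
REGISTRATION_VALUE_MS = 15 * 60 * 1000                  # constant, 15 minutes

print(round(lognormal_mu(300_000, 1.0), 6))   # 12.111538
print(round(lognormal_mu(60_000, 1.0), 6))    # 10.5021
```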

4.4 User and Device Models

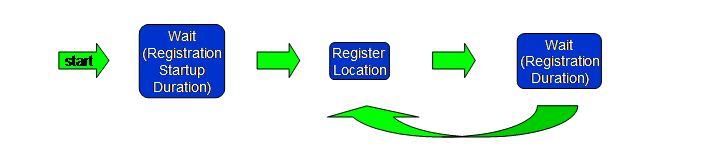

User models are meant to capture the way in which a user interacts with the SIP protocol stack below. User behavior is represented using a standard workflow model, in which users take programmatic steps and make decisions that cause branching behavior. Steps are expressed in terms of what the user does, rather than what the SIP protocol does. User steps may trigger particular SIP call flows that depend on the action taken; however, the actions taken at the SIP protocol layer occur "below" the user behavior layer. Thus, for example, a step may say "make a call" but will not enumerate the packet exchanges for the INVITE request. Device models serve a similar function, but are intended to capture the way a device behaves, where a device is some piece of software or hardware used by the user, e.g., a hard phone, a soft phone, or IM client software. Device models are typically used to model periodic behavior by the device. For example, two packet traces of SIP software show that a common choice is to register with a 15-minute timeout value and then re-register at 10-minute intervals, so that the registration does not expire. These periodic values are not defined by the SIP protocol and are thus left to the developer's discretion; the device model is meant to capture a common-case choice made by these developers.
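A back-of-the-envelope estimate of the load that N subscribers offer to the SUT follows from the traffic profile, the parameter means in Section 4.3, and the 10-minute re-registration interval described here. The Python sketch below assumes that each UAC cycles sequentially through think, ring, and talk time (so the mean cycle is ThinkDuration plus the probability-weighted mean ring and talk times) and ignores SIP message latency; both are simplifying assumptions, not statements about how the load generators schedule calls.

```python
from typing import Dict

THINK_S = 3600.0               # ThinkDuration mean
REGISTRATION_PERIOD_S = 600.0  # RegistrationDuration

# scenario: (probability, mean ring time in s, mean talk time in s)
SCENARIOS = {
    "Completed": (0.65, 8.0, 300.0),   # ring ~ Uniform(1, 15) s, mean 8 s
    "VoiceMail": (0.30, 15.0, 60.0),   # constant 15 s ring
    "Canceled": (0.05, 10.0, 0.0),     # ring ~ Uniform(5, 15) s, no talk
}


def mean_call_cycle_s() -> float:
    """Mean time between successive call starts by one UAC (assumed sequential)."""
    return THINK_S + sum(p * (ring + talk) for p, ring, talk in SCENARIOS.values())


def offered_load(subscribers: int) -> Dict[str, float]:
    return {
        "calls_per_s": subscribers / mean_call_cycle_s(),
        "registrations_per_s": subscribers / REGISTRATION_PERIOD_S,
    }


print(round(mean_call_cycle_s(), 1))   # 3823.2
print({k: round(v, 2) for k, v in offered_load(6000).items()})
# {'calls_per_s': 1.57, 'registrations_per_s': 10.0}
```

Under these assumptions the registration traffic per subscriber is several times the call traffic, which is one reason the device model matters for SUT load.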

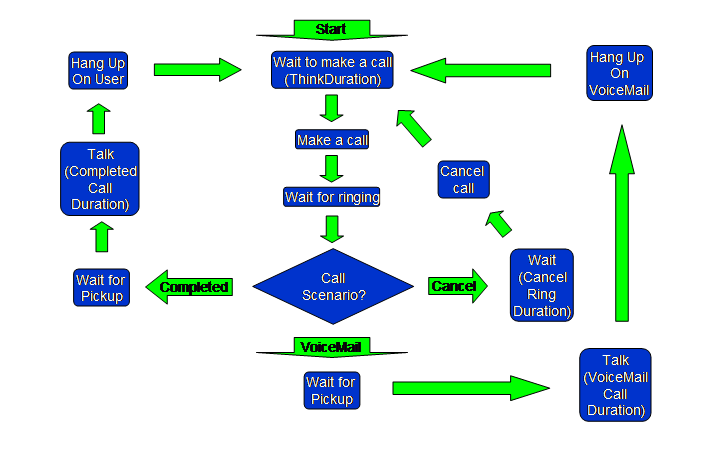

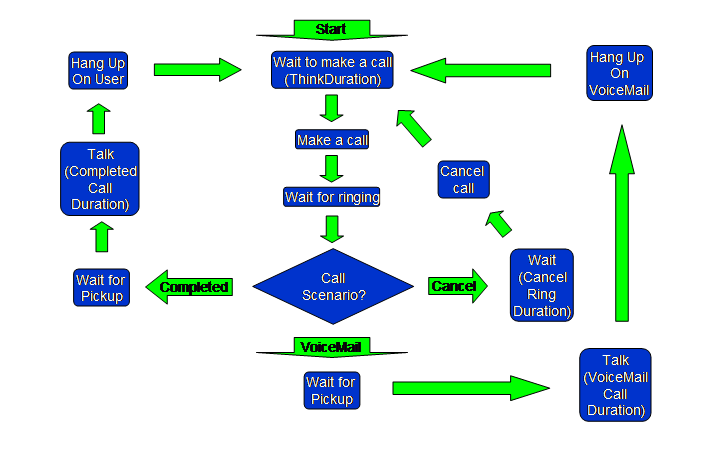

4.4.1 UAC User Model

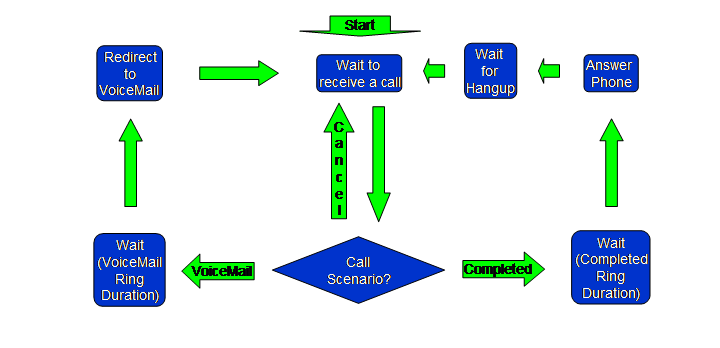

The above Figure shows the user model for the UAC. Note that the UAC makes the decision of which call scenario is being performed (Completed, VoiceMail, Canceled), and communicates that information to the UAS via an out-of-band channel. Note also that, depending on the scenario, the UAC may hang up on the user, generating traffic to the SUT, or the UAC may hang up on the VoiceMail server, which does not generate traffic to the SUT.
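The UAC user model can be read as a loop: think, pick a scenario, place the call, then either talk and hang up or give up while the phone rings. The Python sketch below produces the user-level steps and their durations for one pass through that loop; in keeping with the user-model layering of Section 4.4, it says nothing about the SIP messages underneath. The step names and the function are hypothetical, and the distributions are the Section 4.3 defaults in milliseconds.

```python
import random
from typing import List, Tuple

Step = Tuple[str, float]   # (user action, duration in ms)


def uac_call_cycle(rng: random.Random) -> Tuple[str, List[Step]]:
    """One pass through a sketch of the UAC user model."""
    steps: List[Step] = [("think", rng.expovariate(1 / 3_600_000))]
    scenario = rng.choices(["Completed", "VoiceMail", "Canceled"],
                           weights=[0.65, 0.30, 0.05])[0]
    steps.append(("make call", 0.0))
    if scenario == "Completed":
        steps.append(("ring", rng.uniform(1_000, 15_000)))
        steps.append(("talk", rng.lognormvariate(12.111537753638979, 1.0)))
        steps.append(("hang up", 0.0))
    elif scenario == "VoiceMail":
        steps.append(("ring", 15_000.0))
        steps.append(("talk", rng.lognormvariate(10.50209984120, 1.0)))
        steps.append(("hang up", 0.0))
    else:  # Canceled: the caller gives up while the phone is ringing
        steps.append(("ring", rng.uniform(5_000, 15_000)))
        steps.append(("give up", 0.0))
    return scenario, steps


scenario, steps = uac_call_cycle(random.Random(7))
print(scenario, [name for name, _ in steps])
```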

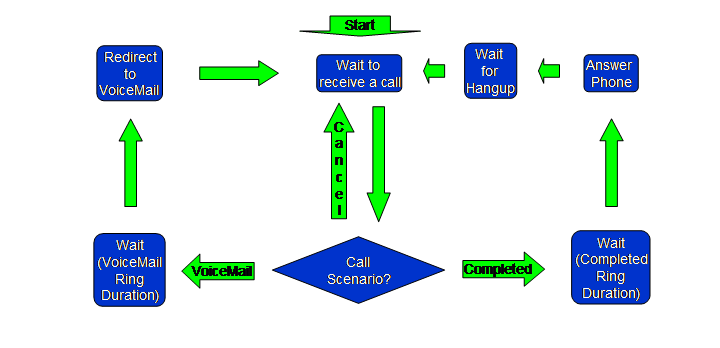

4.4.2 UAS User Model

The above Figure shows the user model for the UAS. Note that the UAS must know which call scenario is being performed, and receives this information from the UAC via the User-Agent: header. Note that in the Cancel call scenario, the UAS returns to the "Wait to receive a call" state and effectively does nothing.
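On the UAS side, the scenario carried in the User-Agent: header determines which responses the UAS sends. The Python sketch below assumes a hypothetical encoding in which the UAC appends a `scenario=<name>` token to its User-Agent value (the actual encoding used by the benchmark's scenario files is not specified in this document) and lists the responses the UAS sends in each case, following the call flows in Section 4.5.

```python
from typing import List, Tuple


def scenario_from_user_agent(user_agent: str) -> str:
    """Extract a scenario name from a hypothetical 'scenario=<name>' token."""
    for token in user_agent.split():
        if token.startswith("scenario="):
            return token.split("=", 1)[1]
    raise ValueError("User-Agent carries no scenario token")


def uas_responses(scenario: str) -> List[Tuple[str, int]]:
    """(request answered, status code) pairs the UAS sends, in order."""
    if scenario in ("Completed", "VoiceMail"):
        # Ring, answer, and later accept the caller's BYE.
        return [("INVITE", 180), ("INVITE", 200), ("BYE", 200)]
    if scenario == "Canceled":
        # Ring until the CANCEL arrives, accept it, and end the INVITE with 487.
        return [("INVITE", 180), ("CANCEL", 200), ("INVITE", 487)]
    raise ValueError(f"unknown scenario: {scenario}")


print(uas_responses(scenario_from_user_agent("SIPp-UAC scenario=Canceled")))
```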

4.4.3 UDE Device Model

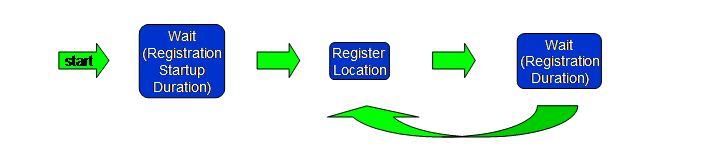

The above Figure shows the device model for the UDE.
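The UDE device model is a simple periodic loop. The Python sketch below generates one UDE's REGISTER times over a time horizon and checks that each re-registration arrives before the previous registration expires. It assumes RegistrationStartupDuration is uniform between 0 and 20 minutes, which gives the stated 10-minute average; the actual bounds are not given in this document, and the function names are hypothetical.

```python
import random
from typing import List

REGISTRATION_DURATION_S = 600    # time between successive REGISTERs
REGISTRATION_VALUE_S = 900       # timeout value placed in each REGISTER
STARTUP_MAX_S = 1200             # ASSUMPTION: startup delay ~ Uniform(0, 20 min)


def ude_register_times(rng: random.Random, horizon_s: float) -> List[float]:
    """Times (s from test start) at which one UDE sends a REGISTER."""
    t = rng.uniform(0, STARTUP_MAX_S)   # desynchronize UDEs at startup
    times = []
    while t < horizon_s:
        times.append(t)
        t += REGISTRATION_DURATION_S
    return times


def never_lapses(times: List[float]) -> bool:
    """True if every re-registration precedes the previous one's expiry."""
    return all(b - a < REGISTRATION_VALUE_S for a, b in zip(times, times[1:]))


times = ude_register_times(random.Random(5), horizon_s=3600)
print([round(t) for t in times], never_lapses(times))
```

The 5-minute gap between the 10-minute re-registration period and the 15-minute timeout is what lets a single delayed REGISTER arrive without the binding expiring.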

4.5 Use Case Scenarios

4.5.1 Registration Scenario 1: Successful Registration

This scenario models the process of user registration in a SIP environment. Registration is common to many SIP applications. Each user has a unique public SIP identity, and must be registered and authenticated to the SUT before being able to receive any messages. Through registration, each user binds its public identity to an actual UA SIP address (i.e., IP address and port number). In general, SIP allows multiple addresses to be registered for each user. In the benchmark, however, for simplicity, a maximum of one such UA address is allowed to be registered for a single public identity.

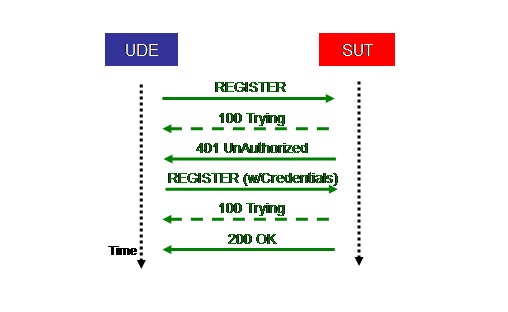

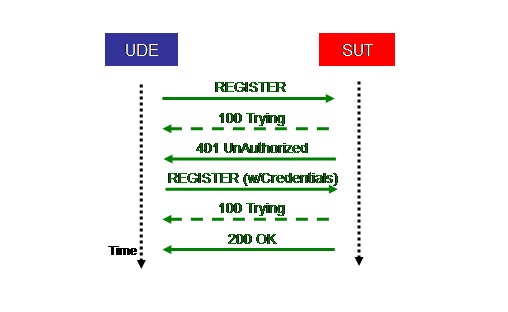

The above Figure shows the call flow for the successful registration scenario, which proceeds as follows:

- The UDE sends an initial REGISTER request to the SUT with the SIP To: header set to the user’s public identity (SIP URI).

- The SUT optionally sends a 100 (Trying) response to the UDE (denoted by dashed lines).

- The SUT then generates a challenge and sends a 401 (Unauthorized) response back to the UDE with the challenge.

- After receiving the 401 response, the UDE generates a credential based on an MD5 hash of the challenge and the user password.

- The UDE then resends the REGISTER request to the SUT with an Authorization: header that includes the credential.

- The SUT optionally sends a 100 (Trying) response to the UDE (denoted by dashed lines).

- The SUT extracts the public identity and examines the user profile.

- The SUT verifies the credential and sends back a 200 (OK) response.
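The credential computation in the steps above is HTTP Digest authentication, which RFC 3261 reuses from RFC 2617. A minimal Python sketch of the MD5 `response` value, assuming the simplest form without the `qop` directive (deployments that use `qop=auth` also mix a nonce count and a client nonce into the final hash); the function names and example values are illustrative.

```python
import hashlib


def md5_hex(text: str) -> str:
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def digest_response(username: str, realm: str, password: str,
                    method: str, uri: str, nonce: str) -> str:
    """RFC 2617 digest 'response' value for algorithm=MD5 without qop."""
    ha1 = md5_hex(f"{username}:{realm}:{password}")   # secret shared with the SUT
    ha2 = md5_hex(f"{method}:{uri}")                  # what is being requested
    return md5_hex(f"{ha1}:{nonce}:{ha2}")            # binds both to the challenge


# A UDE answering a 401 challenge for REGISTER (example values only):
print(digest_response("alice", "example.com", "secret",
                      "REGISTER", "sip:example.com", "abc123"))
```

Because the nonce from the challenge enters the final hash, the SUT can verify the password without it crossing the wire, and a stale credential is rejected once the nonce expires.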

In some cases, it is possible to cache the nonce used in the challenge, so that the Authorization header can be reused on later SIP requests without going through the 401 exchange above. However, for security reasons, a nonce is valid for only a limited period of time. SPECsip_Infrastructure2011 assumes that any nonce will have expired, and thus that the authorization exchange above is necessary for each transaction.

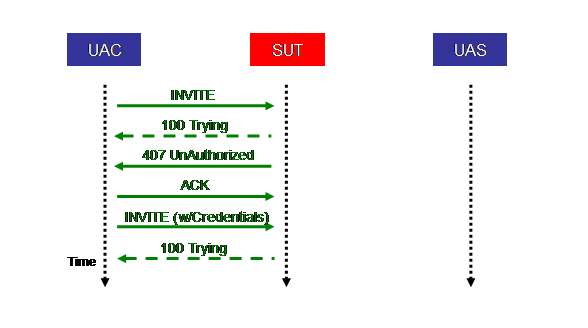

4.5.2 Call Scenario 1: Completed Call

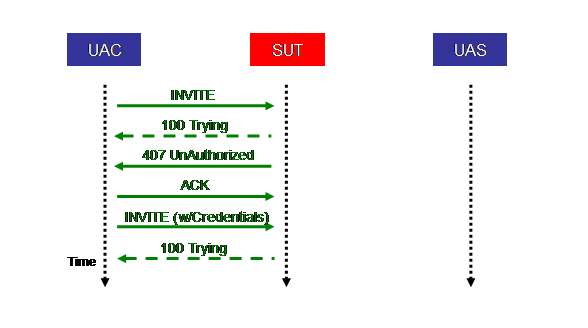

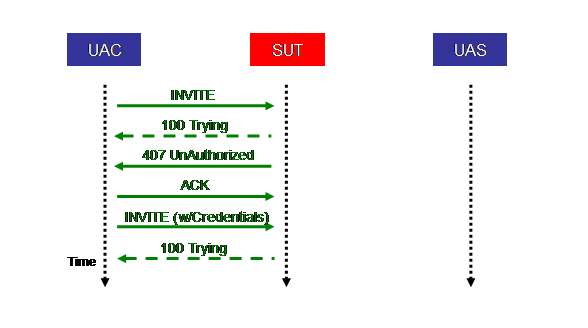

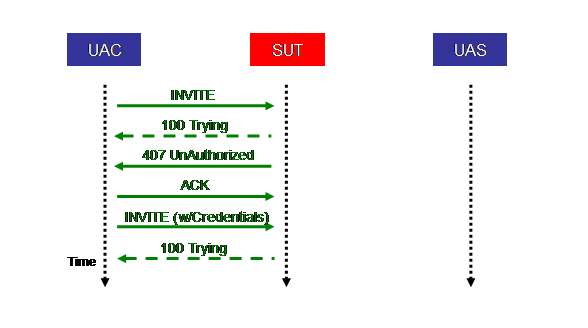

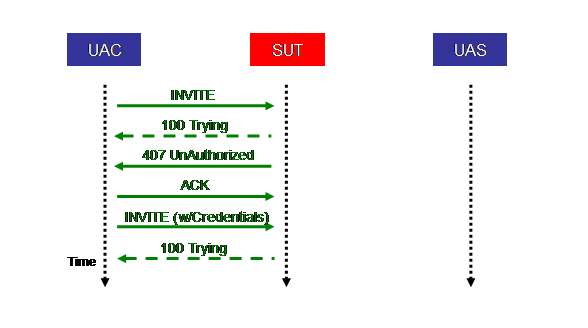

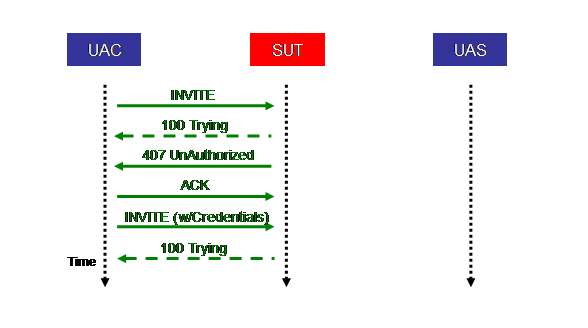

The above Figure shows the call flow of the first stage of the INVITE part of the Completed call scenario. The message flow is as follows:

- The UAC sends an initial INVITE request to the SUT.

- The SUT optionally sends a 100 (Trying) response to the UAC (denoted by dashed lines).

- The SUT generates a challenge and sends a 407 (Proxy Authentication Required) response back to the UAC with the challenge.

- The UAC sends an ACK request for the 407, completing the first INVITE transaction.

- The UAC generates a credential based on an MD5 hash of the challenge and the user password.

- The UAC then resends the INVITE request to the SUT with a Proxy-Authorization: header that includes the credential, creating a new transaction.

- The SUT extracts the public identity, examines the user profile, and verifies the credential.

- The SUT optionally sends a 100 (Trying) response to the UAC (denoted by dashed lines).

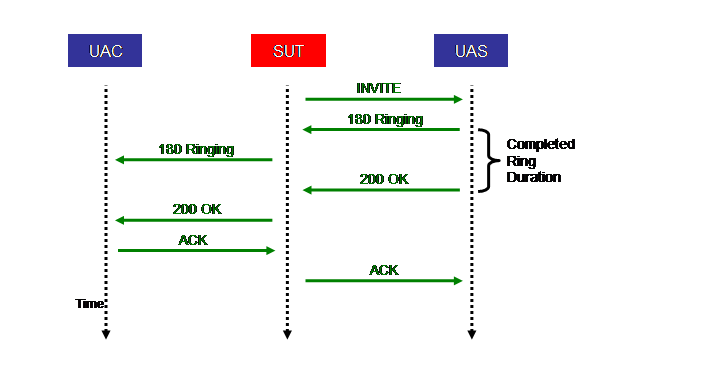

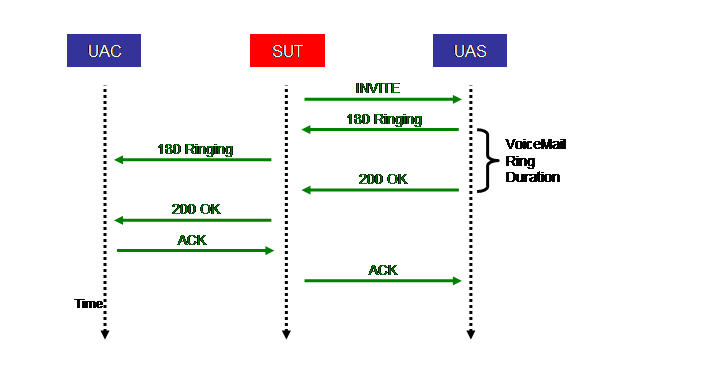

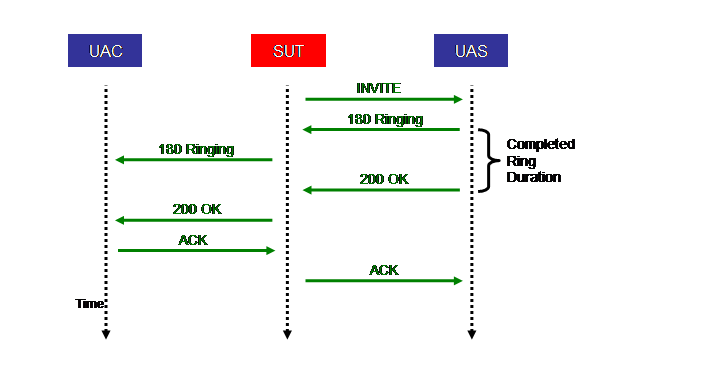

The above Figure shows the call flow of the second stage of the INVITE part of the Completed call scenario. It proceeds as follows:

- The SUT forwards the authenticated INVITE request to the UAS.

- The UAS sends a 180 (Ringing) response, which the SUT forwards back to the UAC.

- The UAS sends a 200 (OK) response, which the SUT forwards back to the UAC.

- The UAC sends an ACK request, which the SUT forwards to the UAS.

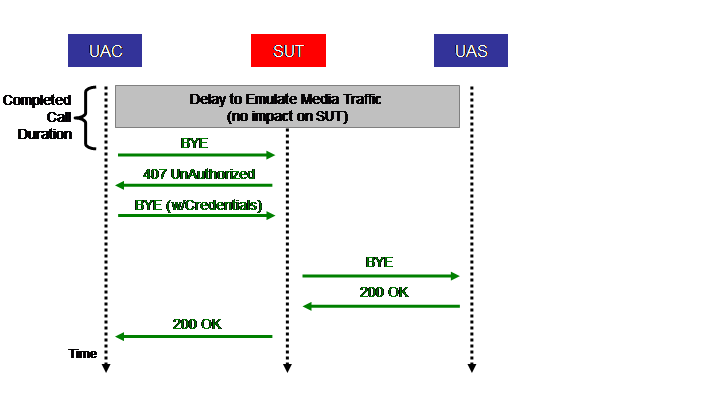

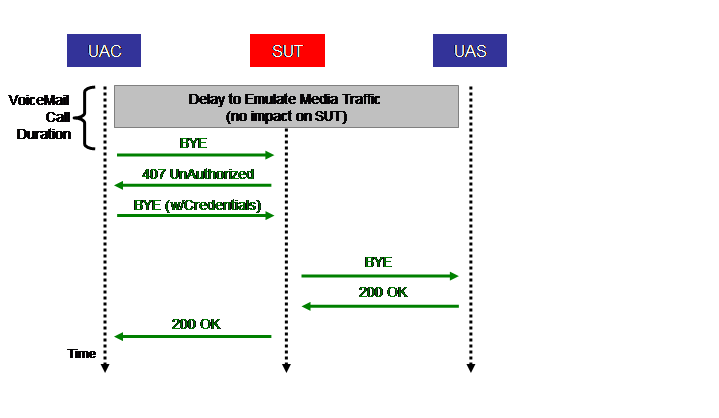

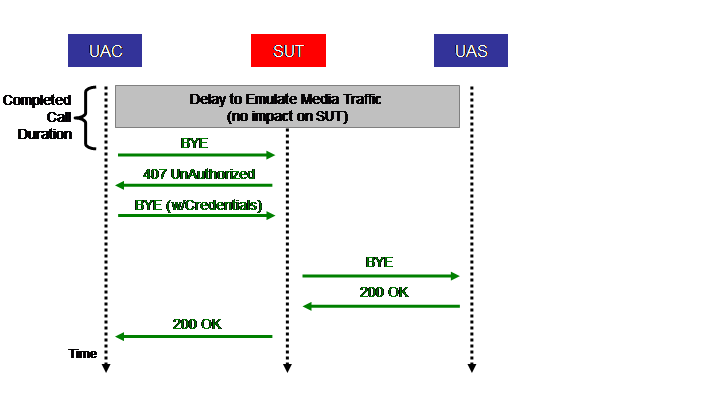

The above Figure shows the call flow of the BYE part of the Completed call scenario. It proceeds as follows:

- The media portion is emulated by the UAC by waiting for CompletedCallDuration. No action is taken by the SUT.

- The UAC generates a BYE request, which is challenged by the SUT with a 407 (Proxy Authentication Required) response.

- The UAC resends the BYE with the proper credentials.

- The SUT extracts the public identity and examines the user profile.

- The SUT verifies the credentials in the resent BYE request, and then forwards the BYE to the UAS.

- The UAS sends a 200 (OK) response, which the SUT forwards back to the UAC.
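For capacity planning it can help to count the SIP messages the SUT handles per completed call. The Python sketch below transcribes the three stages above into a list of (sender, receiver, message, optional) tuples and counts the messages the SUT sends or receives; the representation and names are ours, and the optional 100 (Trying) responses can be included or excluded.

```python
from typing import List, Tuple

# (sender, receiver, message, optional) for each step of the Completed call flow
Message = Tuple[str, str, str, bool]

COMPLETED_CALL_FLOW: List[Message] = [
    # First stage: challenge and authentication of the INVITE
    ("UAC", "SUT", "INVITE", False),
    ("SUT", "UAC", "100 Trying", True),
    ("SUT", "UAC", "407 Proxy Authentication Required", False),
    ("UAC", "SUT", "ACK", False),
    ("UAC", "SUT", "INVITE with credentials", False),
    ("SUT", "UAC", "100 Trying", True),
    # Second stage: forwarding, ringing, answer
    ("SUT", "UAS", "INVITE", False),
    ("UAS", "SUT", "180 Ringing", False),
    ("SUT", "UAC", "180 Ringing", False),
    ("UAS", "SUT", "200 OK", False),
    ("SUT", "UAC", "200 OK", False),
    ("UAC", "SUT", "ACK", False),
    ("SUT", "UAS", "ACK", False),
    # BYE part (after the talk time): challenge, resend, forward
    ("UAC", "SUT", "BYE", False),
    ("SUT", "UAC", "407 Proxy Authentication Required", False),
    ("UAC", "SUT", "BYE with credentials", False),
    ("SUT", "UAS", "BYE", False),
    ("UAS", "SUT", "200 OK", False),
    ("SUT", "UAC", "200 OK", False),
]


def sut_message_count(flow: List[Message], include_optional: bool = False) -> int:
    """Number of messages the SUT sends or receives in a call flow."""
    return sum(1 for sender, receiver, _, optional in flow
               if "SUT" in (sender, receiver) and (include_optional or not optional))


print(sut_message_count(COMPLETED_CALL_FLOW))                         # 17
print(sut_message_count(COMPLETED_CALL_FLOW, include_optional=True))  # 19
```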

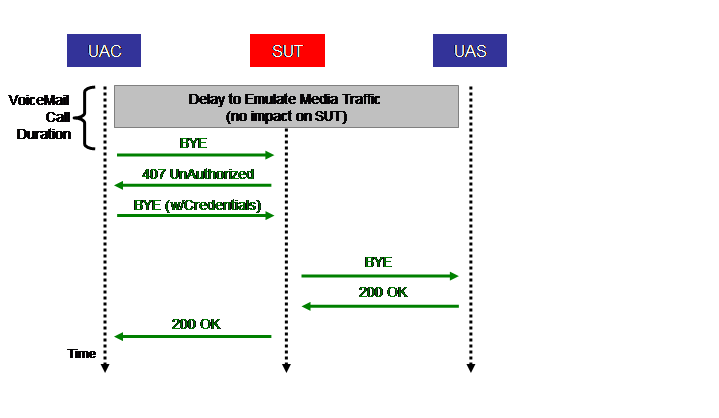

4.5.3 Call Scenario 2: VoiceMail Call

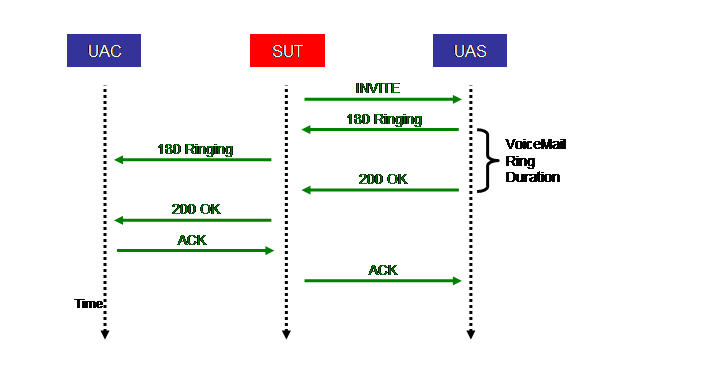

The above Figure shows the call flow of the first stage of the VoiceMail call scenario. It is identical to the first stage of the Completed call scenario in Section 4.5.2. For that reason we do not repeat the description of the steps here.

The above Figure shows the call flow of the second stage of the VoiceMail call scenario. It is identical to the second stage of the Completed call scenario in Section 4.5.2. For that reason we do not repeat the description of the steps here. The one difference is that the ring time is VoiceMailRingDuration, rather than CompletedRingDuration.

The above Figure shows the call flow of the third stage of the VoiceMail call scenario. It is identical to the third stage of the Completed call scenario in Section 4.5.2. For that reason we do not repeat the description of the steps here. The one difference is that the call hold time is VoiceMailCallDuration, rather than CompletedCallDuration.

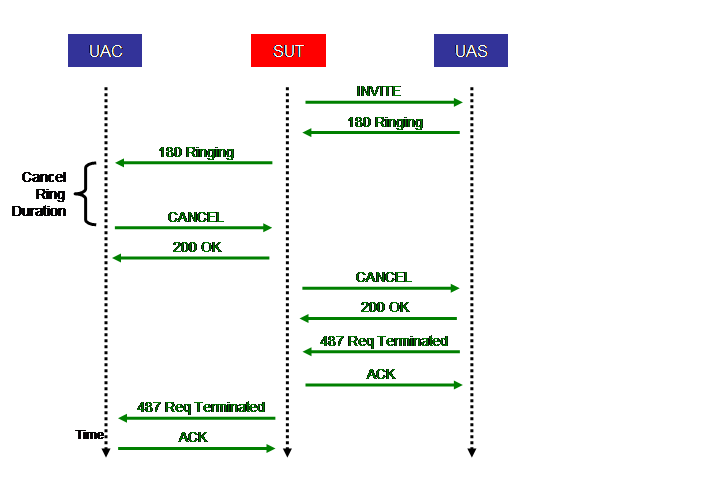

4.5.4 Call Scenario 3: Canceled Call

The above Figure shows the call flow of the first stage of the INVITE part of the Canceled call scenario. It is identical to the first stage of the Completed call scenario in Section 4.5.2. For that reason we do not repeat the description of the steps here.

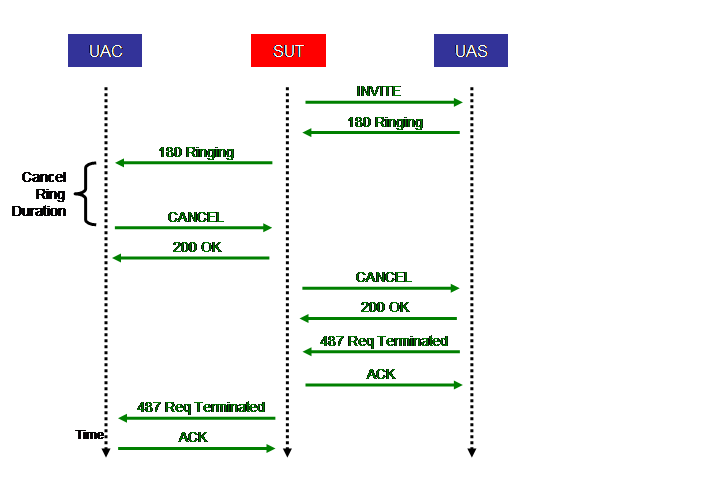

The above Figure shows the call flow for the remainder of the Canceled call scenario. It proceeds as follows:

- The SUT forwards the authenticated INVITE to the UAS.

- The UAS sends a 180 (Ringing) response, which the SUT forwards back to the UAC.

- The UAC reacts to the 180 (Ringing) response by generating a CANCEL request to the SUT.

- The SUT reacts to the CANCEL request by sending a 200 (OK) response to the UAC.

- The SUT sends a CANCEL request to the UAS.

- The UAS reacts to the CANCEL request by sending a 200 (OK) response to the SUT.

- The UAS sends a 487 (Request Terminated) response to the SUT.

- The SUT sends an ACK request to the UAS.

- The SUT sends a 487 (Request Terminated) response to the UAC.

- The UAC sends an ACK request to the SUT.

While it is possible for race conditions to occur (e.g., the UAS picks up before the CANCEL request arrives at the UAS), for simplicity, these are not modeled by SPECsip_Infrastructure2011.
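Combining the call flows of this section gives an average SIP message load per call at the SUT. Counting the messages the SUT sends or receives in the steps described above, and excluding the optional 100 (Trying) responses, a Completed call involves 17 messages (4 in the first INVITE stage, 7 in the second, 6 in the BYE part), a VoiceMail call the same 17 since its flow is identical, and a Canceled call 15 (the same first 4, then 11). The Python sketch below weights these counts by the standard traffic profile; the per-scenario counts are our own tally of the flows as described here, not figures published for the benchmark.

```python
from typing import Dict

# Messages sent or received by the SUT per call, excluding optional 100 (Trying).
SUT_MESSAGES_PER_CALL: Dict[str, int] = {
    "Completed": 4 + 7 + 6,   # INVITE stage 1, INVITE stage 2, BYE part
    "VoiceMail": 4 + 7 + 6,   # same flow as a Completed call
    "Canceled": 4 + 11,       # INVITE stage 1, then the CANCEL exchange
}
CALL_PROFILE: Dict[str, float] = {"Completed": 0.65, "VoiceMail": 0.30, "Canceled": 0.05}


def mean_sut_messages_per_call() -> float:
    """Profile-weighted average number of SUT messages per call."""
    return sum(CALL_PROFILE[s] * n for s, n in SUT_MESSAGES_PER_CALL.items())


print(round(mean_sut_messages_per_call(), 2))   # 16.9
```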

5 Client Workload Generator Details

SIPp is used as the load driver to generate, receive, and respond to SIP messages. SIPp is a de facto standard workload generator for SIP and is available as open source at http://sipp.sourceforge.net/. SIPp supports a wide array of features, including:

- Multiple transports: TCP, UDP, and SSL (TLS)

- Authentication

- UDP retransmissions

- Regular expression matching

- Simple flow control and branching

- RTP replay and echo

SIPp is programmable; its behavior is controlled by an XML scenario file in which a user or device model is specified as a series of messages to be sent and expected responses to be received. The benchmark requires three logical instantiations of SIPp, each of which implements one portion of the user/device model:

- The user agent client (UAC) that initiates new calls.

- The user agent server (UAS) that receives calls.

- The user device emulator (UDE) that registers the IP address of the user.

Each portion is implemented via a specific SIPp XML file. SIPp also supports reporting results at predetermined intervals using a statistics file. The statistics file is like a CSV file, but is delimited by semicolons rather than commas. The statistics file displays the number of created calls, successful calls, failed calls, and response time distributions.
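Because the statistics file is semicolon-delimited, Python's standard `csv` module can read it by passing `delimiter=";"`. The sketch below parses a small illustrative sample and takes the final cumulative counters. The column names are modeled on SIPp's statistics output but are assumptions here and should be checked against the header written by the SIPp version in use. The sample ends each line with a semicolon, which `csv` reads as an extra empty column that the code ignores.

```python
import csv
import io
from typing import Dict, List, Sequence

# Illustrative excerpt; a real statistics file has many more columns.
SAMPLE_STATS = (
    "CurrentTime;TotalCallCreated;SuccessfulCall(C);FailedCall(C);\n"
    "2011-02-07 10:00:00;100;98;0;\n"
    "2011-02-07 10:01:00;220;215;1;\n"
)


def read_stats(text: str) -> List[Dict[str, str]]:
    """Parse a semicolon-delimited statistics file into one dict per row."""
    return list(csv.DictReader(io.StringIO(text), delimiter=";"))


def final_counts(rows: List[Dict[str, str]],
                 columns: Sequence[str] = ("TotalCallCreated",
                                           "SuccessfulCall(C)",
                                           "FailedCall(C)")) -> Dict[str, int]:
    """Cumulative counters from the last reporting interval."""
    last = rows[-1]
    return {c: int(last[c]) for c in columns}


print(final_counts(read_stats(SAMPLE_STATS)))
# {'TotalCallCreated': 220, 'SuccessfulCall(C)': 215, 'FailedCall(C)': 1}
```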

6 SUT Details

The SUT providing the VoIP application is responsible for user location registration, call routing, authentication, and state management. It is a proxy and registrar application that receives, processes, and responds to SIP messages from the load drivers (UAC, UAS, and UDE). In addition, it retrieves and archives the user's registration information and accounting information. It must, of course, conform to RFC 3261.

6.1 SUT Accounting

The SUT is required to log all SIP transactions to disk during the run for accounting and administrative purposes; such logging enables billing and aids in management and capacity planning. This log must be provided to the SPEC SIP Subcommittee when publishing performance results. The format of the log is not specified; instead, the following information is required to be in the log, in any format chosen by the SUT (e.g., a compressed format). When results are submitted, a script must be provided that transforms the log into text that can be analyzed (e.g., a perl or awk script). The following information is logged for every SIP transaction:

| Record Name | Implied Type | Definition |
|---|---|---|
| TransactionType | VARCHAR(16) | BYE, CANCEL, INVITE, REGISTER |
| FinalResponse | INTEGER | Final response code (200, 302, 487) |
| FromUser | VARCHAR(64) | User name |
| ToUser | VARCHAR(64) | User name |
| Call-ID | VARCHAR(256) | Call ID |
| Time | TIMESTAMP NOT NULL | Time the event occurred |

Implied Type means the type of the record that will be produced by the script when it is run on the transaction log. It does not mean that the log must use that type internally. For example, one can imagine using a single byte to encode a transaction type, rather than a variable-length string, for efficiency.

Each SIP transaction (INVITE, BYE, CANCEL, and REGISTER) generates a log entry for that particular transaction. ACK requests do not need to be logged, since they are considered part of the INVITE transaction. Similarly, authentication challenges do not need to be logged, such as the initial REGISTER request that is challenged with a 401 (Unauthorized) response; only the successful REGISTER transaction that completes with a 200 (OK) response needs to be logged.

TransactionType is the SIP transaction being logged; in this test, it will always be INVITE, BYE, CANCEL, or REGISTER. FinalResponse is the final response code (200 or greater) for the transaction; in this test, it should always be 200 (OK), 302 (Moved Temporarily), or 487 (Request Terminated). FromUser is the user the request originates from (the caller). ToUser is the user the request is destined to (the callee). Call-ID is the value of the Call-ID: header used in the transaction, which uniquely identifies the call. Time is the absolute time, in milliseconds, at which the event occurred.

The idea is that, given a stream of events, a provider can produce accounting information by running a post-processing step on the log and reconciling items. This is why the Call-ID is included: to match an INVITE transaction with the corresponding BYE or CANCEL transaction. Given this information, for example, call duration can be calculated and the appropriate accounting and billing performed.
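A minimal sketch of that reconciliation step in Python. It assumes a hypothetical text form produced by the required conversion script: one transaction per line, with the six fields of the table above separated by whitespace in table order. It matches each INVITE that completed with 200 (OK) to the later BYE with the same Call-ID and reports the time between the two logged events in milliseconds; canceled calls (INVITE with 487 plus a CANCEL) produce no duration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Record:
    transaction_type: str
    final_response: int
    from_user: str
    to_user: str
    call_id: str
    time_ms: int


def parse_log(lines: Iterable[str]) -> List[Record]:
    """Parse the assumed whitespace-separated text form of the log."""
    records = []
    for line in lines:
        if not line.strip():
            continue
        ttype, code, from_user, to_user, call_id, time_ms = line.split()
        records.append(Record(ttype, int(code), from_user, to_user,
                              call_id, int(time_ms)))
    return records


def call_durations_ms(records: Iterable[Record]) -> Dict[str, int]:
    """Match answered INVITEs with BYEs by Call-ID and return durations."""
    answered: Dict[str, int] = {}
    durations: Dict[str, int] = {}
    for r in sorted(records, key=lambda rec: rec.time_ms):
        if r.transaction_type == "INVITE" and r.final_response == 200:
            answered[r.call_id] = r.time_ms
        elif r.transaction_type == "BYE" and r.call_id in answered:
            durations[r.call_id] = r.time_ms - answered.pop(r.call_id)
    return durations


LOG = """\
REGISTER 200 erin erin reg-1 500
INVITE 200 alice bob call-1 1000
INVITE 487 carol dave call-2 2000
CANCEL 200 carol dave call-2 9000
BYE 200 alice bob call-1 301000
"""
print(call_durations_ms(parse_log(LOG.splitlines())))   # {'call-1': 300000}
```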

7 Harness Details

The harness is an infrastructure that automates the benchmarking process. It provides an interface for scheduling and launching benchmark runs. It also offers extensive functionality for viewing, comparing and charting results. The harness consists of two types of components: master and client.

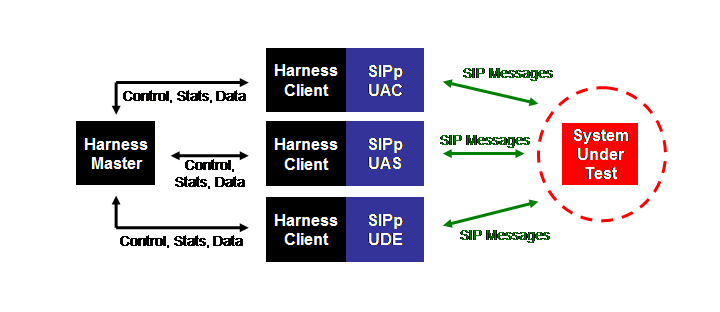

The above Figure shows a more detailed version of the logical architecture presented in Section 2.1. It shows how the harness master communicates with the harness clients, which are co-located with and control the SIPp instances that provide the UAC, UAS, and UDE workload generators. The harness master is the node that controls the experimental setup. It contains a Web server, a run queue, a log server, and a repository of benchmark code and results. The master may run on a client machine or on a separate machine. The Web server provides access to the harness for submitting and managing runs, as well as for accessing the results. The run queue is the main engine for scheduling and controlling benchmark runs. The log server receives logs from all clients in near real time and records them. The harness client invokes and controls each workload generator. It receives commands from the master to deploy the configuration to the workload generators and to collect benchmark results, system information, and statistics. More details about the harness configuration and how to use it can be found in the SPECsip_Infrastructure2011 User Guide and Support FAQ.

8 Summary and Conclusion

This is the first version of the SPECsip_Infrastructure benchmark. As such, we have attempted to keep the design simple, representative, and consistent with the SIP specification. We expect to release improved versions of the benchmark in the future, reflecting better understanding of real-world workloads and experience in running the benchmark itself. We encourage constructive feedback for improving the SPECsip_Infrastructure benchmark.

This document is Copyright ©2011 Standard Performance Evaluation Corporation.