SPEC SFS®2014_vda Result

Copyright © 2016-2021 Standard Performance Evaluation Corporation


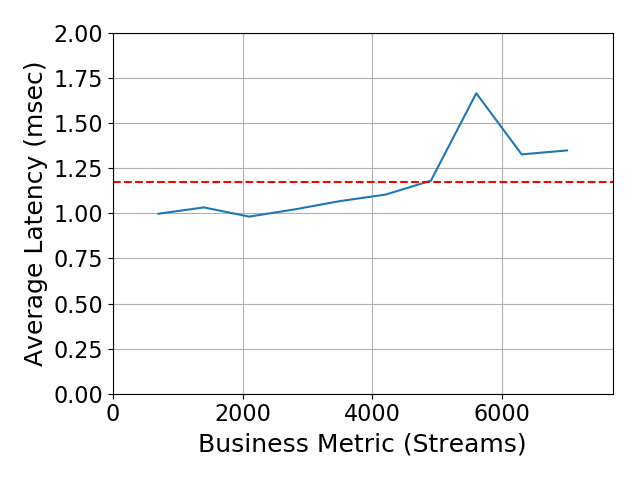

| DDN | SPEC SFS2014_vda = 7000 Streams |
|---|---|
| DDN ES400NVX 2014 VDA | Overall Response Time = 1.17 msec |


| DDN ES400NVX 2014 VDA | |
|---|---|
| Tested by | DDN |
| Hardware Available | Feb-2021 |
| Software Available | Feb-2021 |
| Date Tested | Mar-2021 |
| License Number | 4722 |
| Licensee Locations | Colorado Springs, CO |

Simple appliances for the largest data challenges. Whether you are speeding up financial service analytics, running challenging autonomous vehicle workloads, or looking to boost drug development pipelines, the ES400NVX all flash appliance is a powerful building block for application acceleration. Unlike traditional enterprise storage, DDN EXAScaler solutions offer efficient performance from a parallel filesystem to deliver an optimized data path designed to scale. Building on over a decade of experience deploying storage solutions in the most demanding environments around the world, DDN delivers unparalleled performance, capability and flexibility for users looking to manage and gain insights from massive amounts of data.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 3 | Scalable 2U NVMe Appliance | DDN | DDN ES400NVX | Dual Intel(R) Xeon(R) Gold 6230 CPU @ 2.10GHz |
| 2 | 24 | Network Adapter | NVIDIA (Mellanox) | MCX653106A-ECAT, ConnectX-6 VPI | For HDR100 connections from the EXAScaler servers (OSS/MDS) to the QM8700 switch |
| 3 | 2 | Network Switch | NVIDIA (Mellanox) | QM8700 | 40-port HDR200 network switch |
| 4 | 16 | EXAScaler Clients | SuperMicro | SYS-1028TP-DC0R | Dual Intel Xeon(R) CPU E5-2650 v2 @ 2.60GHz, 128GB memory |
| 5 | 16 | Network Adapter | NVIDIA (Mellanox) | MCX653106A-ECAT, ConnectX-6 VPI | For HDR100 connections from the EXAScaler clients |
| 6 | 72 | NVMe Drives | Samsung | MZWLL3T2HAJQ-00005 | 3.2TB Samsung NVMe drives for storage and the Lustre filesystem |
| 7 | 16 | 10,000 rpm SAS 12Gb/s HDD | Toshiba | AL14SEB030N | 300GB Toshiba drives for client node OS boot |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Storage Appliance Software | DDN SFAOS | 11.8.3 | Storage operating system designed for scalable performance |
| 2 | EXAScaler Parallel Filesystem Software | Parallel Filesystem Software | 5.2.1 | Distributed/parallel file system software that runs on embedded/physical servers |
| 3 | CentOS | Linux OS | CentOS 8.1 | 64-bit operating system for the client nodes |
| 4 | EXAScaler Client Software | Parallel Filesystem Client Node Software | lustre-client-2.12.6_ddn3-1.el8.x86_64 | Distributed/parallel file system software that runs on client/compute nodes |

CPU Performance Setting

| Parameter Name | Value | Description |
|---|---|---|
| Scaling Governor | Performance | Runs the CPU at maximum frequency |

No other hardware tunings or configuration changes were applied to the solution under test.
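The report states only that the Linux scaling governor was set to Performance. The report does not say how this was applied or checked, so the following is an assumption. One way to confirm the setting on a Linux node is to read each CPU's governor from the standard cpufreq sysfs files, as this minimal Python sketch does. The function names are illustrative and are not part of any DDN or SPEC tool.

```python
from pathlib import Path

SYSFS_CPU_ROOT = "/sys/devices/system/cpu"  # standard Linux cpufreq location


def read_governors(root: str = SYSFS_CPU_ROOT) -> dict:
    """Return {cpu_name: governor} for every CPU that exposes cpufreq."""
    governors = {}
    for path in sorted(Path(root).glob("cpu[0-9]*/cpufreq/scaling_governor")):
        cpu_name = path.parent.parent.name  # e.g. "cpu0"
        governors[cpu_name] = path.read_text().strip()
    return governors


def all_performance(root: str = SYSFS_CPU_ROOT) -> bool:
    """True only if at least one CPU was found and every CPU uses 'performance'."""
    governors = read_governors(root)
    return bool(governors) and all(g == "performance" for g in governors.values())


if __name__ == "__main__":
    govs = read_governors()
    print(f"{len(govs)} CPUs found; all on 'performance': {all_performance()}")
```

Taking the sysfs root as a parameter keeps the check testable against a scratch directory. It also returns False, rather than passing vacuously, on hosts that expose no cpufreq data.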

EXAScaler Component Tunings

| Parameter Name | Value | Description |
|---|---|---|
| `osd-ldiskfs.*.writethrough_cache_enable`, `osd-ldiskfs.*.read_cache_enable` | 0 | Disables the writethrough cache and read cache on the OSSs/OSTs |
| `osc.*.max_pages_per_rpc` | 1m | Maximum number of pages per RPC |
| `osc.*.max_rpcs_in_flight` | 8 | Maximum number of RPCs in flight |
| `osc.*.max_dirty_mb` | 1024 | Maximum amount of outstanding dirty data |
| `llite.*.max_read_ahead_mb` | 2048 | Maximum amount of memory reserved for the read-ahead cache |
| `osc.*.checksums` | 0 | Disables network (wire) checksums |

All of the tunings applied are listed in the previous section.
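Lustre parameters like these are normally set with `lctl set_param name=value`. The report does not say how they were applied, so the helper below is a hypothetical sketch. It expands the tuning table into one `lctl set_param` command per parameter. It then groups the commands by where each parameter lives: `osd-ldiskfs.*` on the OSS servers, and `osc.*` and `llite.*` on the clients. `TUNINGS`, `node_role`, and `set_param_commands` are illustrative names.

```python
# Values copied from the EXAScaler Component Tunings table.
TUNINGS = [
    ("osd-ldiskfs.*.writethrough_cache_enable, osd-ldiskfs.*.read_cache_enable", "0"),
    ("osc.*.max_pages_per_rpc", "1m"),
    ("osc.*.max_rpcs_in_flight", "8"),
    ("osc.*.max_dirty_mb", "1024"),
    ("llite.*.max_read_ahead_mb", "2048"),
    ("osc.*.checksums", "0"),
]


def node_role(param: str) -> str:
    """osd-* parameters live on the servers; osc/llite parameters on the clients."""
    return "server" if param.startswith("osd-") else "client"


def set_param_commands(tunings):
    """Expand comma-separated rows into one 'lctl set_param name=value' per parameter."""
    commands = {"server": [], "client": []}
    for names, value in tunings:
        for name in (n.strip() for n in names.split(",")):
            commands[node_role(name)].append(f"lctl set_param {name}={value}")
    return commands


if __name__ == "__main__":
    for role, cmds in set_param_commands(TUNINGS).items():
        print(f"# run on {role} nodes")
        print("\n".join(cmds))
```

Values set this way take effect immediately but are generally not persistent across a remount or reboot. The report does not state whether the values were also made persistent.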


| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 24 NVMe drives in a single DCR pool per ES400NVX appliance. 54.33 TiB per ES400NVX is set aside for data, for a total of 163 TiB of data capacity across the 3 ES400NVX appliances. Data and metadata share the same pool. | DCR RAID 6 [8+2p] | Yes | 72 |
| 2 | 24 NVMe drives in a single DCR pool per ES400NVX appliance. 1 TiB per ES400NVX is set aside for metadata, for a total of 3 TiB of metadata capacity across the 3 ES400NVX appliances. Data and metadata share the same pool. | DCR RAID 6 [8+2p] | Yes | 72 |
| 3 | Drives for client OS boot, 16 in total | None | No | 16 |

| Number of Filesystems | 1 |
|---|---|
| Total Capacity | 163 TiB |
| Filesystem Type | EXAScaler File System |
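The capacity figures can be cross-checked with simple arithmetic. The sketch below makes two assumptions:

- The 3.2TB drive rating is a nominal decimal value (10^12 bytes per TB).
- RAID 6 [8+2p] stores 8 data units per 10, so usable space is at most 80% of raw.

The constant and function names are illustrative.

```python
TB = 10**12   # drive capacities are quoted in decimal terabytes (assumed nominal)
TIB = 2**40   # filesystem capacity is reported in tebibytes

APPLIANCES = 3
DRIVES_PER_APPLIANCE = 24
DRIVE_TB = 3.2
RAID_DATA, RAID_PARITY = 8, 2          # DCR RAID 6 [8+2p]
DATA_TIB_PER_APPLIANCE = 54.33         # from the stable storage table
META_TIB_PER_APPLIANCE = 1.0


def raw_tib_per_appliance() -> float:
    return DRIVES_PER_APPLIANCE * DRIVE_TB * TB / TIB


def parity_efficiency() -> float:
    return RAID_DATA / (RAID_DATA + RAID_PARITY)


def summary() -> dict:
    raw = raw_tib_per_appliance()
    return {
        "raw_tib_per_appliance": round(raw, 2),
        "after_parity_tib_per_appliance": round(raw * parity_efficiency(), 2),
        "configured_tib_per_appliance": DATA_TIB_PER_APPLIANCE + META_TIB_PER_APPLIANCE,
        "total_data_tib": round(DATA_TIB_PER_APPLIANCE * APPLIANCES, 2),
        "total_metadata_tib": META_TIB_PER_APPLIANCE * APPLIANCES,
    }


if __name__ == "__main__":
    for key, value in summary().items():
        print(f"{key}: {value}")
```

This gives about 69.85 TiB raw and 55.88 TiB after parity per appliance. The configured amount per appliance is 55.33 TiB (54.33 TiB data plus 1 TiB metadata). The report does not itemize the remaining roughly 0.55 TiB; it may go to DCR spare space or other overhead, but that is an assumption. The data space of 3 × 54.33 TiB = 162.99 TiB matches the reported total of 163 TiB.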

Eight virtual disks (RAID objects) for OST use and four virtual disks (RAID objects) for MDT use were created from a single DCR pool of 24 NVMe drives in each ES400NVX appliance. The complete solution consisted of 24 OSTs and 12 MDTs.
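The target counts follow from 8 OSTs and 4 MDTs per appliance across 3 appliances. The report does not say how targets were assigned to the 4 VMs in each appliance. The sketch below therefore assumes a simple round-robin assignment, which gives 2 OSTs and 1 MDT per VM. The VM names are hypothetical. The OSTxxxx/MDTxxxx names follow Lustre's hexadecimal target-index convention.

```python
from collections import Counter

APPLIANCES = 3
VMS_PER_APPLIANCE = 4
OSTS_PER_APPLIANCE = 8
MDTS_PER_APPLIANCE = 4
DATA_TIB_PER_APPLIANCE = 54.33


def build_layout() -> dict:
    """Map each target name to a hypothetical VM name, round-robin per appliance."""
    layout = {}
    for a in range(APPLIANCES):
        vms = [f"nvx{a}-vm{v}" for v in range(VMS_PER_APPLIANCE)]  # hypothetical names
        for i in range(OSTS_PER_APPLIANCE):
            layout[f"OST{a * OSTS_PER_APPLIANCE + i:04x}"] = vms[i % VMS_PER_APPLIANCE]
        for i in range(MDTS_PER_APPLIANCE):
            layout[f"MDT{a * MDTS_PER_APPLIANCE + i:04x}"] = vms[i % VMS_PER_APPLIANCE]
    return layout


if __name__ == "__main__":
    layout = build_layout()
    print("targets by type:", Counter(name[:3] for name in layout))
    print("targets per VM:", Counter(layout.values()))
    print(f"mean data capacity per OST: {DATA_TIB_PER_APPLIANCE / OSTS_PER_APPLIANCE:.2f} TiB")
```

The script also prints a mean of about 54.33 / 8 ≈ 6.79 TiB of data space per OST. This further assumes the eight OST virtual disks in each appliance are the same size, which the report does not state.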

Declustered RAID (DCR) is a RAID system in which physical disks (PDs) are logically divided into smaller pieces known as physical disk extents (PDEs). A RAIDset is allocated from PDEs chosen randomly across a large number of drives, so that the RAIDset is distributed across as many physical drives as possible. The Configurable RAID Group Sizes feature lets users configure the system with the desired RAID geometry (in this case, 10-disk RAID 6 groups) and redundancy level based on data requirements. Each RAID group is configured independently, and users can select any valid number of disks for each RAID group.
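The sketch below illustrates the declustered placement described above. It models drives split into extents and builds each 10-wide (8+2) RAID set from extents on 10 distinct drives. It is a simplified model, not SFAOS's actual algorithm. The selection rule (prefer the drives with the most free extents, break ties randomly) and the extent counts are assumptions. `rebuild_partners` shows the property the report credits for fast rebuilds: the RAID sets touching a failed drive draw on many other drives rather than on one fixed group.

```python
import random
from collections import Counter


def allocate_raidsets(num_drives, extents_per_drive, group_width, num_sets, seed=0):
    """Build `num_sets` RAID sets, each from one extent on `group_width` distinct drives.

    Selection rule (an assumption for this sketch): prefer the drives with the most
    free extents, breaking ties randomly, so usage stays balanced across all drives.
    """
    rng = random.Random(seed)
    free = [extents_per_drive] * num_drives
    raidsets = []
    for _ in range(num_sets):
        candidates = [d for d in range(num_drives) if free[d] > 0]
        if len(candidates) < group_width:
            raise ValueError("not enough drives with free extents")
        candidates.sort(key=lambda d: (-free[d], rng.random()))
        members = candidates[:group_width]
        for d in members:
            free[d] -= 1
        raidsets.append(sorted(members))
    return raidsets


def rebuild_partners(raidsets, failed_drive):
    """Count, per surviving drive, the extents it holds in RAID sets hit by the failure."""
    partners = Counter()
    for members in raidsets:
        if failed_drive in members:
            partners.update(d for d in members if d != failed_drive)
    return partners


if __name__ == "__main__":
    # 24 drives and 10-wide (8+2) groups as in each ES400NVX pool; extent counts are made up.
    sets = allocate_raidsets(num_drives=24, extents_per_drive=100, group_width=10, num_sets=200)
    usage = Counter(d for members in sets for d in members)
    print("extents used per drive:", min(usage.values()), "to", max(usage.values()))
    partners = rebuild_partners(sets, failed_drive=0)
    print(f"a failure of drive 0 spreads rebuild reads over {len(partners)} drives")
```

Because each RAID set always takes the drives with the most free extents, the per-drive extent usage never differs by more than one. This is the "spread across as many physical drives as possible" behavior described above, reduced to its simplest form.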

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 100 Gb/s HDR100 | 24 | LNet (Lustre Network Communication Protocol) for EXAScaler MDS/OSS |
| 2 | 100 Gb/s HDR100 | 16 | LNet for EXAScaler clients |
| 3 | 100 Gb/s HDR100 | 8 | ISL between switches |


| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | QM8700 | HDR200/HDR100 | 40 | 16 | EXAScaler clients |
| 2 | QM8700 | HDR200/HDR100 | 40 | 24 | EXAScaler MDS/OSS |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 6 | CPU | ES400NVX | Dual Intel(R) Xeon(R) Gold 6230 CPU @ 2.10GHz | Storage platform |
| 2 | 16 | CPU | Clients | Dual Intel(R) Xeon(R) CPU E5-2660 v4 @ 2.00GHz | EXAScaler client nodes |


| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 120 | Virtual Cores | EXAScaler VMs/OSS | Virtual cores from the ES400NVX appliances assigned to the EXAScaler MDS/OSS | EXAScaler MDS/OSS |


| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Memory for ES400NVX SFAOS and cache | 90 | 6 | NV | 540 |
| Memory set aside for each ES400NVX VM acting as an EXAScaler OSS/MDS | 150 | 12 | V | 1800 |
| Memory per EXAScaler client node | 128 | 16 | V | 2048 |
| Grand Total Memory Gibibytes | | | | 4388 |
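Each row's total is its size multiplied by its number of instances. This minimal sketch reproduces that arithmetic from the table's values. The row labels are shortened, and `memory_totals` is an illustrative name.

```python
# (description, size in GiB, number of instances, nonvolatile?)
MEMORY_ROWS = [
    ("ES400NVX SFAOS and cache", 90, 6, True),
    ("ES400NVX VM acting as EXAScaler OSS/MDS", 150, 12, False),
    ("EXAScaler client node", 128, 16, False),
]


def memory_totals(rows):
    """Return per-row totals, the grand total, and the nonvolatile total, all in GiB."""
    per_row = {desc: size * count for desc, size, count, _ in rows}
    nonvolatile = sum(size * count for _, size, count, nv in rows if nv)
    return per_row, sum(per_row.values()), nonvolatile


if __name__ == "__main__":
    per_row, grand_total, nv_total = memory_totals(MEMORY_ROWS)
    for desc, gib in per_row.items():
        print(f"{desc}: {gib} GiB")
    print(f"grand total: {grand_total} GiB ({nv_total} GiB nonvolatile)")
```

This yields 540, 1800 and 2048 GiB per row and a grand total of 4388 GiB, matching the table. Of that total, 540 GiB is the memory marked NV.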

150 GiB of memory is assigned to each of the VMs on the ES400NVX platform. These VMs function as OSS/MDS nodes within the EXAScaler filesystem.

SFAOS DCR (declustered RAID) uses a large number of drives within a single configuration to achieve fast rebuild times by spreading the load across many drives. SFAOS can also use DCR to set aside drives for extra redundancy on top of the RAID architecture. Other features include redundant power supplies, battery backups, partial rebuilds, dual active-active controllers, and online upgrades of drives, SFAOS, and BIOS. SFAOS also supports transfer of ownership, including of RAID devices, between the controllers, and between the VMs within the SFA platform and the EXAScaler filesystem.

The SUT configuration used DDN's fastest NVMe storage offering, the ES400NVX, combined with the EXAScaler parallel filesystem. Four VMs residing in each ES400NVX (12 in total) acted as both metadata servers and object storage servers. They used 24 NVMe drives per appliance (72 in total) to create the Metadata Targets (MDTs) and Object Storage Targets (OSTs) of the EXAScaler filesystem. Sixteen physical servers acted as client nodes, participating in the SPEC SFS benchmark.


DCR RAID objects (pools and virtual disks) were used by the ES400NVX VMs (OSS and MDS) to create a single shared namespace (the EXAScaler filesystem). Network and bulk data communication between the nodes within the filesystem (LNet, the Lustre Network Communication Protocol) was carried over HDR100 interfaces. Client nodes shared the same namespace/filesystem through their own HDR100 connections to the NVIDIA (Mellanox) QM8700 switch.

Spectre mitigations were turned off using the kernel boot parameters spectre_v2=off and nopti.
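On Linux, `/proc/cmdline` shows the parameters a node booted with. The report does not describe how these parameters were verified. As a minimal sketch, the function below checks a kernel command line for the two parameters named above. `mitigation_params` is an illustrative name.

```python
def mitigation_params(cmdline: str) -> dict:
    """Report whether each boot parameter named in the report appears as a whole token."""
    tokens = cmdline.split()
    return {param: param in tokens for param in ("spectre_v2=off", "nopti")}


if __name__ == "__main__":
    try:
        with open("/proc/cmdline") as f:
            print(mitigation_params(f.read()))
    except OSError:
        print("/proc/cmdline not available (not a Linux host?)")
```

Matching whole tokens rather than substrings keeps a parameter such as spectre_v2=auto from being mistaken for spectre_v2=off.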


Generated on Tue Jun 29 17:09:52 2021 by SpecReport
