SPEC SFS®2014_eda Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


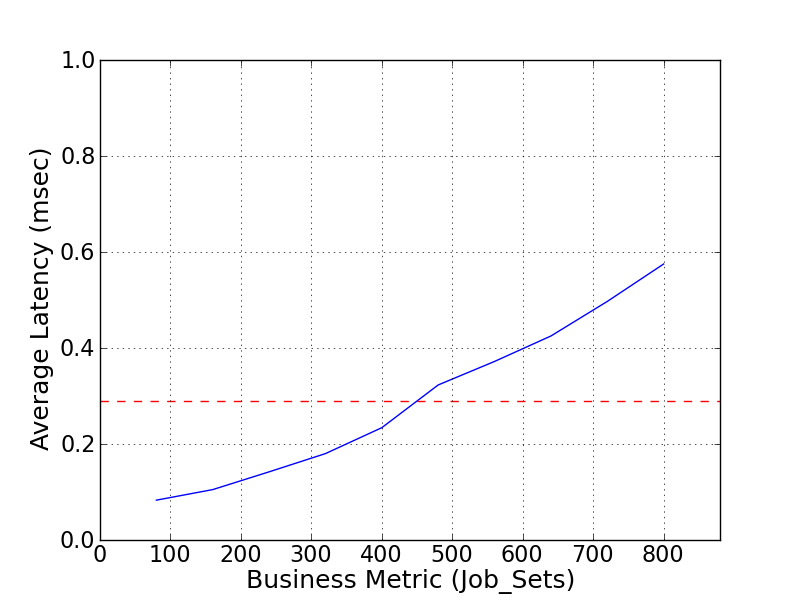

| DDN Storage | SPEC SFS2014_eda = 800 Job_Sets |

|---|---|

| SFA14KX with GridScaler | Overall Response Time = 0.29 msec |


| SFA14KX with GridScaler | |

|---|---|

| Tested by | DDN Storage |

| Hardware Available | 08 2018 |

| Software Available | 09 2018 |

| Date Tested | 09 2018 |

| License Number | 4722 |

| Licensee Locations | Santa Clara |

To address the comprehensive needs of High Performance Computing and Analytics environments, the revolutionary DDN SFA14KX Hybrid Storage Platform is the highest performance architecture in the industry, delivering up to 60GB/s of throughput and extreme IOPS at low latency, with industry-leading density in a single 4U appliance. By integrating the latest high-performance technologies, from silicon to interconnect, memory, and flash, along with DDN's SFAOS, a real-time storage engine designed for scalable performance, the SFA14KX outperforms everything on the market. Leveraging over a decade of leadership in the highest end of Big Data, the GRIDScaler parallel file system solution running on the SFA14KX provides flexible choices for enterprise-grade data protection, availability features, and the performance of a parallel file system, coupled with DDN's deep expertise and history of supporting highly efficient, large-scale deployments.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 1 | Storage Appliance | DDN Storage | SFA14KX (FC) | Dual Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz |

| 2 | 25 | Network Adapter | Mellanox | ConnectX- VPI (MCX4121A-XCAT) | Dual-port QSFP, EDR IB (100Gb/s) / 100GigE, PCIe 3.0 x16 8GT/s |

| 3 | 6 | GridScaler Server | Supermicro | SYS-1027R-WC1RT | Dual Intel(R) Xeon(R) CPU E5-2667 v3 @ 3.20GHz, 96GB of memory per Server |

| 4 | 19 | GridScaler Clients | Supermicro | SYS-1027R-WC1RT | Dual Intel Xeon(R) CPU E5-2650 v2 @ 2.60GHz, 128GB of memory per client |

| 5 | 2 | Switch | Mellanox | SB7700 | 36-port EDR InfiniBand switch |

| 6 | 72 | Drives | HGST | SDLL1DLR400GCCA1 | HGST Hitachi Ultrastar SS200 400GB MLC SAS 12Gbps Mixed Use (SE) 2.5-inch Internal Solid State Drive (SSD) |

| 7 | 50 | Drives | Toshiba | AL14SEB030N | AL14SEB-N Enterprise Performance Boot HDD |

| 8 | 6 | FC Adapter | QLogic | QLogic QLE2742 | QLogic 32Gb 2-port FC to PCIe Gen3 x8 Adapter |

| Item No | Component | Type | Name and Version | Description |

|---|---|---|---|---|

| 1 | Server Nodes | Distributed Filesystem | GRIDScaler 5.0.2 | Distributed file system software that runs on server nodes. |

| 2 | Client Nodes | Distributed Filesystem | GRIDScaler 5.0.2 | Distributed file system software that runs on client nodes. |

| 3 | Client Nodes | Operating System | RHEL 7.4 | The Client operating system - 64-bit Red Hat Enterprise Linux version 7.4. |

| 4 | Storage Appliance | Storage Appliance | SFAOS 11.1.0 | SFAOS - real-time storage Operating System designed for scalable performance. |

| GRIDScaler NSD Server configuration | | |

|---|---|---|

| Parameter Name | Value | Description |

| nsdBufSpace | 70 | The parameter nsdBufSpace specifies the percent of pagepool which can be utilized for NSD IO buffers. |

| nsdMaxWorkerThreads | 1536 | The parameter nsdMaxWorkerThreads sets the maximum number of NSD threads on an NSD server that will be concurrently transferring data with NSD clients. |

| pagepool | 4g | The Pagepool parameter determines the size of the file data cache. |

| nsdSmallThreadRatio | 3 | The parameter nsdSmallThreadRatio determines the ratio of the number of NSD server queues for small I/Os (by default, less than 64KiB) to the number of NSD server queues that handle large I/Os (> 64KiB). |

| nsdThreadsPerQueue | 8 | The parameter nsdThreadsPerQueue determines the number of threads assigned to process each NSD server IO queue. |

| GRIDScaler common configuration | | |

| Parameter Name | Value | Description |

| maxMBpS | 30000 | The maxMBpS option is an indicator of the maximum throughput in megabytes that can be submitted per second into or out of a single node. |

| ignorePrefetchLUNCount | yes | Specifies that only maxMBpS and not the number of LUNs should be used to dynamically allocate prefetch threads. |

| verbsRdma | enable | Enables the use of RDMA for data transfers. |

| verbsRdmaSend | yes | Enables the use of verbs send/receive for data transfers. |

| verbsPorts | mlx5_0/1 8x | Lists the Ports used for the communication between the nodes. |

| workerThreads | 1024 | The workerThreads parameter controls an integrated group of variables that tune the file system performance in environments that are capable of high sequential and random read and write workloads and small file activity. |

| maxReceiverThreads | 64 | The maxReceiverThreads parameter is the number of threads used to handle incoming network packets. |
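As a rough illustration of how two of the NSD server values above relate, the sketch below computes how much of the server pagepool nsdBufSpace makes available for NSD I/O buffers. It assumes that the `4g` pagepool value means 4 GiB; the variable names are illustrative and are not GRIDScaler identifiers.

```python
# Values taken from the NSD server configuration table.
PAGEPOOL_GIB = 4          # pagepool = 4g (assumed to mean 4 GiB)
NSD_BUF_SPACE_PCT = 70    # nsdBufSpace = 70 (percent of pagepool)
NUM_SERVERS = 6           # GridScaler servers in the tested configuration

# Portion of each server's pagepool usable for NSD I/O buffers.
nsd_buffer_gib = PAGEPOOL_GIB * NSD_BUF_SPACE_PCT / 100

# Aggregate across all servers.
total_nsd_buffer_gib = nsd_buffer_gib * NUM_SERVERS

print(f"NSD buffer space per server: {nsd_buffer_gib:.1f} GiB")
print(f"NSD buffer space, all servers: {total_nsd_buffer_gib:.1f} GiB")
```

Under these assumptions, each server can dedicate roughly 2.8 GiB of its pagepool to NSD buffers.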

None

| GridScaler Clients | | |

|---|---|---|

| Parameter Name | Value | Description |

| maxFilesToCache | 4m | The maxFilesToCache (MFTC) parameter controls how many file descriptors (inodes) each node can cache. |

| maxStatCache | 40m | The maxStatCache parameter sets aside pageable memory to cache attributes of files that are not currently in the regular file cache. |

| pagepool | 16g | The Pagepool parameter determines the size of the file data cache. |

| openFileTimeout | 86400 | Determines the maximum number of seconds that inode information is allowed to stay in cache after the last open of the file before it is discarded. |

| maxActiveIallocSegs | 8 | Determines how many inode allocation segments an individual client is allowed to select free inodes from in parallel. |

| syncInterval | 30 | Specifies the interval (in seconds) in which data that has not been explicitly committed by the client is synced systemwide. |

| prefetchAggressiveness | 1 | Defines how aggressively to prefetch data. |

A detailed description of the configuration and tuning options can be found at https://www.ibm.com/developerworks/community/wikis/home?lang=ja#!/wiki/General%20Parallel%20File%20System%20(GPFS)/page/Tuning%20Parameters

None

| Item No | Description | Data Protection | Stable Storage | Qty |

|---|---|---|---|---|

| 1 | 72 SSDs in SFA14KX (FC) | DCR 8+2p | Yes | 72 |

| 2 | 50 mirrored internal 300GB 10K SAS Boot drives | RAID-1 | No | 50 |

| Number of Filesystems | 1 |

|---|---|

| Total Capacity | 20.3 TiB |

| Filesystem Type | GRIDScaler |

A single filesystem with a 1MB blocksize (no separate metadata disks) was created in scatter allocation mode. The filesystem inode limit was set to 1.5 billion.

The SFA14KX had 24 8+2p virtual disks with a 128KB strip size, created out of a single 72-drive DCR storage pool.
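The storage layout can be sanity-checked with a back-of-the-envelope estimate. The sketch below derives an upper bound on usable capacity from the 72 drives of 400 GB each and the 8+2p protection scheme. It assumes decimal gigabytes for the drive size and ignores spare capacity, formatting, and filesystem overhead, none of which the report itemizes, so the result is expected to sit above the reported 20.3 TiB.

```python
# Figures from the drive and storage tables.
DRIVES = 72
DRIVE_GB = 400                    # decimal gigabytes per SSD (assumption)
DATA_STRIPS, PARITY_STRIPS = 8, 2  # DCR 8+2p

raw_bytes = DRIVES * DRIVE_GB * 10**9

# Fraction of each stripe that holds data rather than parity.
usable_bytes = raw_bytes * DATA_STRIPS // (DATA_STRIPS + PARITY_STRIPS)

usable_tib = usable_bytes / 2**40

print(f"raw capacity:    {raw_bytes / 10**12:.1f} TB")
print(f"after 8+2p:      {usable_bytes / 10**12:.2f} TB")
print(f"upper bound:     {usable_tib:.2f} TiB")
```

The estimate is only an upper bound; the report does not break down the gap between it and the stated total capacity.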

| Item No | Transport Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | 100Gb EDR | 25 | mlx5_0 |

| 2 | 16 Gb FC | 24 | FC0-3 |

2x 36-port switches in a single InfiniBand fabric, with 8 ISLs between them. Management traffic used IPoIB; data traffic used RDMA on the same physical adapter.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |

|---|---|---|---|---|---|

| 1 | Client Mellanox SB7700 | 100Gb EDR | 36 | 19 | The default configuration was used on the switch |

| 2 | Server Mellanox SB7700 | 100Gb EDR | 36 | 6 | The default configuration was used on the switch |
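To put the fabric layout in perspective, the following sketch compares the nominal aggregate bandwidth of the client links, the inter-switch links, and the server links. It assumes that all 19 clients attach to one switch and all 6 servers to the other (as the switch names suggest), that every link runs at the nominal 100 Gb/s EDR rate, and that protocol overhead can be ignored; the stage names are illustrative.

```python
# Nominal link rate and port counts from the transport and switch tables.
EDR_GBPS = 100
CLIENT_PORTS = 19
SERVER_PORTS = 6
ISL_COUNT = 8

# Assumption: clients sit on one switch, servers on the other,
# so client-server traffic crosses the inter-switch links.
stages_gbps = {
    "client links": CLIENT_PORTS * EDR_GBPS,
    "inter-switch links": ISL_COUNT * EDR_GBPS,
    "server links": SERVER_PORTS * EDR_GBPS,
}

# The stage with the lowest aggregate rate bounds end-to-end throughput.
bottleneck = min(stages_gbps, key=stages_gbps.get)

for name, gbps in stages_gbps.items():
    print(f"{name}: {gbps} Gb/s ({gbps / 8:.1f} GB/s)")
print(f"narrowest stage: {bottleneck}")
```

Under these assumptions the 8 ISLs offer more nominal bandwidth than the 6 server links, so the inter-switch connection would not be the narrowest point of the InfiniBand fabric.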

| Item No | Qty | Type | Location | Description | Processing Function |

|---|---|---|---|---|---|

| 1 | 2 | CPU | SFA14KX | Dual Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz | Storage unit |

| 2 | 19 | CPU | client nodes | Dual Intel Xeon(R) CPU E5-2650 v2 @ 2.60GHz | Filesystem client, load generator |

| 3 | 6 | CPU | server nodes | Dual Intel(R) Xeon(R) CPU E5-2667 v3 @ 3.20GHz | GRIDScaler Server |

None

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |

|---|---|---|---|---|

| Cache in SFA System | 128 | 2 | NV | 256 |

| GRIDScaler client node system memory | 128 | 19 | V | 2432 |

| GRIDScaler Server node system memory | 96 | 6 | V | 576 |

| Grand Total Memory Gibibytes | | | | 3264 |
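The grand total follows directly from the per-row products in the table. A minimal sketch of that arithmetic, with the row data copied from the table and illustrative variable names:

```python
# (description, size in GiB, number of instances) from the memory table.
memory_rows = [
    ("Cache in SFA System", 128, 2),
    ("GRIDScaler client node system memory", 128, 19),
    ("GRIDScaler Server node system memory", 96, 6),
]

# Total GiB per row is size multiplied by instance count.
row_totals = {name: size * count for name, size, count in memory_rows}
grand_total_gib = sum(row_totals.values())

for name, total in row_totals.items():
    print(f"{name}: {total} GiB")
print(f"Grand total: {grand_total_gib} GiB")
```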

The GRIDScaler clients use a portion of the memory (configured via the pagepool and file cache parameters) to cache metadata and data. The GRIDScaler servers use a portion of the memory (configured via pagepool) for write buffers. In the SFA14KX, some portion of memory is used for the SFAOS operating system as well as data caching.

SFAOS with Declustered RAID performs rapid rebuilds, spreading the rebuild process across many drives. SFAOS also supports a range of features that improve uptime for large-scale systems, including partial rebuilds, enclosure redundancy, dual active-active controllers, online upgrades, and more. The SFA14KX (FC) has built-in backup battery power support to allow destaging of cached data to persistent storage in case of a power outage. The system does not require further battery power after the destage process has completed. All servers and the SFA14KX are redundantly configured. All 6 servers have access to all data shared by the SFA14KX. In the event of the loss of a server, that server's data will be failed over automatically to a remaining server with continued production service. Stable writes and commit operations in GRIDScaler are not acknowledged until the NSD server receives an acknowledgment of write completion from the underlying storage system (SFA14KX).

The solution under test used a GRIDScaler cluster optimized for small-file, metadata-intensive workloads. The clients served as filesystem clients as well as load generators for the benchmark. The benchmark was executed from one of the server nodes. None of the components used to perform the test were patched with Spectre or Meltdown patches (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).

None

All 19 clients were used to generate workload against a single filesystem mountpoint (single namespace) accessible as a local mount on all clients. The GRIDScaler servers received the requests from the clients and processed the read or write operations against all connected DCR-backed VDs in the SFA14KX.

GRIDScaler is a trademark of DataDirect Networks in the U.S. and/or other countries. Intel and Xeon are trademarks of the Intel Corporation in the U.S. and/or other countries. Mellanox is a registered trademark of Mellanox Ltd.

None

Generated on Wed Mar 13 16:26:03 2019 by SpecReport
