SPEC SFS®2014_swbuild Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


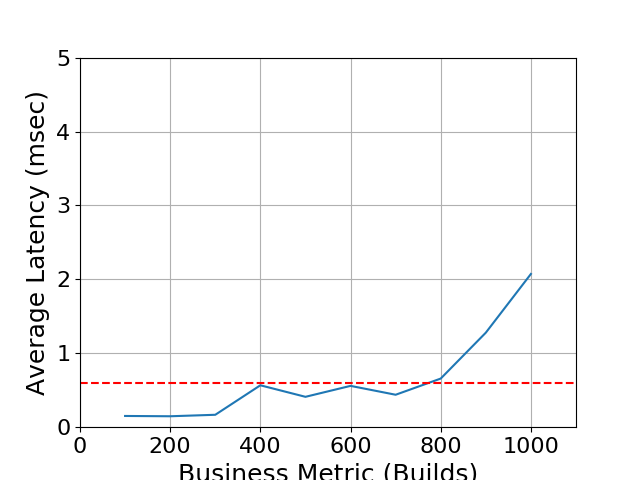

| Huawei | SPEC SFS2014_swbuild = 1000 Builds |
|---|---|
| Huawei OceanStor 6800F V5 | Overall Response Time = 0.59 msec |


| Huawei OceanStor 6800F V5 | |
|---|---|
| Tested by | Huawei |
| Hardware Available | 04/2018 |
| Software Available | 04/2018 |
| Date Tested | 04/2018 |
| License Number | 3175 |
| Licensee Locations | Chengdu, China |

Huawei's OceanStor 6800F V5 Storage System is the new generation of mission-critical all-flash storage, dedicated to providing the highest level of data services for enterprises' mission-critical business. Its flexible scalability, flash-enabled performance, and hybrid-cloud-ready architecture provide optimal data services for enterprises, along with simple and agile management.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Storage Array | Huawei | OceanStor 6800F V5 All Flash System (Four Active-Active Controllers) | A single Huawei OceanStor 6800F V5 engine includes 4 controllers and provides full 4-controller redundancy. Each controller includes 1TB of memory and two 4-port 10GbE Smart I/O Modules; all 8 ports are used for data (connections to load generators). The engine includes two 12-port SAS I/O modules; 4 ports on each SAS I/O module are used to connect to the disk enclosures. Includes the Premium Bundle (NFS, CIFS, NDMP, SmartQuota, HyperClone, HyperSnap, HyperReplication, HyperMetro, SmartQoS, SmartPartition, SmartDedupe, SmartCompression); only the NFS protocol license was used in the test. |
| 2 | 60 | Disk drive | Huawei | SSDM-900G2S-02 | 900GB SSD SAS disk unit (2.5"); each disk enclosure held 15 SSDs in the test. |
| 3 | 4 | Disk Enclosure | Huawei | 2U SAS disk enclosure | 2U, AC\240HVDC, 2.5", Expanding Module, 25 Disk Slots. The disks were installed in the disk enclosures, and the enclosures were connected directly to the storage controllers. |
| 4 | 16 | 10GbE HBA card | Intel | Intel Corporation 82599ES 10-Gigabit SFI/SFP+ | Used in the clients for data connections to storage; each client used two 10GbE cards with 2 ports each. |
| 5 | 8 | Client | Huawei | Huawei FusionServer RH2288 V3 servers | Huawei servers, each with 128GB of main memory. One was used as the Prime Client; all 8, including the Prime Client, were used to generate the workload. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Linux | OS | SUSE Linux Enterprise Server 12 SP3 (x86_64) with kernel 4.4.73-5-default | OS for the 8 clients |
| 2 | OceanStor | Storage OS | V500R007 | Storage Operating System |

| Client | | |
|---|---|---|
| Parameter Name | Value | Description |
| MTU | 9000 | Jumbo Frames configured for 10Gb ports |

The clients' 10Gb ports were used for connections to the storage controllers. Only the clients were configured for jumbo frames; the storage used the default MTU (1500).
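Because only one end of each link used a 9000-byte MTU, it may help to see how TCP typically sizes segments across such a mismatch. The sketch below is a simplified model that assumes IPv4, TCP headers without options (20 + 20 bytes), and ordinary MSS advertisement with Path MTU Discovery ignored; the function names are hypothetical, and nothing here was measured in this test.

```python
# Simplified model of TCP segment sizing when the two ends of a link use
# different MTUs. Assumes IPv4 and TCP headers without options; ignores
# Path MTU Discovery.
IPV4_HEADER = 20
TCP_HEADER = 20


def mss_for_mtu(mtu: int) -> int:
    """MSS a host would typically advertise for a given interface MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def effective_mss(client_mtu: int, storage_mtu: int) -> int:
    """Each sender is limited by its own MTU and by the MSS its peer
    advertises, so both directions end up capped by the smaller value."""
    return min(mss_for_mtu(client_mtu), mss_for_mtu(storage_mtu))


client_mtu, storage_mtu = 9000, 1500  # MTU values stated in this report
print(mss_for_mtu(client_mtu), mss_for_mtu(storage_mtu),
      effective_mss(client_mtu, storage_mtu))
```

Under these assumptions, TCP segments in both directions would be limited to the size implied by the 1500-byte storage MTU.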

| Clients | | |
|---|---|---|
| Parameter Name | Value | Description |
| rsize,wsize | 1048576 | NFS mount options for data block size |
| protocol | tcp | NFS mount options for protocol |
| tcp_fin_timeout | 600 | TCP time to wait for final packet before socket closed |
| nfsvers | 3 | NFS mount options for NFS version |

The mount command `mount -t nfs -o nfsvers=3 11.11.11.1:/fs_1 /mnt/fs_1` was used in the test. The resulting mount information was: `11.11.11.1:/fs_1 on /mnt/fs_1 type nfs (rw,relatime,vers=3,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=11.11.11.1,mountvers=3,mountport=2050,mountproto=udp,local_lock=none,addr=11.11.11.1)`.
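One way to confirm that a client ended up with the settings listed in the table is to parse the option list from its mount line. The sketch below uses a hypothetical helper, `parse_mount_options`, applied to the mount line quoted above. It only inspects text and does not query the kernel.

```python
# Hypothetical helper: parse the option list of a Linux mount line and
# check it against the client settings listed in this report.
import re

MOUNT_LINE = (
    "11.11.11.1:/fs_1 on /mnt/fs_1 type nfs (rw,relatime,vers=3,rsize=1048576,"
    "wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,"
    "mountaddr=11.11.11.1,mountvers=3,mountport=2050,mountproto=udp,"
    "local_lock=none,addr=11.11.11.1)"
)


def parse_mount_options(line: str) -> dict[str, str]:
    """Return the trailing parenthesised options as a dict; bare flags map to ''."""
    match = re.search(r"\(([^)]*)\)\s*$", line)
    if match is None:
        raise ValueError("no option list found")
    options = {}
    for item in match.group(1).split(","):
        key, _, value = item.partition("=")
        options[key] = value
    return options


opts = parse_mount_options(MOUNT_LINE)
expected = {"vers": "3", "rsize": "1048576", "wsize": "1048576", "proto": "tcp"}
for key, value in expected.items():
    assert opts[key] == value, f"{key}: {opts.get(key)!r} != {value!r}"
print("rsize/wsize, NFS version and transport match the report")
```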

None

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 900GB SSD drives used for data; 4 x 15-drive RAID5-9 groups, including 4 coffer disks | RAID-5 | Yes | 60 |
| 2 | One 800GiB NVMe drive per controller used for system data; 7GiB on the 4 coffer disks and the 800GiB NVMe drive form a RAID-1 group | RAID-1 | Yes | 4 |

| Number of Filesystems | 32 |
|---|---|
| Total Capacity | 28800GiB |
| Filesystem Type | thin |

The file system block size was 8KB.
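The capacity figures above can be related with a little arithmetic. The sketch below assumes the "900GB" drive size is in decimal gigabytes and that the 32 filesystems were equally sized; neither assumption is stated in the report. It applies only the 8/9 data fraction of RAID5-9 and makes no attempt to account for spares, coffer-disk system space, or other overheads, which the report does not itemize.

```python
# Capacity arithmetic for the configuration described in this report.
GB = 10**9   # assumption: drive size "900GB" is decimal
GIB = 2**30

drives = 60
drive_bytes = 900 * GB
data_fraction = 8 / 9          # RAID5-9 = 8 data + 1 parity per stripe

raw_gib = drives * drive_bytes / GIB
after_parity_gib = raw_gib * data_fraction

filesystems = 32
total_fs_gib = 28800           # "Total Capacity" reported above
per_fs_gib = total_fs_gib / filesystems   # assumes equally sized filesystems

print(f"raw: {raw_gib:.0f} GiB, after RAID5 parity: {after_parity_gib:.0f} GiB")
print(f"per filesystem: {per_fs_gib:.0f} GiB")
```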

One OceanStor 6800F V5 engine, containing four controllers, was used in the test. Four disk enclosures were connected to the engine, and each enclosure held fifteen 900GB SSDs. The 15 disks in each enclosure formed one storage pool, and 8 filesystems were created in each pool; each controller owned 2 of the 8 filesystems in a pool. The RAID5-9 layout was 8+1 and was applied per stripe, and the stripes were distributed across all 15 drives by a placement algorithm. For example, stripe 1 spanned disk 1 to disk 8 and stripe 2 spanned disk 2 to disk 9; every subsequent stripe was shifted in the same way.
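The idea of spreading fixed-width stripes across a larger pool can be illustrated with a simple rotating placement. The sketch below is hypothetical and is not Huawei's algorithm, which the report does not disclose. Each stripe starts one drive after the previous one and wraps around the 15-drive pool. `STRIPE_WIDTH` is a parameter; 9 corresponds to the stated 8+1 layout. Over one full rotation, every drive carries the same number of stripe members, which is the load-spreading property such layouts aim for.

```python
# Hypothetical rotating stripe placement (illustration only; not the
# vendor's actual algorithm). Drive and stripe numbers are 1-based.
DRIVES_PER_POOL = 15
STRIPE_WIDTH = 9        # RAID5-9: 8 data + 1 parity


def stripe_members(stripe: int, drives: int = DRIVES_PER_POOL,
                   width: int = STRIPE_WIDTH) -> list[int]:
    """Drives used by a stripe: `width` consecutive drives, wrapping around."""
    start = (stripe - 1) % drives
    return [(start + i) % drives + 1 for i in range(width)]


# Count how often each drive is used over one full rotation of 15 stripes.
usage = {d: 0 for d in range(1, DRIVES_PER_POOL + 1)}
for s in range(1, DRIVES_PER_POOL + 1):
    for d in stripe_members(s):
        usage[d] += 1

for s in (1, 2, 15):
    print(s, stripe_members(s))
print("uses per drive over 15 stripes:", set(usage.values()))
```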

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10GbE | 32 | For the client-to-storage network, the clients were connected directly to the storage; no switch was used. There were 32 10GbE connections in total, carrying NFSv3 over TCP/IP to the 8 clients. |

Each controller used two 10GbE cards with 4 ports each, so 8 10GbE ports per controller provided data connectivity to the clients. In total, 32 ports on the 8 clients and 32 ports on the 4 storage controllers were used, with the clients connected directly to the storage. The 4 controllers were interconnected over PCIe to form HA pairs.
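Because no switch was used, every cable joins exactly one client port to one storage port, so the two sides must expose the same number of ports. The short check below recomputes both counts from the per-card figures in this report.

```python
# Port bookkeeping for the direct-attached (switchless) network in this report.
CONTROLLERS = 4
CARDS_PER_CONTROLLER = 2
PORTS_PER_CONTROLLER_CARD = 4

CLIENTS = 8
CARDS_PER_CLIENT = 2
PORTS_PER_CLIENT_CARD = 2

storage_ports = CONTROLLERS * CARDS_PER_CONTROLLER * PORTS_PER_CONTROLLER_CARD
client_ports = CLIENTS * CARDS_PER_CLIENT * PORTS_PER_CLIENT_CARD

# Point-to-point cabling: each client port pairs with exactly one storage port.
assert storage_ports == client_ports
print(storage_ports, client_ports)
```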

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | None | None | None | None | None |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 8 | CPU | Storage Controller | Intel(R) Xeon(R) Gold 5120T @ 2.20GHz, 14 cores | NFS, TCP/IP, RAID and Storage Controller functions |
| 2 | 16 | CPU | Client | Intel(R) Xeon(R) CPU E5-2670 v3 @ 2.30GHz | NFS Client, SUSE Linux OS |

Each OceanStor 6800F V5 Storage Controller contains 2 Intel(R) Xeon(R) Gold 5120T @ 2.20GHz processors. Each client contains 2 Intel(R) Xeon(R) CPU E5-2670 v3 @ 2.30GHz processors.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Main Memory for each OceanStor 6800F V5 Storage Controller | 1024 | 4 | V | 4096 |
| Memory for each client | 128 | 8 | V | 1024 |
| Grand Total Memory Gibibytes | | | | 5120 |
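The totals in the memory table follow from size multiplied by instance count. The small recomputation below uses only the values in the table.

```python
# Recompute the memory table totals (sizes in GiB, as reported).
memory_rows = {
    "storage controller": (1024, 4),  # (GiB per instance, instances)
    "client": (128, 8),
}
totals = {name: size * count for name, (size, count) in memory_rows.items()}
grand_total = sum(totals.values())
print(totals, grand_total)
```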

Main memory in each storage controller was used for the operating system and for caching filesystem data, including the read and write caches.

1. There are three mechanisms for protecting data. For disk failures, the OceanStor 6800F V5 Storage uses RAID. For controller failures, it uses cache mirroring, in which written data is also copied to another controller's cache. For power failures, BBUs supply power so that the storage can flush cached data to disk.
2. No persistent memory was used in the storage. The BBUs supply power for failure recovery, and the 1 TiB of memory in each controller includes the mirror cache. Data was mirrored between controllers.
3. The write cache was smaller than 800GiB, so the 800GiB NVMe drive could hold all of the user write data.

None

None

Please refer to the configuration diagram. Eight clients were used to generate the workload; one client acted as the Prime Client and controlled the other 7. Each client had 4 ports, each connected to a different controller, and through each port the client mounted one filesystem owned by that controller. In total, each client mounted 4 filesystems.
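The mount topology can be checked for consistency with the filesystem layout described earlier (4 pools of 8 filesystems, 2 per controller per pool). The sketch below uses a hypothetical numbering (`fs_1` to `fs_32`, with pool and ownership order assumed). It shows that one mount per client per controller gives each client 4 mounts, gives each controller 8 mounts, and mounts every filesystem exactly once.

```python
# Hypothetical reconstruction of the client/controller/filesystem mapping;
# filesystem numbering and ownership order are assumptions.
from collections import Counter

CLIENTS = 8
CONTROLLERS = 4
POOLS = 4
FS_PER_POOL = 8
FS_PER_CONTROLLER_PER_POOL = 2   # "each controller included 2 filesystems"


def owned_filesystems(controller: int) -> list[str]:
    """Filesystems owned by a controller (0-based): 2 from each of the 4 pools."""
    return [
        f"fs_{pool * FS_PER_POOL + controller * FS_PER_CONTROLLER_PER_POOL + k + 1}"
        for pool in range(POOLS)
        for k in range(FS_PER_CONTROLLER_PER_POOL)
    ]


# mounts[client] = (controller, filesystem) pairs, one per client port.
mounts = {
    client: [(ctrl, owned_filesystems(ctrl)[client]) for ctrl in range(CONTROLLERS)]
    for client in range(CLIENTS)
}

per_client = {c: len(m) for c, m in mounts.items()}
per_fs = Counter(fs for m in mounts.values() for _, fs in m)
per_ctrl = Counter(ctrl for m in mounts.values() for ctrl, _ in m)

print(set(per_client.values()), len(per_fs), set(per_fs.values()), dict(per_ctrl))
```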

There were two SAS I/O modules in the engine, each shared by 2 controllers. Each disk enclosure had 2 connections to the engine, one to each SAS I/O module.
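The back-end cabling can be modeled as a list of enclosure-to-module links. The sketch below is a simplified model (controller sharing of each module is not represented). It confirms that 4 ports are used on each 12-port SAS module, consistent with the hardware table. It also checks that, in this model, every enclosure stays reachable if either module is removed.

```python
# Simplified model of the back-end SAS cabling: 4 dual-connected enclosures
# and 2 SAS I/O modules (sharing between controllers is not modeled).
from collections import Counter

ENCLOSURES = 4
SAS_MODULES = 2
SAS_MODULE_PORTS = 12     # "12-port SAS IO Modules"

# One link from each enclosure to each SAS module.
links = [(enc, mod) for enc in range(ENCLOSURES) for mod in range(SAS_MODULES)]
ports_used = Counter(mod for _, mod in links)

# In this model, removing either module still leaves every enclosure reachable.
for failed in range(SAS_MODULES):
    reachable = {enc for enc, mod in links if mod != failed}
    assert reachable == set(range(ENCLOSURES))

print(len(links), dict(ports_used), "of", SAS_MODULE_PORTS, "ports per module")
```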

None

Generated on Wed Mar 13 16:39:02 2019 by SpecReport
