This post discusses some of the things I realised while coding the raytracer in Structure Synth and some thoughts about GPU raytracing.

Luxion

Back in October, I saw a presentation by Henrik Wann Jensen (known for his work on photon mapping and subsurface scattering) where he demonstrated the Keyshot renderer made by his company Luxion. It turned out to be a very impressive demonstration: the system was able to render very complex scenes (a car with a million polygons), with very complex materials (such as subsurface scattering) and very complex lighting, in real-time on a laptop.

After having downloaded a Keyshot renderer demo, I was able to figure out some of the tricks used to achieve this kind of performance.

- Using panoramic HDRI maps as backgrounds: the use of a panoramic background image gives the illusion of a very complex scene at virtually no computational cost. The 3D objects in the scene even seem to cast shadows on this background image: something which can be done by adding an invisible ground floor. In most cases this simple hack works out quite nicely.

- Image Based Lighting: by using the panoramic HDRI maps to calculate the lighting as well, you achieve very complex and soft lighting, which of course matches the panoramic background. And you don’t have to set up complicated area lights or stuff like that.

- Progressive rendering: images in Keyshot may take minutes before the rendering has converged, but you do not notice. Because Keyshot renders progressively, you can immediately adjust your settings without having to wait for the system. This means the perceived rendering speed is much higher than in classic raytracers (such as POV-Ray).
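The progressive rendering idea can be sketched in a few lines. This is only a minimal Python illustration of the general technique (a running per-pixel average of noisy samples), not how Keyshot actually works; `ProgressiveBuffer` and `make_noisy_sampler` are hypothetical names made up for this example.

```python
import random


class ProgressiveBuffer:
    """Keeps a running per-pixel average, so the image is usable after every pass."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.passes = 0
        self.mean = [0.0] * (width * height)

    def add_pass(self, sample_fn):
        """Take one new sample per pixel and fold it into the running mean."""
        self.passes += 1
        n = self.passes
        for y in range(self.height):
            for x in range(self.width):
                i = y * self.width + x
                # Incremental mean: no need to store the individual samples.
                self.mean[i] += (sample_fn(x, y) - self.mean[i]) / n

    def reset(self):
        """Call when the user changes a setting: start converging again."""
        self.passes = 0
        self.mean = [0.0] * (self.width * self.height)


def make_noisy_sampler(seed):
    """Hypothetical stand-in for a raytracer: true value 0.5 plus noise."""
    rng = random.Random(seed)
    return lambda x, y: 0.5 + rng.uniform(-0.5, 0.5)


if __name__ == "__main__":
    buffer = ProgressiveBuffer(4, 4)
    sampler = make_noisy_sampler(seed=1)
    for _ in range(200):
        buffer.add_pass(sampler)  # a viewer would redraw after each pass
    print(buffer.passes, buffer.mean[0])
```

The first pass is very noisy, but it is available almost immediately, and each later pass only refines it. Changing a setting simply means calling `reset()` and starting over.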

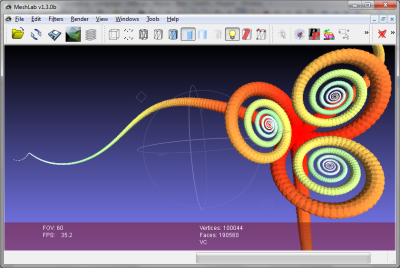

The above image is a Structure Synth model exported in OBJ format, and rendered in Keyshot.

Some of these ideas should be relatively easy to implement. Especially the idea of using panoramic maps as light sources is clever – setting up individual light sources in Structure Synth programmatically would be tedious, and the complex lighting from a panoramic scene is very convincing (at least in Keyshot).
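To give an idea of how little is needed for the panoramic light source, here is a rough Python sketch. It assumes a latitude-longitude (equirectangular) panorama with y as the up direction and -z at the centre of the image, and it estimates diffuse lighting by plain Monte Carlo sampling of the hemisphere. The function names, the brightness-only map and the axis convention are all assumptions for illustration; I do not know how Keyshot does it internally.

```python
import math
import random


def direction_to_uv(dx, dy, dz):
    """Map a direction (y is up) to (u, v) on a latitude-longitude panorama."""
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    dx, dy, dz = dx / length, dy / length, dz / length
    # Longitude -> u, with -z at the horizontal centre (assumed convention).
    u = (0.5 + math.atan2(dx, -dz) / (2.0 * math.pi)) % 1.0
    # Angle from straight up -> v, so v = 0 is the top row of the image.
    v = math.acos(max(-1.0, min(1.0, dy))) / math.pi
    return u, v


def lookup(env, direction):
    """Nearest-neighbour lookup in env, a list of rows of brightness values."""
    height, width = len(env), len(env[0])
    u, v = direction_to_uv(*direction)
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return env[y][x]


def random_unit_vector(rng):
    """Uniformly distributed direction on the unit sphere."""
    while True:
        x, y, z = (rng.gauss(0.0, 1.0) for _ in range(3))
        length = math.sqrt(x * x + y * y + z * z)
        if length > 1e-9:
            return x / length, y / length, z / length


def diffuse_light(env, normal, samples=2000, seed=1):
    """Monte Carlo estimate of the light reflected by a white diffuse surface."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        d = random_unit_vector(rng)
        cos_theta = sum(a * b for a, b in zip(d, normal))
        if cos_theta < 0.0:
            # Flip the sample into the hemisphere around the normal.
            d = tuple(-a for a in d)
            cos_theta = -cos_theta
        total += lookup(env, d) * cos_theta
    # Uniform hemisphere pdf is 1/(2*pi) and the diffuse BRDF is 1/pi,
    # which leaves a factor of 2 / samples.
    return 2.0 * total / samples


if __name__ == "__main__":
    sky = [[1.0, 1.0], [0.0, 0.0]]  # tiny map: bright sky, black ground
    print(diffuse_light(sky, (0.0, 1.0, 0.0)))   # surface facing up
    print(diffuse_light(sky, (0.0, -1.0, 0.0)))  # surface facing down
```

A surface facing the bright half of the map comes out lit and one facing away comes out dark, without a single light source being placed by hand.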

Henrik Wann Jensen also made some interesting comments about GPU computing: essentially he was not very impressed, and seemed convinced that similar performance could be obtained on CPUs, if their resources are utilized properly.

Of course Wann Jensen may be somewhat biased here: after all, Luxion’s biggest competitor (and former partner), Bunkspeed, uses a hybrid GPU/CPU approach in their Bunkspeed Shot raytracer. The raytracing technology used here is a licensed version of Mental Images’ iray engine.

Having tried both raytracers, I must say Luxion’s pure CPU technique was by far the most impressive, but as I will discuss later, GPU raytracing may have other advantages. (And it is really difficult to understand why it should not be possible to benefit from using both CPU and GPU together).

If you haven’t tried Keyshot, it is definitely worth it: I never really liked integrated raytracing environments (such as Blender), because it is almost always so difficult to set up stuff like light sources and materials, but Keyshot is certainly a step forward in usability.

Physically Based Rendering

As I described in my previous post, I made many mistakes during the development of the raytracer in Structure Synth. And all these mistakes could have been avoided, if I had started out by reading ‘Physically Based Rendering’ by Matt Pharr and Greg Humphreys. But then again – part of the fun is trying to figure out new ways to do things, instead of just copying other people.

I have only read fragments of it so far, but still I can wholeheartedly recommend it: even though I’m a physicist myself, I was surprised to see just how far you can get using physical principles to build a complete raytracing framework. It is a very impressive and consistent approach to introducing raytracing, and the book covers nearly all aspects of raytracing I could think of – except one minor thing: I would have loved to see a chapter on GPU raytracing, especially since both authors seem to have extensive experience with this topic.

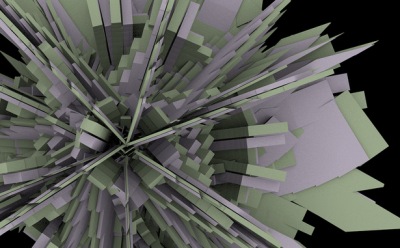

Microcity.pbrt – modeled by Mark Schafer

But I was flattered to see an image created with Structure Synth in the book – and their pbrt raytracer even has integration to Structure Synth!

GPU Based Rendering

Now, for the past months I’ve made a few experiments with GPU based rendering and generative systems using GLSL. And even though Henrik Wann Jensen may be right, and the performance gain of GPUs may be somewhat exaggerated, they do have some advantages which are often ignored:

- GPU programming is easy. If you want to achieve high performance on a CPU, you need to write complex multi-threaded code and do tricky low-level optimizations, such as inlined SSE instructions and custom memory management. On the other hand, GLSL is very easy to write and understand and even comes with built-in support for matrices and vectors. Well, actually GPU programming can also be terribly hard: if you need to share memory between threads, or move data between the GPU and CPU, things can get very ugly (and you will need a more powerful API than GLSL). But for ‘embarrassingly parallel’ problems – for instance if each pixel can be calculated independently – with simple, unshared memory access – GPU programming can be just as easy as for instance Processing.

- Code can be compiled and uploaded to the GPU dynamically. In C++ or Java, making a small change to one part of a program requires a recompilation and restart of the program. But with GLSL (and all other GPU APIs) you get a fast compiler you can use during run-time to create highly efficient multi-threaded code.
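Both points can be mimicked on the CPU. The following Python sketch is only an analogy and not GLSL: a small "pixel program" is kept as source text, compiled while the host program is running, and then evaluated once per pixel with no state shared between pixels. The names `compile_pixel_function` and `render`, and the convention that the program defines a function called `color`, are invented for this example. On a real GPU the per-pixel calls would run in parallel, and the graphics driver would do the compiling.

```python
import math


def compile_pixel_function(source):
    """Compile source text defining color(x, y) at run time and return it."""
    namespace = {"math": math}
    exec(compile(source, "<pixel-program>", "exec"), namespace)
    return namespace["color"]


def render(pixel_fn, width, height):
    """Evaluate pixel_fn at every pixel centre, with coordinates in [0, 1].

    Each pixel depends only on its own coordinates, so the calls could be
    made in any order, or all at once.
    """
    return [
        [pixel_fn((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
        for y in range(height)
    ]


GRADIENT = """
def color(x, y):
    return x
"""

RINGS = """
def color(x, y):
    r = math.hypot(x - 0.5, y - 0.5)
    return 0.5 + 0.5 * math.cos(40.0 * r)
"""

if __name__ == "__main__":
    # Swap the pixel program without restarting the host program.
    for source in (GRADIENT, RINGS):
        image = render(compile_pixel_function(source), 8, 4)
        print(image[0][:3])
```

Editing the source string and compiling again is all it takes to try a new idea, which is the kind of workflow the runtime compiler makes possible.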

The combination of being easy, fast, and dynamic makes GPU rendering wonderful for exploring many types of generative art systems. In fact, probably the biggest hurdle is to set up the boilerplate code required to start a GLSL program. And even though there are many nice tools out there (e.g. Pixel Bender), none of them are really suited for generative systems.

So, for the past month I’ve been working a bit on an integrated environment for exploring GPU accelerated pixel based systems, called Fragmentarium. It is still quite immature, but I plan to release a version (with binary builds) later this January.

This also means that I will use most of my spare time on this project in the coming months, but some of the ideas will probably find use in Structure Synth as well.