The first post discussed how to find the intersection between a camera ray and a fractal, but did not talk about how to color the object. There are two steps involved here: setting up a coloring scheme for the fractal object itself, and the shading (lighting) of the object.

Lights and shading

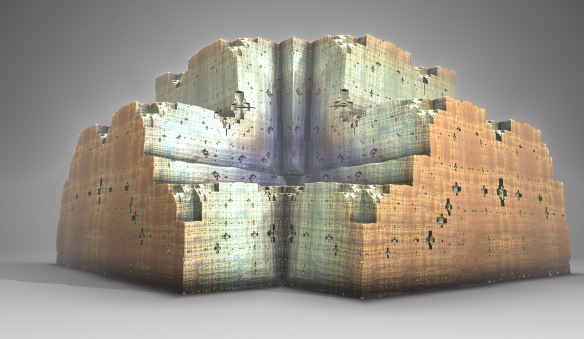

Since we are raymarching our objects, we can use the standard lighting techniques from ray tracing. The most common form of lighting is to use something like Blinn-Phong, and calculate approximated ambient, diffuse, and specular light based on the position of the light source and the normal of the fractal object.
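As a rough illustration of the Blinn-Phong terms, here is a minimal CPU-side sketch in Python (not shader code). The function name `blinn_phong`, the weights, and the shininess exponent are assumptions chosen for the example, not Fragmentarium's actual parameters.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    # Guard against zero-length vectors (e.g. light exactly opposite the eye).
    return tuple(c / length for c in v) if length > 0.0 else tuple(v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def blinn_phong(normal, to_light, to_eye,
                ambient=0.1, diffuse=0.7, specular=0.4, shininess=32.0):
    """Scalar light intensity from ambient, diffuse, and specular terms."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_eye)
    # Half vector between the light and view directions (the 'Blinn' part).
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    n_dot_l = max(dot(n, l), 0.0)
    # No highlight on surfaces facing away from the light.
    spec = max(dot(n, h), 0.0) ** shininess if n_dot_l > 0.0 else 0.0
    return ambient + diffuse * n_dot_l + specular * spec
```

The resulting intensity would typically be multiplied by the base color of the surface; a light hitting the surface head-on gives the sum of all three weights, while a surface facing away from the light only receives the ambient term.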

Surface Normal

So how do we obtain a normal of a fractal surface?

A common method is to probe the Distance Estimator function in small steps along the coordinate system axes and use the numerical gradient obtained from this as the normal (since the normal must point in the direction where the distance field increases most rapidly). This is an example of the finite difference method for numerical differentiation. The following snippet shows how the normal may be calculated, where xDir, yDir, and zDir are small offset vectors along the x, y, and z axes:

vec3 n = normalize(vec3(DE(pos+xDir)-DE(pos-xDir), DE(pos+yDir)-DE(pos-yDir), DE(pos+zDir)-DE(pos-zDir)));
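The same central-difference idea can be tried outside a shader. The Python sketch below is only an illustration: `sphere_de` stands in for a real fractal distance estimator, and the step size `eps` is an arbitrary assumption.

```python
import math

def sphere_de(p, radius=1.0):
    """Stand-in distance estimator: exact distance to a sphere at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius

def estimate_normal(de, p, eps=1e-4):
    """Central-difference gradient of `de` at `p`, normalized to unit length."""
    grad = []
    for axis in range(3):
        hi, lo = list(p), list(p)
        hi[axis] += eps  # probe a small step forward along this axis
        lo[axis] -= eps  # and a small step backward
        grad.append(de(hi) - de(lo))
    length = math.sqrt(sum(g * g for g in grad))
    return tuple(g / length for g in grad)
```

For a sphere the estimated normal should point radially outward, which makes it an easy sanity check before switching to a fractal DE. Note that the division by `2*eps` is omitted because the vector is normalized anyway.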

The original Hart paper also suggested that alternatively, the screen space depth buffer could be used to determine the normal – but this seems to be both more difficult and less accurate.

Finally, as fpsunflower noted in this thread, it is possible to use Automatic Differentiation with dual numbers to obtain a gradient without having to introduce an arbitrary epsilon sampling distance.
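To give an idea of what dual-number differentiation looks like, here is a small Python sketch. The `Dual` class, `dsqrt`, and `gradient` are hypothetical helpers written for this example, and the sphere is again a stand-in: for a real fractal, every operation inside the iteration loop would have to be carried out on dual numbers.

```python
import math

class Dual:
    """A number val + der*eps with eps*eps = 0; `der` carries the derivative."""
    def __init__(self, val, der=0.0):
        self.val, self.der = val, der
    def __add__(self, other):
        other = _lift(other)
        return Dual(self.val + other.val, self.der + other.der)
    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.val - other.val, self.der - other.der)
    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (a*b)' = a*b' + a'*b
        return Dual(self.val * other.val,
                    self.val * other.der + self.der * other.val)

def _lift(x):
    """Promote plain numbers to constants (derivative zero)."""
    return x if isinstance(x, Dual) else Dual(float(x))

def dsqrt(x):
    root = math.sqrt(x.val)
    return Dual(root, x.der / (2.0 * root))  # chain rule for sqrt

def sphere_de(x, y, z, radius=1.0):
    return dsqrt(x * x + y * y + z * z) - radius

def gradient(de, p):
    """Evaluate `de` three times, seeding one coordinate's derivative each time."""
    grad = []
    for axis in range(3):
        args = [Dual(c, 1.0 if i == axis else 0.0) for i, c in enumerate(p)]
        grad.append(de(*args).der)
    return tuple(grad)
```

The gradient comes out exact up to floating-point rounding, with no sampling distance to tune; the cost is that the DE has to be evaluated with the extended arithmetic.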

Ambient Occlusion

Besides the ambient, diffuse, and specular light from Phong shading, one thing that really improves the quality and depth illusion of a 3D model is ambient occlusion. In my first post, I gave an example of how the number of ray steps could be used as a very rough measure of how occluded the geometry is (I first saw this at Subblue’s site – his Quaternion Julia page has some nice illustrations of this effect). This ‘ray step AO’ approach has its shortcomings though: for instance, if the camera ray is nearly parallel to a surface (a grazing incidence), a lot of steps will be used, and the surface will be darkened, even if it is not occluded at all.

Another approach is to sample the Distance Estimator at points along the normal of the surface and use this information to put together a measure for the Ambient Occlusion. This is a more intuitive method, but comes with some other shortcomings: new parameters are needed to control the distance between the samples and their relative weights, and there are no obvious default settings. A description of this ‘normal sampling AO’ approach can be found in Iñigo Quilez’s introduction to distance field rendering.
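A minimal Python sketch of the normal sampling idea follows. It compares the distance stepped along the normal with the distance the DE reports there: in open space the two agree, while nearby geometry makes the DE come up short. The sample spacing `delta`, the halving weights, and `strength` are assumptions for the example (exactly the kind of parameters without obvious defaults), and `scene_de` is a made-up test scene.

```python
import math

def scene_de(p):
    """Test scene: a unit sphere at the origin resting on the plane y = -1."""
    sphere = math.sqrt(sum(c * c for c in p)) - 1.0
    plane = p[1] + 1.0
    return min(sphere, plane)

def normal_sampling_ao(de, p, n, delta=0.1, samples=5, strength=1.0):
    """Return 1.0 for an unoccluded point, smaller values for occluded ones."""
    occlusion = 0.0
    weight = 0.5
    for i in range(1, samples + 1):
        step = i * delta
        sample = tuple(pc + nc * step for pc, nc in zip(p, n))
        # If nothing is nearby, de(sample) equals step and this adds nothing.
        occlusion += weight * (step - de(sample))
        weight *= 0.5  # samples further out count less
    return min(max(1.0 - strength * occlusion, 0.0), 1.0)
```

A point on the open ground plane gets a value of 1.0, while a point on the plane close to where the sphere touches it is darkened.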

In Fragmentarium, I’ve implemented both methods: The ‘DetailAO’ parameter controls the distance at which the normal is sampled for the ‘normal sampling AO’ method. If ‘DetailAO’ is set to zero, the ‘ray step AO’ method is used.

Other lighting effects

Besides Phong shading and ambient occlusion, all the usual tips and tricks in ray tracing may be applied:

Glow – can be added simply by mixing in a color based on the number of ray steps taken (points close to the fractal will use more ray steps, even if they miss the fractal, so pixels close to the object will glow).

Fog – is also great for adding to the depth perception. Simply blend in the background color based on the distance from the camera.

Hard shadows are also straightforward – check if the ray from the surface point to the light source is occluded.

Soft shadows: Iñigo Quilez has a good description of doing softened shadows.

Reflections are pretty much the same – reflect the camera ray in the surface normal, and mix in the color of whatever the reflected ray hits.
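Three of the effects above (hard shadows, reflections, and fog) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Fragmentarium's implementation: the function names, the exponential fog falloff, and the small start offset used to avoid self-shadowing are all choices made for the example.

```python
import math

def sphere_de(p):
    """Stand-in scene: a unit sphere at the origin."""
    return math.sqrt(sum(c * c for c in p)) - 1.0

def in_hard_shadow(de, point, to_light, light_distance,
                   min_dist=1e-3, max_steps=100):
    """March from `point` toward the light; True if something blocks the ray."""
    t = 10.0 * min_dist  # start slightly off the surface
    for _ in range(max_steps):
        if t >= light_distance:
            return False  # reached the light unobstructed
        d = de(tuple(p + t * l for p, l in zip(point, to_light)))
        if d < min_dist:
            return True
        t += d
    return False  # out of steps: treated as lit (an assumption)

def reflect(direction, normal):
    """Mirror `direction` in the plane with unit normal `normal`."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))

def apply_fog(color, background, distance, density=0.1):
    """Blend toward the background color as the distance grows."""
    fog = 1.0 - math.exp(-density * distance)
    return tuple(c * (1.0 - fog) + b * fog for c, b in zip(color, background))
```

For a reflection, the reflected direction would be fed back into the raymarcher and the resulting color mixed with the surface color.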

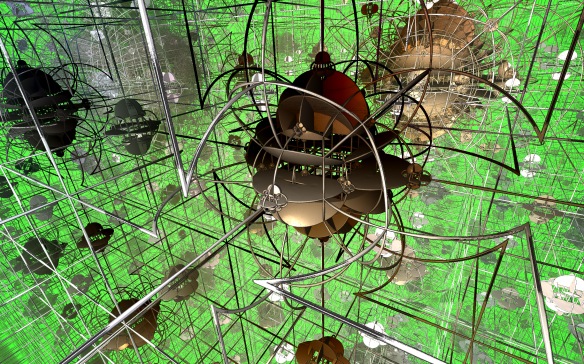

The effects above are all implemented in Fragmentarium as well. Numerous other extensions could be added to the raytracer. For example, environment mapping using HDRI panoramic maps provides very natural lighting and is easy for the user to apply. Simulated depth-of-field also adds great depth illusion to an image, and can be calculated in reasonable time and quality using screen space buffers. More complex materials could also be added.

Coloring

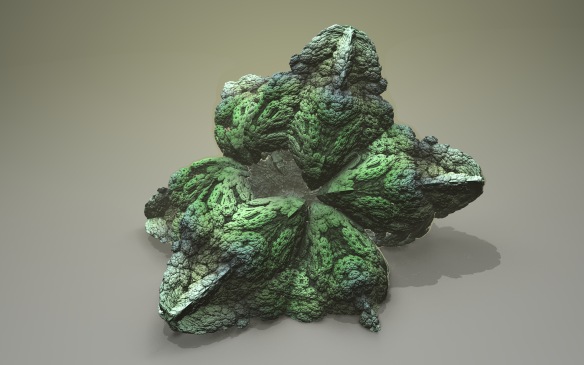

Fractal objects with a uniform base color and simple colored light sources can produce great images. But algorithmic coloring is a powerful tool for bringing the fractals to life.

Algorithmic coloring uses one or more quantities, determined by looking at the orbit, the escape point, or the escape time.

Orbit traps are a popular way to color fractals. This method keeps track of how close the orbit comes to a chosen geometric object. Typical traps include keeping track of the minimum distance to the coordinate system center, or to simple geometric shapes like planes, lines, or spheres. In Fragmentarium, many of the systems use a 4-component vector to keep track of the minimum distance to the three planes x=0, y=0, and z=0, and the minimum distance to the origin. These are mapped to color through the X, Y, Z, and R parameters in the ‘Coloring’ tab.
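The bookkeeping for such a 4-component trap is simple. The Python sketch below uses the ordinary 2D Mandelbrot iteration embedded in the z=0 plane as a stand-in for a 3D system; the function names are made up for the example, and the mapping from trap values to colors is left out.

```python
import math

def update_trap(trap, p):
    """Component-wise minimum of the distances to the planes x=0, y=0, z=0
    and to the origin."""
    x, y, z = p
    r = math.sqrt(x * x + y * y + z * z)
    return (min(trap[0], abs(x)),
            min(trap[1], abs(y)),
            min(trap[2], abs(z)),
            min(trap[3], r))

def mandelbrot_orbit_trap(c, max_iter=50, escape_radius=2.0):
    """Run z -> z*z + c and return the trap values collected along the orbit."""
    z = 0j
    trap = (math.inf, math.inf, math.inf, math.inf)
    for _ in range(max_iter):
        z = z * z + c
        trap = update_trap(trap, (z.real, z.imag, 0.0))
        if abs(z) > escape_radius:
            break
    return trap
```

Each of the four returned values could then be used to blend in a separate color, weighted by user-controlled parameters.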

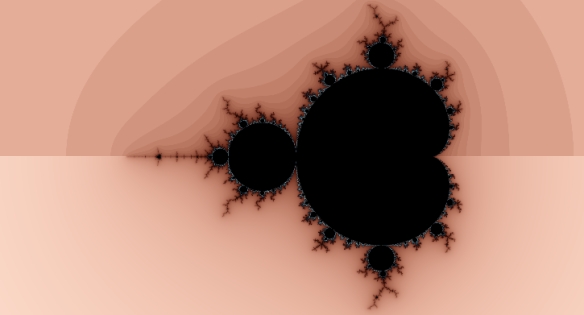

The iteration count is the number of iterations it takes before the orbit diverges (becomes larger than the escape radius). Since this is an integer number it is prone to banding, which is discussed later in this post. One way to avoid this is by using a smooth fractional iteration count:

// Fractional iteration count ('smooth' itself is a reserved word in GLSL 1.30 and later).
float smoothIter = float(iteration) + log(log(EscapeRadiusSquared))/log(Scale) - log(log(dot(z,z)))/log(Scale);

(For a derivation of this quantity, see for instance here)

Here ‘iteration’ is the number of iterations, and dot(z,z) is the squared length of z at the point of escape. There are a couple of things to notice. First, the formula involves a characteristic scale, referring to the scaling factor in the problem (e.g. 2 for a standard Mandelbrot, 3 for a Menger). It is not always possible to obtain such a number (e.g. for Mandelboxes or hybrid systems). Secondly, if the smooth iteration count is used to look up a color in a palette, a constant offset may be ignored, which means the second term can be dropped. Finally, which ‘log’ functions should be used? This does not matter as long as they are used consistently: since all log functions are proportional to each other, the ratio of two logs does not depend on the base used. For the inner logs (e.g. log(dot(z,z))), changing the base results in a constant offset to the overall term, so again this will just result in an offset in the palette lookup.
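The formula can be tried on the standard 2D Mandelbrot set, where the scale is 2. The Python sketch below is only an illustration; the function name, the escape radius, and the choice to count iterations from zero are assumptions (the latter only shifts the result by a constant).

```python
import math

def smooth_iteration(c, max_iter=200, escape_radius_squared=1e4, scale=2.0):
    """Fractional escape count for z -> z*z + c, or None if the orbit stays bounded."""
    z = 0j
    for iteration in range(max_iter):
        z = z * z + c
        r2 = z.real * z.real + z.imag * z.imag  # corresponds to dot(z,z)
        if r2 > escape_radius_squared:
            return (iteration
                    + math.log(math.log(escape_radius_squared)) / math.log(scale)
                    - math.log(math.log(r2)) / math.log(scale))
    return None
```

Because r2 exceeds the squared escape radius at the moment of escape, the last term is always larger than the second, so the result lies below the integer count, and the two terms together fill in the gap between neighbouring integer bands.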

The lower half of this image uses a smooth iteration count.

Conditional Path Coloring

(I made this name up – I’m not sure there is an official name, but I’ve seen the technique used several times in Fractal Forums posts.)

Some fractals may have conditional branches inside their iteration loop (sometimes disguised as an ‘abs’ operator). The Mandelbox is a good example: the sphere fold performs different actions depending on whether the length of the iterated point is smaller or larger than a set threshold. This makes it possible to keep track of a color variable, which is updated depending on the path taken.
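As an illustration, the Python sketch below runs a Mandelbox-style iteration and accumulates a color value depending on which branch of the sphere fold was taken. The fold radii, the scale, and especially the per-branch weights are assumptions picked for the example; any scheme that updates a variable per branch would work the same way.

```python
def box_fold(v, limit=1.0):
    """Reflect each component back across +/-limit if it lies outside."""
    return tuple(2.0 * min(max(c, -limit), limit) - c for c in v)

def sphere_fold(v, min_r2=0.25, fixed_r2=1.0):
    """Return the folded point and the index of the branch that was taken."""
    r2 = sum(c * c for c in v)
    if r2 < min_r2:
        factor, branch = fixed_r2 / min_r2, 0  # inner region: linear scaling
    elif r2 < fixed_r2:
        factor, branch = fixed_r2 / r2, 1      # middle region: sphere inversion
    else:
        factor, branch = 1.0, 2                # outer region: unchanged
    return tuple(c * factor for c in v), branch

def mandelbox_path_color(c, scale=2.0, iterations=10,
                         branch_weights=(1.0, 0.5, 0.0)):
    """Average of the per-branch weights along the orbit, in [0, 1]."""
    z = c
    color = 0.0
    for _ in range(iterations):
        z = box_fold(z)
        z, branch = sphere_fold(z)
        color += branch_weights[branch]  # update depends on the path taken
        z = tuple(scale * zc + cc for zc, cc in zip(z, c))
    return color / iterations
```

The returned value can be used directly as a palette index or mixed with other coloring quantities such as orbit traps.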

Many other types of coloring are also possible, for example based on the normal of the surface, spherical angles of the escape time points, and so on. Many of the 2D fractal coloring types can also be applied to 3D fractals. UltraFractal has a nice list of 2D coloring types.

Improving Quality

Some visual effects and colorings are based on integer quantities – for example, glow is based on the number of ray steps. This will result in visible boundaries between the discrete steps, an artifact called banding.

The smooth iteration count introduced above is one way to get rid of banding, but it is not generally applicable. A more generic approach is to add some kind of noise into the system. For instance, by scaling the length of the first ray step for each pixel by a random number, the banding will disappear – at the cost of introducing some noise.
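The effect of scaling the first step can be seen in a tiny Python raymarcher. This is a sketch with assumed names and thresholds: `first_step_scale` would be a per-pixel random number in (0, 1], for example drawn from `random.random()`.

```python
import math

def sphere_de(p):
    """Stand-in scene: a unit sphere at the origin."""
    return math.sqrt(sum(c * c for c in p)) - 1.0

def march(de, origin, direction, first_step_scale=1.0,
          min_dist=1e-3, max_steps=100, max_dist=20.0):
    """Return (number of steps taken, distance travelled along the ray)."""
    t = 0.0
    for step in range(max_steps):
        d = de(tuple(o + t * dc for o, dc in zip(origin, direction)))
        if d < min_dist or t > max_dist:
            return step, t
        # Only the very first step is shortened; later steps use the full DE.
        t += d * (first_step_scale if step == 0 else 1.0)
    return max_steps, t
```

With a scale of 1.0 a ray fired straight at the sphere from three units away hits after one step; with a scale of 0.5 it hits at the same distance but needs two steps. Randomizing the scale per pixel therefore spreads the step count (and anything derived from it, such as glow) over neighbouring values instead of producing sharp bands.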

Personally, I much prefer noise to banding – in fact I like the noisy, grainy look, but that is a matter of preference.

Another important issue is aliasing: if only one ray is traced per pixel, the image is prone to aliasing and artifacts. Using more than one sample per pixel will reduce both aliasing and noise. There are many ways to oversample the image – different strategies exist for choosing the samples in a way that optimizes the image quality, and there are different ways of weighting (filtering) the samples for each pixel. Physically Based Rendering has a very good chapter on sampling and filtering for ray tracing, and this particular chapter is freely available here:

In Fragmentarium there is some simple oversampling built-in – by setting the ‘AntiAlias’ variable, a number of samples are chosen (on a uniform grid). They are given the same weight (box filtered). I usually only use this for 2D fractals – because they render faster, which allows for a high number of samples. For 3D renders, I normally render a high-resolution image, and downscale it in an image editing program – this seems to create better quality images for the same number of samples.
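Uniform grid sampling with a box filter amounts to averaging the shading function over the centers of an n x n grid of sub-pixels. The Python sketch below shows the idea with a scalar (grayscale) shading function; `supersample` and `shade` are hypothetical names, and this is not Fragmentarium's actual code.

```python
def supersample(shade, px, py, samples_per_axis=2):
    """Average `shade(x, y)` over a uniform grid inside the pixel (px, py)."""
    n = samples_per_axis
    total = 0.0
    for j in range(n):
        for i in range(n):
            # Sample at the center of each of the n x n sub-cells.
            x = px + (i + 0.5) / n
            y = py + (j + 0.5) / n
            total += shade(x, y)
    return total / (n * n)  # equal weights: a box filter
```

For a hard vertical edge running through the middle of a pixel, a 2 x 2 grid returns 0.5 instead of snapping to 0 or 1, which is what smooths the staircase pattern along edges.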

Part III discusses how to derive and work with Distance Estimator functions.

Thank you very, very much.

My interest in crazy fractals and mad graphics had waned a bit in the last ten years, and you’ve thrown me right back to being 11 and wondering how I could implement Scientific American’s Mathematical Recreations sections on a BBC micro with almost no idea how to program, and even less idea what a complex number was.

A few months back I saw one of those mind blowing 4k demos, and then today I stumbled on your blog that explains how they’re working, which is quite a relief; I was starting to think it must be witchcraft. The simplicity of the ray tracer using distance estimation is absolutely stunning and the result is beautiful and gloriously stark – thank you so much for sharing it.

I’m going to have a look through your new post today, and I’m very much looking forward to the promised section three! I’m hoping you’ll be covering distance estimators in lots of detail! I noticed that Wikipedia covers distance estimators for the Mandelbrot set a bit, so I’ll see how I get on with that for now.

You see, putting together your posts with something else I’ve been learning recently, you’ve inspired me with an absolutely fiendish idea that I think might just produce a really stonking Mandelbrot variant – I’ll let you know!

Many thanks,

Benjohn

Thanks for your comments!

The Distance Estimator stuff on Wikipedia is quite hairy (the Koebe 1/4 Theorem) – so I’ll be aiming for a less theoretical presentation 🙂

Looking forward to seeing your Mandelbrot variant,

Mikael.

In my experience, using the z-buffer for the normals, or better, using limited ray-stepping on rays at 1/2 pixel offsets from the central ray (even allowing users to control this offset), is much more successful than relying on the finite difference method using the localised (analytical) DE values. The reason is simply that the objects are fractal: these values rely on the accuracy of the DE, with the assumption that it’s an accurate linear function, but in many cases it’s not that accurate and in nearly all it isn’t linear.

In practice using the DE estimates has the effect of smoothing the surface as compared to using extra limited traces to get the “true” adjacent surface points.

Of course, from the point of view of shaders, using the DE values is massively better in terms of speed 😉

I still haven’t tried the automatic differentiation method because I only just got around to looking at this:

http://en.wikipedia.org/wiki/Automatic_differentiation#Automatic_differentiation_using_dual_numbers

Hi David, I haven’t tried z-buffer normal estimation (because that would require two passes with shader code), but I believe all screen-space methods are prone to artifacts – for instance, imagine trying to estimate a normal for a pixel whose z-buffer neighbour doesn’t hit the fractal. Screen-space normals are also finite difference approximations, but you cannot control the delta step size here: two neighbour pixels may have a large z-buffer difference. Also, your normals will depend on the pixel size (or rather, the z-buffer size). Of course, the only true test would be visual comparison 🙂

I’ve been loosely using these tutorials and Hart’s papers to write my own DE raymarcher (if that’s the correct terminology). I’ve encountered a problem I cannot seem to solve: my distance estimation formulas have been returning zero or negative values.

Is there something wrong with this distance estimator, or is it my raymarcher?

float sphereDE(vec3 p) {
    return length(p*vec3(1.0, 1.0, 2.0))-0.3;
}

If I understood the Appendices of Hart’s paper on sphere tracing, this should work.

These tutorials have been extremely helpful.

@Austin – It is perfectly okay for distance estimators to return negative numbers if you are inside objects – it will even provide better results: see the sphere example at the top of: http://blog.hvidtfeldts.net/index.php/2011/08/distance-estimated-3d-fractals-iii-folding-space/